Physicist: Chaos theory, despite what Jurassic Park may lead you to believe, has almost nothing to do with making actual predictions, and is instead concerned with trying to figure out how much we can expect our predictions to suck.

“Pure chaos” is the sort of thing you might want to argue about in some kind of philosophy class, but there are no examples of it in reality. For example, even if you restrict your idea of chaos to some method of picking a number at random, you find that you can’t get a completely unpredictable number. There will always be some numbers that are more likely or less likely. Point is: “pure chaos” and “completely random” aren’t real things.

“Chaos” means something very particular to its isolated and pimply practitioners. Things like dice rolls or coin flips may be random, but they’re not chaotic.

Dr. Ian Malcolm, shown here distracting a T-rex with a road flare, is one of the few completely accurate depictions of a chaos theorist in modern media.

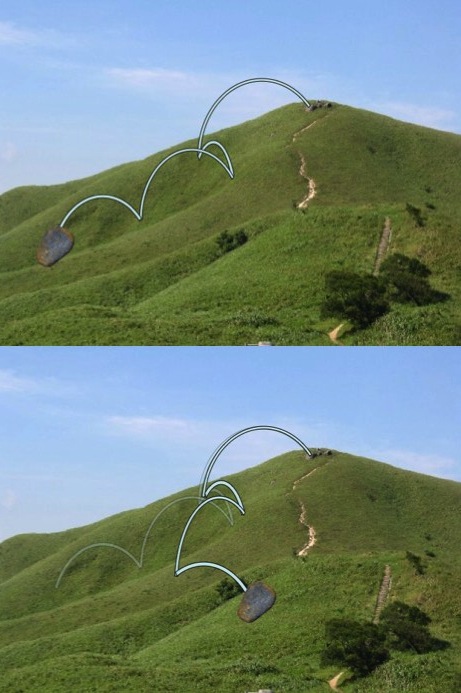

A chaotic system is one that has well-understood dynamics, but that also has strong dependence on its initial conditions. For example, if you roll a stone down a rocky hill, there’s no mystery behind why it rolls or how it bounces off of things in its path (well-established dynamics). Given enough time, coffee, information, and slide rules, any physicist would be able to calculate how each impact will affect the course of the tumbling stone.

Although the exact physics may be known, tiny errors compound and trajectories that start similarly end differently. Chaos!

But there’s the problem: no one can have access to all the information, there’s a diminishing return for putting more time into calculations, and slide rules haven’t really been in production since the late ’70s.

So, when the stone hits another object on its ill-fated roll down the hill, there’s always some error with respect to how/where it hits. That small error means that the next time it hits the ground there’s even more error, then even more, and so on. This effect, initially small errors eventually turning into huge errors, is called “the butterfly effect”. Pretty soon there’s nothing that can meaningfully be said about the stone. A world stone-chucking expert would have no more to say than “it’ll end up at the bottom of the hill”.

Chaotic systems have known dynamics (we understand the physics), but have a strong dependence on initial conditions. So, rolling a stone down a hill is chaotic because changing the initial position of the stone can have dramatic consequences for where it lands, and how it gets there. If you roll two stones side by side they could end up in very different places.

Putting things in orbit around the Earth is not chaotic, because if you were to put things in orbit right next to each other, they’d stay in orbit right next to each other. Slight differences in initial conditions result in slight differences later on.

The position of the planets, being non-chaotic, can be predicted accurately millennia in advance, and getting more accurate information makes our predictions substantially more accurate. But, because weather is chaotic, a couple of days is the best we can reasonably do (ever). Doubling the quality of our information or simulations doesn’t come close to doubling how far into the future we can predict. Few hours maybe?

Answer gravy: When you’re modeling a system in time you talk about its “trajectory”. That trajectory can be through a real space, like in the case of a stone rolling down a hill or a leaf on a river, or the space can be abstract, like “the space of stock-market prices” or “the space of every possible weather pattern”. With the rock rolling down the hill you just need to keep track of things like: how fast and in which direction it’s moving, its position, how it’s rotating, that sort of thing. So, at least 6 variables (3 for position, and 3 for velocity). As the rock falls down the hill it spits out different values for all 6 variables and traces out a trajectory (if you restrict your attention to just its position, it’s easy to actually draw a picture like above). For something like weather you’d need to keep track of a hell of a lot more. A good weather simulator can keep track of pressure, temperature, humidity, wind speed and direction, for a dozen layers of atmosphere over every 10 mile square patch of ground on the planet. So, at least 100,000,000 variables. You can think of changing weather patterns around the world as slowly tracing out a path through the 100 million dimensional “weather space”.
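For the curious, here’s a back-of-the-envelope check on that “100 million” figure. This is only a rough sketch: the Earth’s surface area (about 197 million square miles), the reading of “10 mile square” as a 10 mile by 10 mile patch, and the count of 5 variables per cell are assumptions made here, not numbers from any real weather model.

```python
# Rough count of the dimensions of "weather space".
# All constants are illustrative assumptions, not taken from a real simulator.
EARTH_SURFACE_SQ_MILES = 196.9e6  # approximate surface area of the Earth
PATCH_SIDE_MILES = 10             # assumed: each patch is 10 miles by 10 miles
LAYERS = 12                       # "a dozen layers of atmosphere"
VARIABLES_PER_CELL = 5            # pressure, temperature, humidity, wind speed, wind direction


def weather_dimensions():
    """Return (number of ground patches, total number of tracked variables)."""
    patches = EARTH_SURFACE_SQ_MILES / PATCH_SIDE_MILES ** 2
    return patches, patches * LAYERS * VARIABLES_PER_CELL


patches, dimensions = weather_dimensions()
print(f"{patches:,.0f} patches, roughly {dimensions:,.0f} variables")
```

Under those assumptions the count lands a little over a hundred million, which is in line with the figure quoted above.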

Chaos theory attempts to describe how quickly trajectories diverge using “Lyapunov exponents”. Exponents are used because, in general, trajectories diverge exponentially fast (so there’s that). In a very hand-wavy way, if things are a distance $D$ apart, then in a time-step they’ll be $Dh$ apart. In another time-step they’ll be $(Dh)h = Dh^2$ apart. Then $Dh^3$, then $Dh^4$, and so on. Exponential!

Because mathematicians love the number e so damn much that they want to marry it and have dozens of smaller e’s (eeeeeeee), they write the distance between trajectories as $D(t) = D_0 e^{\lambda t}$, where $D(t)$ is the separation between (very nearby) trajectories at time $t$, and $D_0$ is the initial separation.

$\lambda$ is the Lyapunov exponent. Generally, at about the same time that trajectories are no longer diverging exponentially (which is when Lyapunov exponents become useless) the predicting power of the model goes to crap, and it doesn’t matter anyway.
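To see how the hand-wavy “multiply by h every time-step” picture lines up with the exponential form, here is a short worked step. It’s a sketch that assumes time-steps of length 1 and the same stretch factor $h$ at every step, neither of which is exactly true for a real system.

```latex
% After n unit time-steps, with a constant stretch factor h per step:
D(n) = D_0 h^n = D_0 e^{n \ln h}
% Comparing with D(t) = D_0 e^{\lambda t} at t = n gives
\lambda = \ln h
```

So $h > 1$ (errors grow each step) corresponds to $\lambda > 0$, and $h < 1$ (errors shrink each step) corresponds to $\lambda < 0$.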

Notice that if $\lambda$ is negative, then the separation between trajectories will actually decrease. This is another pretty good definition of chaos: a positive Lyapunov exponent.
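This also gives a rough way to see why better measurements buy so little extra forecasting time. The sketch below assumes the separation really does follow $D(t) = D_0 e^{\lambda t}$ (with $\lambda$ the Lyapunov exponent) right up until it hits some tolerance where the prediction is declared useless. The function name, the tolerance, and the value of the exponent are all made up for illustration.

```python
import math


def prediction_horizon(d0, tolerance, lyapunov):
    """Time for an initial error d0 to grow to `tolerance`,
    assuming D(t) = d0 * exp(lyapunov * t) the whole way."""
    return math.log(tolerance / d0) / lyapunov


lam = 1.0  # hypothetical Lyapunov exponent, in units of 1/day
base = prediction_horizon(1e-3, 1.0, lam)      # some initial measurement error
better = prediction_horizon(0.5e-3, 1.0, lam)  # same system, half the error

print(f"horizon: {base:.2f} days, with half the error: {better:.2f} days")
print(f"extra time gained: {better - base:.2f} days")
```

Under these assumptions, halving the initial error only adds $\ln(2)/\lambda$ to the horizon, no matter how good the measurement already was. The gain is additive, not multiplicative.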

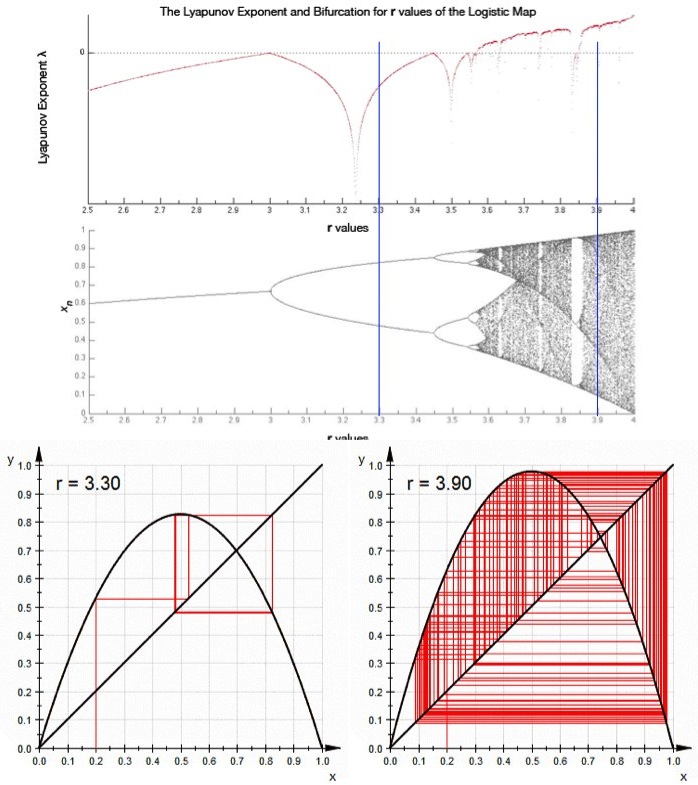

A beautiful, and more importantly fairly simple, example of chaos is the “Logistic map”. You start with the function $f(x) = rx(1-x)$, pick any initial point x0 between 0 and 1, then feed that into f(x). Then take what you get out, and feed it back in. That is: x1 = f(x0), x2 = f(x1), … This is written “recursively” as “$x_{n+1} = rx_n(1-x_n)$”. The reason this is a good model to toy around with is that you can change r and get wildly different behaviors.
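Here’s a minimal sketch of that feed-it-back-in procedure, using the standard logistic map f(x) = r·x·(1 − x). The function names and the particular values of r and x0 are just illustrative choices.

```python
def logistic(x, r):
    """One application of the logistic map f(x) = r * x * (1 - x)."""
    return r * x * (1 - x)


def iterate(x0, r, n):
    """Return [x0, x1, ..., xn], where each value is f applied to the previous one."""
    xs = [x0]
    for _ in range(n):
        xs.append(logistic(xs[-1], r))
    return xs


# Try a few values of r with the same starting point and compare where they end up.
for r in (0.5, 2.5, 3.3, 3.9):
    tail = iterate(0.2, r, 200)[-4:]
    print(r, [round(x, 4) for x in tail])
```

Changing r in the loop is the whole game: the same three lines of arithmetic settle down, oscillate, or bounce around depending on that one number.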

For 0<r<1, xn converges to 0, regardless of the initial value, x0. So, nearby initial conditions just get closer together, and $\lambda < 0$.

For 1<r<3, xn converges to one point (specifically, $\frac{r-1}{r}$), regardless of x0. So, again, $\lambda < 0$.

For 3<r<3.57, xn oscillates between several values. But, regardless of the initial condition, the values of xn still settle into the same set of values (same “trajectory”).

But, for 3.57<r≤4, xn just bounces around and never converges to any particular value. When you pick two initial values close to each other, they stay close for a while, but soon end up bouncing between completely unrelated values (their trajectories diverge exponentially). This is the “chaotic regime”, where $\lambda > 0$.
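You can watch two nearby starting points either stick together or fly apart with a few lines of code. This is a sketch using the standard logistic map f(x) = r·x·(1 − x); the starting point, the initial gap of 1e-9, and the number of steps are arbitrary choices.

```python
def logistic(x, r):
    """One application of the logistic map f(x) = r * x * (1 - x)."""
    return r * x * (1 - x)


def separation(x0, delta, r, n):
    """Track |a - b| over n steps for two trajectories starting at x0 and x0 + delta."""
    a, b = x0, x0 + delta
    gaps = []
    for _ in range(n):
        gaps.append(abs(a - b))
        a, b = logistic(a, r), logistic(b, r)
    return gaps


for r in (2.5, 3.9):
    gaps = separation(0.2, 1e-9, r, 200)
    print(f"r = {r}: largest gap {max(gaps):.3g}, final gap {gaps[-1]:.3g}")
```

For r = 2.5 the gap shrinks away to nothing, while for r = 3.9 a difference in the ninth decimal place grows until the two trajectories have nothing to do with each other.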

(bottom) Many iterations of the logistic map for r = 3.30 (2 value region) and r = 3.9 (chaotic region). (middle) The values that xn converges to, regardless of x0, for various values of r. The blue lines correspond to the examples. (top) Lyapunov exponent of the logistic map for various values of r. Notice that the value is always zero or negative until the system becomes chaotic.
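A curve like the one in the top panel can be estimated numerically. The sketch below uses the standard recipe for one-dimensional maps, which is to average ln|f′(xn)| along a long trajectory, with f′(x) = r(1 − 2x) for the logistic map. This isn’t necessarily how the figure was made, and the burn-in length, sample count, and starting point are arbitrary choices.

```python
import math


def lyapunov(r, x0=0.2, burn_in=1000, n=10000):
    """Estimate the Lyapunov exponent of the logistic map x -> r*x*(1-x)
    by averaging ln|f'(x)| = ln|r*(1 - 2x)| along a trajectory."""
    x = x0
    for _ in range(burn_in):  # discard the transient before averaging
        x = r * x * (1 - x)
    total = 0.0
    for _ in range(n):
        slope = abs(r * (1 - 2 * x))
        if slope == 0:  # landed exactly on x = 0.5, where errors are wiped out
            return float("-inf")
        total += math.log(slope)
        x = r * x * (1 - x)
    return total / n


for r in (0.5, 2.5, 3.3, 3.9):
    print(f"r = {r}: lambda is about {lyapunov(r):.3f}")
```

The sign is the thing to look at: negative for the values of r where everything settles down, positive in the chaotic regime.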

Long story short, chaos theory is the study of how long you should trust what your computer simulations say before you should start ignoring them.

Good article, but you made a mistake when you state that two objects placed in orbit right next to each other will remain next to each other. Orbits follow a circular plane and as such there are no parallel paths along them.

You’re absolutely right.

I was trying not to go into too much detail on that. Two things in orbit next to each other will drift back and forth and switch positions twice an orbit. The important thing (chaotically speaking) is that if they start at a very small distance from each other, that distance will not increase exponentially. In fact, in this case, the distance stays bounded.

technically they are not in circular planes, they are actually in a perpetual state of free fall (straight line). it just so happens that the gravitational attraction makes it appear to be circular because the earth happens to be mostly round. both sides of the argument can be validated, so why even bring this point up? 😮

Straight lines through a curved spacetime, sure.

But I don’t think anybody wants to go all general relativistic in here.

The important thing (if it can be so called) is that I made an omission on the behavior of orbiting stuff, that PJ caught.

Why do chaotic trajectories diverge exponentially at all? I mean they could have diverged via some other mechanism, but the exponential divergence is the one that’s taken. Why so?


You can think of it (simply) in terms of a tree diagram, where each divergence has divergences based in it. So, if each set unit of time corresponds to one branching of the tree diagram you are constructing, and there are two options possible at each branch, then for time (branch) one, there are two options. At the next branch point, there are two more options for each of the original options, for a total of four. At the next branch point, there are 8, and so on, increasing exponentially in number. Essentially, if you randomly choose one option at each branch point, and consider only one to be the TRUE answer at the final end of our tree diagram, for each successive branch point we have a correspondingly exponentially smaller chance of being right. So, the first branch point yields a 1/2 chance of being right, the next, only a 1/4 chance (because you had to be right BOTH times, and choosing incorrectly the first time means you didn’t even get a shot at the two that stemmed from the correct random selection at branch point one), and the next, a 1/8 chance, then 1/16, then 1/32, next 1/64, and so on. Exponentially diverging.

hi.

two questions:

1. if you bend a wire a couple of times, the wire becomes hot and finally breaks. why does this happen?

2. if you pour a few different fluids into a bottle or a glass, let’s say water and oil, they don’t mix together. after a while they separate. what do we call that process?

sincerely,

aj
