The original question was: Information doesn’t behave like any other quantifiable property. For example, to describe something which can be in any of 8 states, I would need 3 bits of information. To describe something which can be in any of 5 states you also need 3 bits of information. This implies that the amount of information needed to describe a compound system is not a function of the amount of information needed to describe each component system.

Physicist: The amount of information in a compound system is the sum of the amounts of information needed to describe its components (assuming those components are independent). However, describing information in bits (0’s and 1’s), gives you the impression that the number of bits in something should be a whole number. After all, how can something have 1.5 bits of information? Either there’s a single 0 or 1, or there are two 0’s or 1’s. As it happens, you can have a non-integer number of bits, but the reasoning behind exactly how is a little subtle.

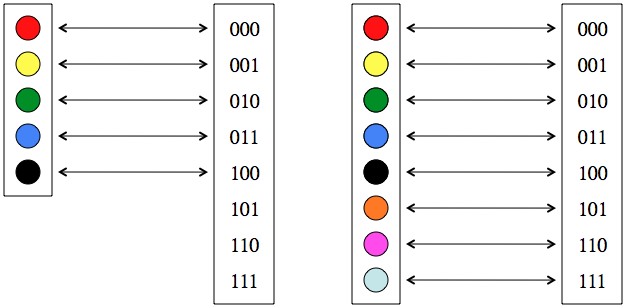

In the 8-state example it takes exactly 3 bits to specify a state. Three bits are needed to specify any one state, and any one state can specify any 3 bit combination.

Two 8-state systems together are a 64-state system that takes 6 bits to describe (6=3+3). Easy nuff.

It takes 3 bits to distinguish between 5 things, but 3 bits contain a little more information; enough to distinguish between 8 things.

In the 5 state example it takes 3 bits to specify the state, however the state cannot specify any 3 bit combination. This means that a 5-state system contains less than 3 bits and yet somehow more than 2.

In general, 1 bit can distinguish between 2 things, 2 bits can distinguish between 4, 3 can distinguish between 8, and N bits can distinguish between 2N things. So if you’ve got M states, and M < 2N, then N bits will be enough to distinguish between them. For example, it takes 5 bits to specify a Greek, English, or Arabic letter (24, 26, and 28 characters) and 6 bits to specify a Cyrillic letter (33 characters). Taking the log base 2 of both sides turns M < 2N, into log2(M) < N. That is, the number of bits you need to distinguish between M states is about log2(M).

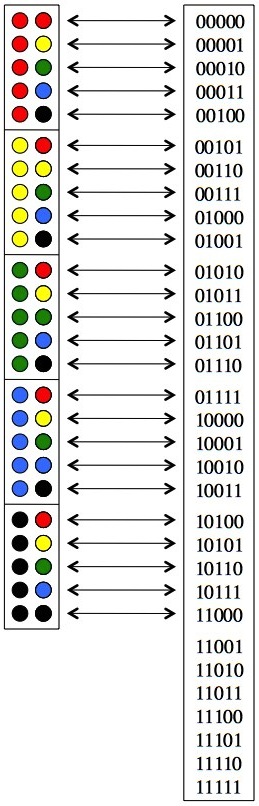

Two 5-state systems together are the same as a 25-state system. It only takes 5 bits to describe a 25-state system (25 < 32 = 25), as opposed to the 6 you might guess from the fact that 3 bits are needed to describe each of the 5-state systems individually.

A pair of 5-state systems can be arranged in 25 combinations and can be described by 5 bits (which have 32 combinations).

Keep in mind that information theory originally branched off of communication theory. So rather than thinking about describing a single system, information theorists think about describing lots of systems, one after another, like letters or numbers (like what you’re reading now), and then conveying that description to someone else. Often, you can save a little data by describing several states at the same time. In this case, by describing pairs of 5-state systems, instead of describing each system one at a time, you save 1 bit.

The more M-state systems you describe all at once the closer you’ll get to log2(M). If you have a lot of 5 state systems and you describe them together you find that it’ll take approximately log2(5) = 2.3219 bits (22.3219 ≈ 5) to describe each system on average. For example, if you describe 100 5-state systems together you’re describing a 5100-state system (forgive me for not writing out the entire number), and you’ll need 233 bits. 2.33 bits per system, by the way, is pretty close to log2(5).

Answer Gravy: Even cooler, since information is defined in terms of a system being described over and over, it’s affected by probabilities (a single instance of something doesn’t really have probabilities). If the system you’re trying to describe tends to show up in some states more than others (like “e” in English), then it would be nice to describe it with a shorter series of bits than rarer states (like “q” in English). It turns out (and it’s not immediately obvious why, so don’t stress) that the information, I, of a system with states 1, 2, 3, …, n, where the probability of each state is given by p1, p2, p3, …, pn, is I = -p1log2(p1) – p2log2(p2) – … – pnlog2(pn). When the probabilities are all the same (), then this reduces to what the post has been talking about so far: I = log2(n).

So, for example, if you see A 1/2 the time, and B and C 1/4 of the time, then in bits, the average amount of information in the system is

You can optimally encode this system into bits using “Huffman coding“: A=0, B=10, and C=11. So for example, 0111101110001010010 = ACCACBAABBAB. The average length of the code for each letter is (length of code times probability), which is a little shorter than log2(3) = 1.585. In Huffman coding the most commonly seen state is always assigned “0”, leading to devastatingly funny comp-sci jokes like “0 = screw you”.

The effect of probabilities on written English (like, e’s are common, q is always followed by u, every word has a vowel, etc.), according to Shannon’s somewhat ad-hoc experiments, reduces the information from the expected 4.7 bits (log2(26)) per letter to only about 2.3 bits per letter.

So, you can increase information density by shortening the code for the most commonly used things. This happens very naturally in everyday speech. Those things more likely to be talked about tend to have shorter names. For example, “the world wide computer network that the kids today are so excited about”, “the currently presiding president of the United States of America”, and “the dog with whom I live, for which I feel nothing, that from time to time responds to the name ‘Professor Emeritus Meowington III, esq.’ “, are almost always referred to in much more compact forms like “the net”, “the president”, and “the dog”.

It’s from here.