Physicist: Nope! Calculus is exact. For those of you unfamiliar with calculus, what follows is day 1.

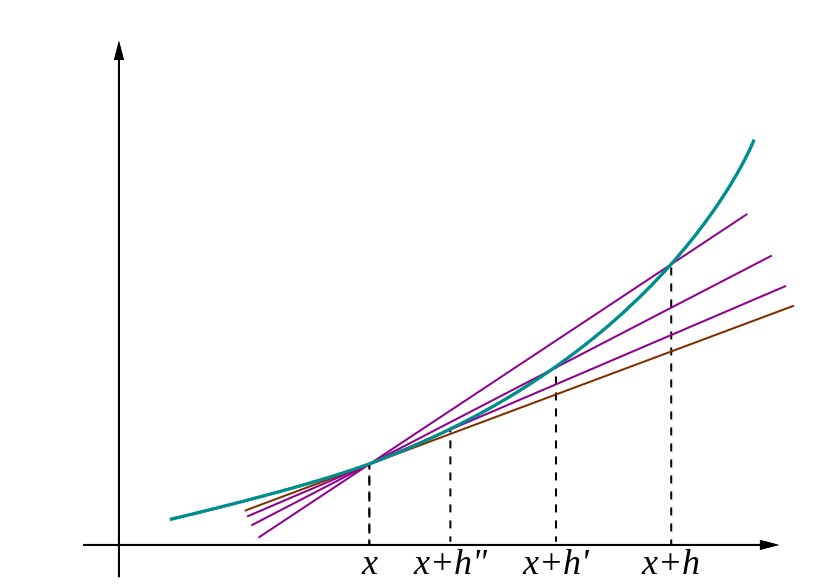

Finding the slope of a curve at a particular point requires limits, which always feel a little incomplete. When taking the limit of a function you’re not talking about a single point (which can’t have a slope), and you’re not even talking about the function at that point; you’re talking about the function near that point as you get closer and closer. At every step there’s always a little farther to go, but “in the limit” there isn’t. Here comes an example.

Say you want to find the slope of $f(x) = x^2$ at $x = 1$. “Slope” is (defined as) rise over run, so the slope between the points $(1, 1)$ and $(1+h, (1+h)^2)$ is

$$\frac{(1+h)^2 - 1}{h}$$

and it just so happens that:

$$\frac{(1+h)^2 - 1}{h} = \frac{1 + 2h + h^2 - 1}{h} = \frac{2h + h^2}{h} = 2 + h$$

Finding the limit as $h \to 0$ is the easiest thing in the world: it’s 2. Exactly 2. Despite the fact that h=0 couldn’t be plugged in directly, there’s no problem at all. For every h≠0 you can draw a line between $(1, 1)$ and $(1+h, (1+h)^2)$ and find the slope (it’s 2+h). We can then let those points get closer together and see what happens to the slope ($h \to 0$). Turns out we get a single, exact, consistent answer. Math folk say “the limit exists” and the function is “differentiable”. Most of the functions you can think of (most of the functions worth thinking of) are differentiable, and when they’re not it’s usually pretty obvious why.
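To see the 2+h pattern with actual numbers, here is a minimal Python sketch (not part of the original argument). The names `f` and `secant_slope` are made up for illustration, and exact fractions are used so that round-off doesn't muddy the picture.

```python
from fractions import Fraction


def f(x):
    """The curve from the example: f(x) = x^2."""
    return x * x


def secant_slope(x, h):
    """Rise over run between (x, f(x)) and (x + h, f(x + h)); h must be nonzero."""
    return (f(x + h) - f(x)) / h


# Exact rational arithmetic, so each slope is exactly 2 + h with no round-off.
x = Fraction(1)
slopes = {}
for k in range(1, 6):
    h = Fraction(1, 10**k)
    slopes[h] = secant_slope(x, h)
    print(f"h = {float(h):.5f}   slope = {float(slopes[h]):.5f}")
```

Every slope printed is a little more than 2, and the extra bit is exactly h. The code never gets to plug in h=0 (that would divide by zero), which is the same reason the limit is needed in the first place.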

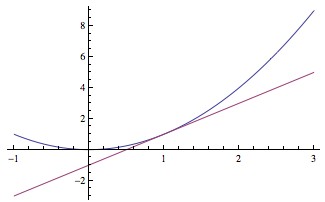

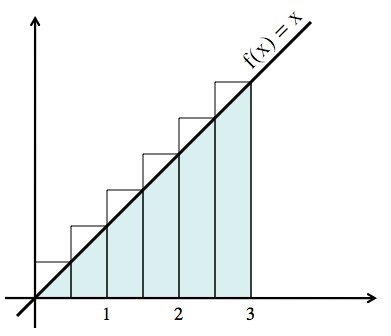

Same sort of thing happens for integrals (the other important tool in calculus). The situation there is a little more subtle, but the result is just as clean. Integrals can be used to find the area “under” a function by adding up a larger and larger number of thinner and thinner rectangles. So, say you want to find the area under f(x)=x between x=0 and x=3. This is day… 30 or so?

As a first try, we’ll use 6 rectangles.

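Each rectangle is 0.5 wide, and 0.5, 1, 1.5, etc. tall. Their combined area is $0.5 \cdot 0.5 + 0.5 \cdot 1 + 0.5 \cdot 1.5 + 0.5 \cdot 2 + 0.5 \cdot 2.5 + 0.5 \cdot 3$ or, in mathspeak, $\sum_{n=1}^{6} 0.5 \cdot \frac{n}{2}$. If you add this up you get 5.25, which is more than 4.5 (the correct answer) because of those “sawteeth”. By using more rectangles these teeth can be made smaller, and the inaccuracy they create can be brought to naught. Here’s how!

If there are N rectangles they’ll each be $\frac{3}{N}$ wide and will be $\frac{3}{N}, \frac{6}{N}, \ldots, \frac{3N}{N}$ tall (just so you can double-check, in the picture N=6). In mathspeak, the total area of these rectangles is

$$\sum_{n=1}^{N} \frac{3}{N} \cdot \frac{3n}{N} = \frac{9}{N^2} \sum_{n=1}^{N} n = \frac{9}{N^2} \cdot \frac{N(N+1)}{2} = \frac{9}{2} + \frac{9}{2N}$$

The fact that $\sum_{n=1}^{N} n = \frac{N(N+1)}{2}$ is just one of those math things. For every finite value of N there’s an error of $\frac{9}{2N}$, but this can be made “arbitrarily small”. No matter how small you want the error to be, you can pick a value of N that makes it even smaller. Now, letting the number of rectangles “go to infinity”,

$$\lim_{N \to \infty} \left( \frac{9}{2} + \frac{9}{2N} \right) = \frac{9}{2}$$

and the correct answer is recovered: 9/2.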
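For anyone who would rather check the rectangles by brute force, here is a small Python sketch of the same sum. The function names `riemann_sum` and `rectangles_needed` are invented for this illustration. It assumes the right-hand-corner rectangles described above, for which the overshoot past 9/2 works out to 9/(2N).

```python
from fractions import Fraction

EXACT_AREA = Fraction(9, 2)  # area under f(x) = x between x = 0 and x = 3


def riemann_sum(N):
    """Total area of N rectangles, each 3/N wide and 3n/N tall (n = 1..N)."""
    width = Fraction(3, N)
    return sum(width * (n * width) for n in range(1, N + 1))


def rectangles_needed(tolerance):
    """Smallest N whose overshoot 9/(2N) is strictly below the tolerance."""
    return int(EXACT_AREA / tolerance) + 1


for N in (6, 60, 600, 6000):
    area = riemann_sum(N)
    print(f"N = {N:5d}   area = {float(area):.5f}   error = {float(area - EXACT_AREA):.5f}")
```

With N=6 this gives 5.25, matching the first try. The `rectangles_needed` helper is the "arbitrarily small" statement turned around: name any positive error you're willing to tolerate and it hands back an N that beats it.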

In a calculus class a little notation is used to clean this up:

$$\int_0^3 x \, dx = \frac{9}{2}$$

Every finite value of N gives an approximation, but that’s the whole point of using limits; taking the limit allows us to answer the question “what’s left when the error drops to zero and the approximation becomes perfect?”. It may seem difficult to “go to infinity” but keep in mind that math is ultimately just a bunch of (extremely useful) symbols on a page, so what’s stopping you?

Mathematicians, being consummate pessimists, have thought up an amazing variety of worst-case scenarios to create “non-integrable” functions where it doesn’t really make sense to create those approximating rectangles. Then, being contrite, they figured out some slick ways to (often) get around those problems. Mathematicians will never run out of stuff to do.

Fortunately, for everybody else (especially physicists) the universe doesn’t seem to use those terrible, terrible… terrible worst-case functions in any of its fundamental laws. Mathematically speaking, all of existence is a surprisingly nice place to live.

Actually, if you look at calculus using infinitesimals, à la Robinson et al., the derivative and the integral (or more precisely, the real numbers we get when we measure them) are approximations; just approximations where the error is infinitely small.

For this to make sense, we must first precisely define what is meant by the word infinitesimal: a number x is an element of the set of infinitesimals iff the absolute value of x is smaller than every positive real number. A corollary of this definition is that the only real number that is an infinitesimal is 0. Next, to get calculus from this, we can extend the real number system to the hyperreals, which include infinitesimals. Here, you can have positive infinitesimals, or “infinitely small” numbers that are still larger than 0, the kind so often talked about by mathematicians in older times.

Now to define the derivative: we start with the hyperreal derivative: f'(x)* = [f (x + h)* – f (x)* ] / h, where h is a nonzero infinitesimal. Now in the example given above, the hyperreal derivative was 2 + h. Then to get the real valued derivative, you just drop the h. Thus you can see that the real derivative is just an approximation of the hyperreal derivative, with the error being h. A similar method can be used for integrals.

Thus, the (real valued) answers gotten in calculus are just an approximation (albeit with infinitesimal error) to the hyperreal values. So in essence, yes, calculus is just an approximation, but one with almost perfect accuracy.

@Josh Evans:

Your argument is both correct and incorrect. There is a reason why hyper-reals and infinitesimals are not used by mathematicians in consensus: you need to break and bend a bunch of arithmetic rules and basic math properties in order for it to work. They do not go well with how mathematics are applied in reality. Infinitesimals cannot exist in reality with the rules as in the consensus. Our concept of perfection and accuracy is based in those rules. Therefore, if limits for real numbers involve perfection, then accuracy with hyper-real numbers implies accuracy “better than perfection,” which does not make absolute sense at all. That is just as absurd as saying bigger than infinity. So, while your argument makes sense, it isn’t applicable to reality.

My dear friends, the curve and infinity will always remain beyond us. When we measure, we break things down into pieces and therefore cut up the infinite curve.

Thanks for the rundown. I’ve been out of the loop with maths since doing my A levels 10 years ago, and I’m now attempting a final year module in cosmology with a lot of rust to grind off. Just went through the derivation of the equations for gravitational contraction via the virial theorem and was like... I remember that integration plays with infinities, but how can we just... state that at small enough values n becomes small enough to not care about when we are dealing with literal subatomic particles? It seemed irresponsible, but now I can see the necessity of the evil. I agree with Josh on this, too: there is no true exact value, we are more concerned with reducing the error to negligibility. It just begs the question, how small is negligible when you’re dealing with relativistic speeds and fundamental particles? I have a gut feeling I’ll trust cosmology even LESS after this module haha!