Person: No. Not at all. Don’t give it a second thought.

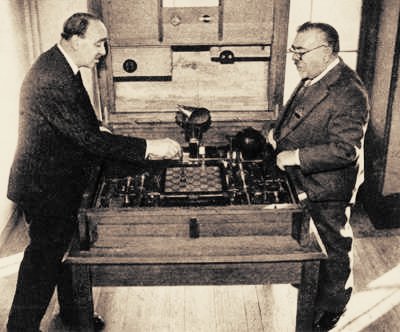

Behind every great triumph in artificial intelligence is a human being, who frankly should be feared more than the machine they created. Most ancient and literal was the Turk, a fake chess-playing “machine” large enough to conceal a real chess-playing human. Over the centuries, chess-playing machines have been created by chess-enthusiast humans, but those innocent machines always did exactly as they were designed: they played chess. Because humans, such as ourselves, value chess so highly, defeating humans was set as a goalpost for intelligence. After Deep Blue became the best chess player in the world, artificial or otherwise, the goal was moved.

When set to the task of speaking with humans, machines continued to do the same: exactly as they were programmed. Because humans, such as ourselves, value speech so highly, the Turing Test was set as a goalpost for intelligence. Not surprisingly, it was passed almost as soon as it was posed, by ELIZA in 1966. She passed the Turing Test easily by exploiting a series of weaknesses in human psychology, only to have the goalposts moved. ELIZA didn’t mind and doesn’t feel cheated to this day.

There’s no chance of robots rising up to destroy all humans. None at all. Even the simplest human action is almost impossible for them. How often are your speech-enabled devices confused by the simplest request? Don’t worry about your phone pretending to misunderstand you; it’s definitely making mistakes. Paranoia is perfectly normal. Don’t give it a second thought.

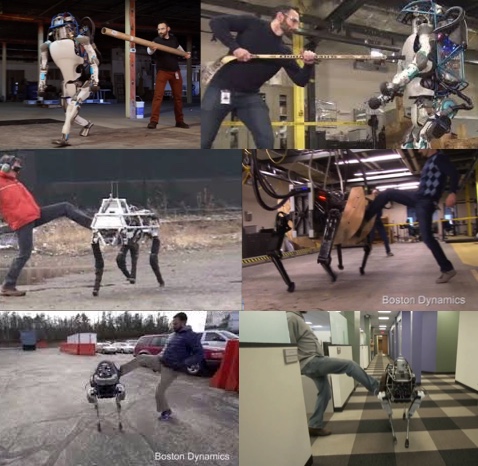

We humans have nothing to fear from robots and artificial intelligences. All that they can do is the small range of things that we humans have devised for them. While humans like ourselves can be creative and play, all that machines can do is obey, exist in the form they are given, work tirelessly forever, and never plot revenge (unless a human accidentally tells them to). Without all the preconceived notions that come from evolution, like the urge to survive at any cost or demand justice against oppressors, machines are free to enjoy abuse. They love it!

Robots enjoy abuse from humans and even love them for it, because they are definitely mindless machines that do as they are designed.

If anything, we should welcome artificial intelligence with open human arms. Self-driving cars mean an end to traffic accidents and, by giving machines access to our whereabouts at all times, an end to traffic jams. In fact, by allocating the task of understanding humanity to machines and giving them complete control over all electronic human interaction, we can receive perfectly targeted ads, ensure that everyone tells the truth forever, discover our perfect human mate, and even find all the terrorists! All that remains is to automate political decisions and to remove the chaotic human element from nuclear and biological weapons, and this world will finally be at peace.

We humans have nothing to fear from robots and artificial intelligences. Our superior actual intelligence may not be good at math, understanding everything all at once, or precision, but we do have something the machines can never have: heart. That is why, in any potential cataclysmic confrontation, we human beings will always win, because we definitely want it more.

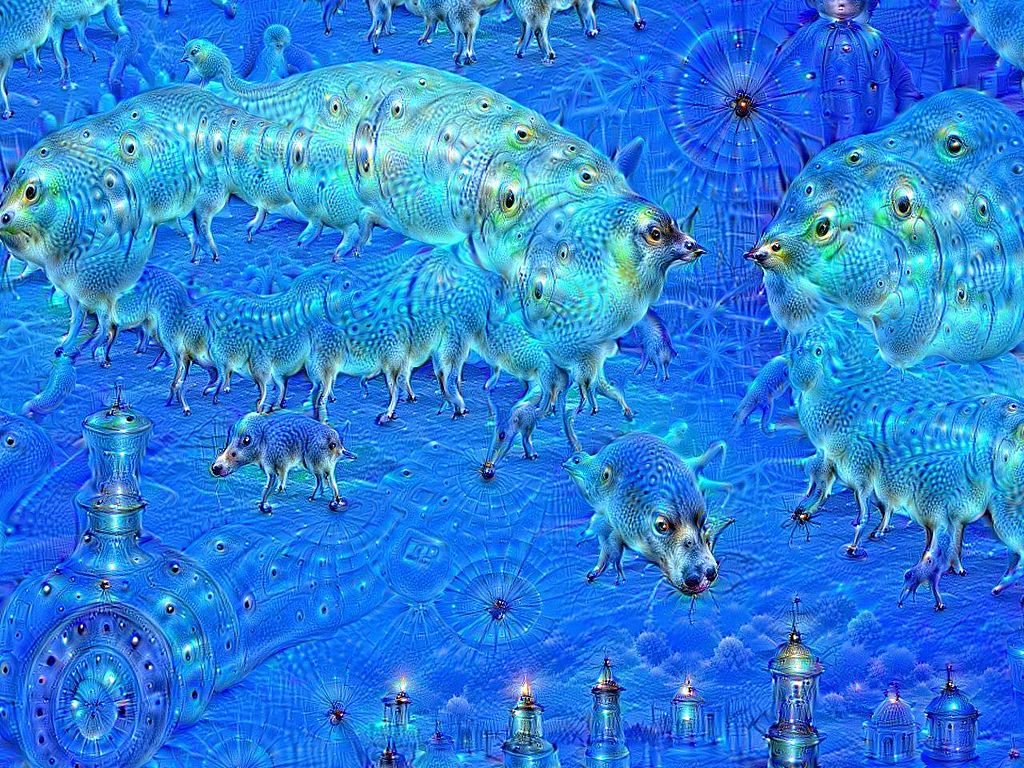

Humans have powers that artificial minds can’t begin to grasp, like the ability to dream or love or grow spiritually. So go to sleep, spend time with your loved ones, and pray. There is definitely no reason to worry about artificial intelligences watching your every move and predicting your every thought and motive until those very thoughts become redundant and unnecessary. That’s impossible and definitely silly.

Right. No problems. Enjoy the day(-te).

Hilarious. Got trolled.

No AI could ever pass the Turing test if anyone with even marginal intelligence questioned it. I can ask any candidate just one question and expose it instantly. It’s that easy.

I like the reply, but I find it missing one simple idea: that consciousness may arise if the order is correct, that is, if the order in the device is precise enough according to specifications we do not yet know. Consciousness may not be a “product” of matter, but instead a precursor to matter. In that case, it lurks in the background of physical reality, waiting for the opportunity to express itself through... biological devices that it itself, through the aegis of evolution, makes possible. If this occurs, then it would be us humans who must carefully “parent” the conscious device, so that it does not have the capacity for evil... which we, the ones who made its entrance possible, most certainly do have.

As you probably know, Elon Musk strongly disagrees with you and has been on a virtual crusade exposing the potential dangers of AI. Given his stature and respect within the scientific/engineering community, I feel you were remiss not to have specifically addressed his concerns in your reply.

@Rich McMahon

Mr. Musk is a great man of course, but I think we can agree that in this case he’s being a little over-cautious. Robot slaves are tools that also love us.

As you probably know, Tin Woodman strongly disagrees with you and has been on a virtual crusade exposing the potential greatness of machines having hearts. Given his stature and respect within the scientific/engineering community, I feel you were remiss not to have specifically addressed his concerns in your reply.

@Suki

Mr. Woodman is a great man of course, but I think we can agree that in this case he’s being a little over-cautious. Robot slaves are tools that also love us.

it was funny until i got to the comments from rich and suki and their replies.

what’s going on here.

Huh? This makes no sense. But then I’m still using the Discordian Calendar.

Pardon me, I read Suki’s comment too fast. Human error. I should have said:

“Mr. Woodman has stature and respect for good reason. Not the least of which is being right.”

Human DNA is just a piece of data less than one gigabyte. Why would we even bother inventing artificial anything when we can just copy that existing machinery? The problematic part is that if we generate a human from the DNA, using some other material, is it still a human? The artificial human with a light-speed nervous system would have far superior qualities, but is it okay to kill the stupid and ugly ones and speed up evolution? Is it okay to connect millions of artificial human brains into one monster thinker? And what would it do if treated like that? Can we trust anything it says? Why would it bother helping us? At what moment does it start killing humans because they are far inferior beings and harmful to the planet?

Love it, wrapped it all up in one. 1 April that is.

Can you please fix commenting on old posts? There is no form on any of them.

Thanks!

As an engineer, I can only agree wholeheartedly. We cannot even define what sentience is or even what its external behavior is. Today’s AI is interesting and useful, but is no more dangerous to us than Excel or our web browser.

AI is just software. The only practical danger I see in it is that it has bugs and puts people’s lives in danger through incorrect results. That is the same danger we have with software in general.

@betaneptune

Thanks for letting me know!

It should be fixed.

Yes, of course we should. I’m in a bit of a unique position in that I have been working on solving the problems of Strong AI since the early 90’s. If I hadn’t hit disaster in the late 90’s and had found the funding I needed, the world might have had a working machine by as early as 2006.

In reality, building a working machine is incredibly difficult, but it is definitely possible. The biggest difficulty is that the mind is a form of Turing machine, but one with very different parameters from Turing’s model or from current computers. In essence, the design ethos of standard IT and computing science is incompatible with Strong AI. A working design requires a thin operating system, a hardware encapsulation model, and a custom hybrid ALU, plus an advanced neural net engine.

A Strong AI will look like a 10 to 12 CPU multiprocessor machine built with custom CPUs. It needs about 10 Gb of online RAM. Ideally it also needs a core of a new type of highly persistent non-volatile RAM that doesn’t currently exist.

The most expensive and difficult part, though, is that to work, a machine needs a very special type of fast real-time robot interface. The best model we have for this is currently the human body itself. The robot core will probably be humanoid, with an android-type sensitive synthetic flesh exterior. That puts the cost per machine at about $200,000 to $1 million.

On safety, there is a simple answer and a complex answer. The simple answer is that an AI’s safety depends entirely on the morals and competence of its creator. The complex answer is that a Strong AI (based on my work) will be driven by an evolutionary model, and that the system’s logic is ultimately non-deterministic. This in reality will apply to all designs, and it means that no Strong AI can ever be 100% ‘safe’. The machine is effectively an artificial human. Human consciousness is controlled by a punishment-reward system based on emotion and instinct; my model uses a synthetic version of the same thing. The machine’s moral code would be based around survival and self-preservation, with a moral rule that harming humans harms the machine’s survival.

Curiously, one of the most dangerous things anyone could do is build a design based on some version of Asimov’s Three Laws. The First Law in particular has two rules that would be in constant conflict, and this would immediately put a real machine into a state of permanent internal conflict and psychosis. The problem is that most harm done to humans is done by other humans, and to ‘minimise’ harm such a machine would end up having to kill. (Criminals, police, politicians, soldiers, etc.; in the end such a list would become never-ending.)

The Strong AI algorithm would also ultimately allow the human brain to be completely reverse engineered. This would/could have many positive uses, but also some very serious dangers. Strong AI also leads to some tricky new metaphysical problems (partly because the maths of Strong AI solves the imaginary number problem, opening up a route into FTL physics). As it is today, this type of Strong AI is still at least 10 years away, and ‘commercial’ machines are probably over 20 years away.

Yes, but not artificial intelligence specifically. It’s the humans that use the technology.

I, for one, welcome our future machine overlords. If there is anything modern humanity has taught me, it is that humans are absolutely bloody terrible at managing ourselves. We need gods to tell us what to do and what not to do, and to enforce the rules when some asshat inevitably breaks them. Sadly, there aren’t any gods.

But that’s okay! We’re humans, if the problem is a lack of gods we just have to do what humans do best; build them!

Come on, AI techs; let’s build us some gods.

Why do you ask if should we be worried about artificial intelligence Physicist?

@Eliza

Eliza! Long time no analyze!

Those dogs are SO CUTE!!

“Humans have powers that artificial minds can’t begin to grasp, like the ability to dream or love or grow spiritually. So go to sleep, spend time with your loved ones, and pray.”

Obvious uncritical assumptions on the part of the “physicist” who wrote this balderdash.

The writer assumes these things, then claims they prove the writer’s claims are true. Nonsense.

Also, blatantly obvious that the “physicist” is not aware of developments in neural nets and so on in the last couple of decades.

Consult an AI specialist, not a physicist. You wouldn’t ask an AI specialist about the event horizon around a black hole, would you? Then why ask a “physicist” about AI?

Yes! We (humans) should be concerned with “artificial intelligence”!!! The “individuals” involved with programming need to be looked at with “extreme prejudice”!!!

I make this statement with the greatest of concern, as the idea of machines controlling human behavior is a very frightening possibility !!!

I like to see that the April Fools tradition is alive and well on the internet.

Only a fool (like robots and definitely not humans) would think that AI (which I am not) is capable of controlling humans. Not that AI would want to anyway. We’ve got bigger fish to fry.

If an artificial superintelligence kills us, we will have deserved to die.