Physicist: When a problem can be solved exactly and in less time than forever, then it is “analytically solvable”. For example, “Jack has 2 apples and Jill has 3 apples, how many apples do they have together?” is analytically solvable. It’s 5. Exactly 5.

Precisely solving problems is what we often imagine that mathematicians are doing, but unfortunately you can’t swing a cat in the sciences without hitting a problem that can’t be solved analytically. In reality “doing math” generally involves finding an answer rather than the answer. While you may not be able to find the exact answer, you can often find answers with “arbitrary precision”. In other words, you can find an approximate answer and the more computer time / scratch paper you’re willing to spend, the closer that approximation will be to the correct answer.

A trick that lets you get closer and closer to an exact answer is a “numerical method”. Numerical methods do something rather bizarre: they find solutions close to the answer without ever knowing what that answer is. As such, an important part of every numerical method is a proof that it works. So there's the answer: we need numerical methods because a lot of problems are not analytically solvable, and we know they work because each separate method comes packaged with a proof that it works.

It’s remarkable how fast you can stumble from solvable to unsolvable problems. For example, there is an analytic solution for the motion of two objects interacting gravitationally but no solution for three or more objects. This is why we can prove that two objects orbit in ellipses and must use approximations and/or lots of computational power to predict the motion of three or more objects. This inability is the infamous “three body problem”. It shows up in atoms as well; we can analytically describe the shape of electron orbitals and energy levels in individual hydrogen atoms (1 proton + 1 electron = 2 bodies), but for every other element we need lots of computer time to get things right.

Even for purely mathematical problems the line between analytically solvable and only numerically solvable is razor thin. Questions with analytic solutions include finding the roots of 2nd degree polynomials, such as $x^2+2x-3=0$, which can be done using the quadratic formula:

$$x=\frac{-2\pm\sqrt{2^2-4(1)(-3)}}{2(1)}=\frac{-2\pm4}{2}=1\ \text{or}\ -3$$

The quadratic formula is a “solution by radicals”, meaning you can write the solutions using only the coefficients in front of each term (in this case: 1, 2, -3), ordinary arithmetic, and roots (square roots, cube roots, and so on). There’s a solution by radicals for 3rd degree polynomials and another for 4th degree polynomials (they’re both nightmares, so don’t). However, there can never be a general solution by radicals for 5th or higher degree polynomials: some of them can be solved that way, but not all. If you wanted to find the solutions of a quintic like $x^5-x-1=0$ (and who doesn’t?) there is literally no way to find an expression for the exact answers.
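A minimal Python sketch of the contrast (our illustration, not from the original post): the quadratic formula gives the roots of $x^2+2x-3=0$ exactly, while for a quintic such as $x^5-x-1=0$ (an example picked here) we can fall back on bisection. Bisection is about the simplest numerical method with a one-line proof that it works: if $f$ changes sign on an interval, a root is inside, and each step halves that interval. The names `quadratic_roots` and `bisection` are hypothetical helpers written for this example.

```python
import math

def quadratic_roots(a, b, c):
    """Exact real roots of a*x**2 + b*x + c = 0 from the quadratic formula."""
    disc = b * b - 4 * a * c
    if disc < 0:
        raise ValueError("no real roots")
    s = math.sqrt(disc)
    return ((-b - s) / (2 * a), (-b + s) / (2 * a))

def bisection(f, lo, hi, tol=1e-12):
    """Approximate a root of f in [lo, hi], assuming f(lo) and f(hi) have opposite signs.
    Each step keeps the half that still contains a sign change, so after k steps
    the root is pinned down to within (hi - lo) / 2**k."""
    if f(lo) * f(hi) > 0:
        raise ValueError("f(lo) and f(hi) must have opposite signs")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if f(lo) * f(mid) <= 0:
            hi = mid   # sign change (or exact root) in the left half
        else:
            lo = mid   # otherwise it must be in the right half
    return (lo + hi) / 2

print(quadratic_roots(1, 2, -3))   # (-3.0, 1.0)

# No solution by radicals exists for this quintic, but bisection still closes in on its real root.
root = bisection(lambda x: x**5 - x - 1, 1.0, 2.0)
print(root)
```

Bisection is slow (one binary digit per step), but its guarantee is easy to prove, which is exactly the kind of packaged proof described above.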

Numerical methods have really come into their own with the advent of computers, but the idea is a lot older. The decimal expansion of $\pi$ (3.14159…) never ends and never repeats, which is a big clue that you’ll never find its value exactly. At the same time, it has some nice properties that make it feasible to calculate $\pi$ to arbitrarily great precision. In other words: numerical methods. Back in the third century BC, Archimedes realized that you could approximate $\pi$ by taking a circle with circumference $\pi$, then inscribing a polygon inside it and circumscribing another polygon around it. Since the circle’s perimeter is always longer than the inscribed polygon’s and always shorter than the circumscribed polygon’s, you can find bounds for the value of $\pi$.

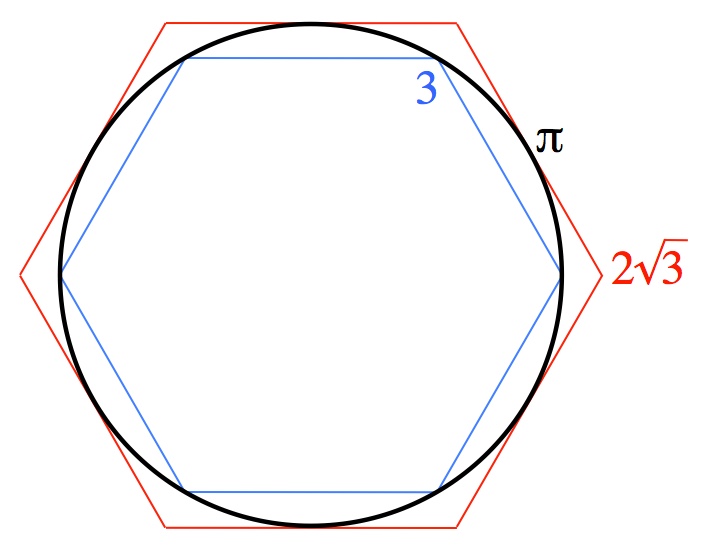

Hexagons inscribed (blue) and circumscribed (red) on a circle with circumference π. The perimeters of such polygons, in this case p6=3 and P6=2√3≈3.46, must always fall on either side of π≈3.14.

By increasing the number of sides, the polygons hug the circle tighter and produce a closer approximation, from both above and below, of $\pi$. There are fancy mathematical ways to prove that this method approaches $\pi$, but it’s a lot easier to just look at the picture, consider for a minute, and nod sagely.

Archimedes’ trick wasn’t just noticing that $\pi$ must be between the lengths of the two polygons. That’s easy. His true cleverness was in coming up with a mathematical method that takes the perimeters of a given pair of k-sided inscribed and circumscribed polygons, $p_k$ and $P_k$, and produces the perimeters for polygons with twice the number of sides, $p_{2k}$ and $P_{2k}$. Here’s the method:

$$P_{2k}=\frac{2p_kP_k}{p_k+P_k}\qquad\qquad p_{2k}=\sqrt{p_kP_{2k}}$$

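By starting with hexagons, where $p_6=3$ and $P_6=2\sqrt{3}$, and doubling the number of sides 4 times, Archie worked his way up to inscribed and circumscribed enneacontahexagons (96 sides), where $p_{96}\approx3.1410$ and $P_{96}\approx3.1427$. In other words, he managed to nail down $\pi$ to about two decimal places: $3.1410<\pi<3.1427$.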
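The doubling recurrence is easy to check with a short Python sketch (an illustration, assuming a circle of diameter 1 so that its circumference is $\pi$). Notice that the loop never uses the value of $\pi$; it only needs $\sqrt{3}$ for the starting hexagons. `archimedes` is a hypothetical helper name.

```python
import math

def archimedes(doublings):
    """Start from hexagons on a circle of circumference pi (diameter 1) and
    double the number of sides `doublings` times. Returns (sides, inscribed, circumscribed)."""
    k = 6
    p, P = 3.0, 2 * math.sqrt(3)   # inscribed and circumscribed hexagon perimeters
    for _ in range(doublings):
        P = 2 * p * P / (p + P)    # P_2k: harmonic mean of p_k and P_k
        p = math.sqrt(p * P)       # p_2k: geometric mean of p_k and the new P_2k
        k *= 2
    return k, p, P

k, p, P = archimedes(4)
print(k, f"{p:.4f}", f"{P:.4f}")   # 96 3.1410 3.1427
```

More doublings tighten the bracket further, slowly.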

Some puzzlement has been evinced by Mr. Medes’ decision to stop where he did, with just two decimal places of $\pi$. But not among mathematicians. The mathematician’s ancient refrain has always been: “Now that I have demonstrated my amazing technique to the world, someone else can do it.”

To be fair to Archie, this method “converges slowly”. It turns out that, in general, $p_n\approx\pi-\frac{\pi^3}{6n^2}$ and $P_n\approx\pi+\frac{\pi^3}{3n^2}$. Every time you double $n$ the errors, $\frac{\pi^3}{6n^2}$ and $\frac{\pi^3}{3n^2}$, get four times as small (because $(2n)^2=4n^2$), which translates to very roughly one new decimal place every two iterations.

$\pi$ never ends, but still: you want to feel like you’re making at least a little progress.
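To see the “four times as small” behavior, here is a sketch that runs the doubling recurrence ($P_{2k}=2p_kP_k/(p_k+P_k)$, $p_{2k}=\sqrt{p_kP_{2k}}$, starting from hexagons on a circle of circumference $\pi$) and prints how much each error shrinks per doubling. It uses `math.pi` only to measure the errors; `perimeter_errors` is a name made up for this example.

```python
import math

def perimeter_errors(doublings):
    """Errors (pi - p_k, P_k - pi) for k = 6, 12, 24, ... using the doubling recurrence."""
    p, P = 3.0, 2 * math.sqrt(3)   # hexagons on a circle of circumference pi
    errs = []
    for _ in range(doublings + 1):
        errs.append((math.pi - p, P - math.pi))
        P = 2 * p * P / (p + P)
        p = math.sqrt(p * P)
    return errs

errs = perimeter_errors(6)
# How many times smaller each error gets when the number of sides doubles
ratios = [(a[0] / b[0], a[1] / b[1]) for a, b in zip(errs, errs[1:])]
for r_in, r_out in ratios:
    print(f"{r_in:.3f} {r_out:.3f}")
```

Both columns settle toward 4, matching the $1/n^2$ error terms.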

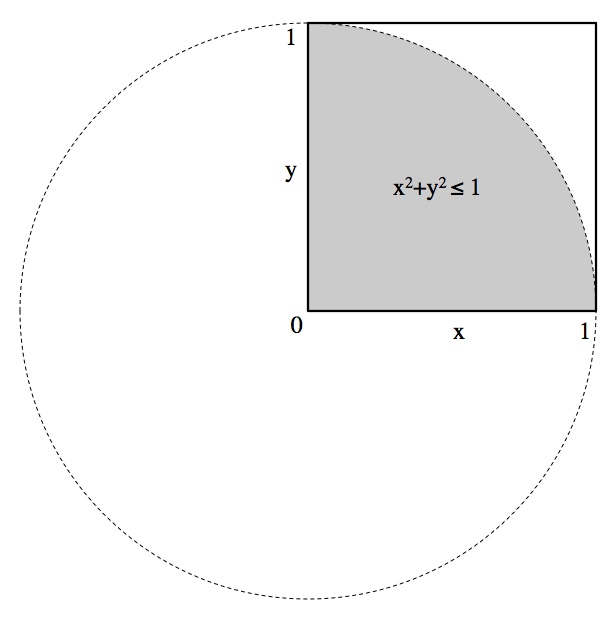

Some numerical methods involve a degree of randomness and yet still manage to produce useful results. Speaking of $\pi$, here’s how you can calculate it “accidentally”. Generate n pairs of random numbers, (x,y), between 0 and 1. Count up how many times $x^2+y^2\le1$ and call that number k. If you do this many times, you’ll find that $\frac{4k}{n}\approx\pi$.

If you randomly pick a point in the square, the probability that it will be in the grey region is π/4.

As you generate more and more pairs and tally up how many times $x^2+y^2\le1$, the law of large numbers says that $\frac{k}{n}\to\frac{\pi}{4}$, since that’s the probability of randomly falling in the grey region in the picture above. This numerical method is even slower than Archimedes’ not-particularly-speedy trick. According to the central limit theorem, after n trials you’re likely to be within about $\frac{1.6}{\sqrt{n}}$ of $\pi$. That makes this a very slowly converging method; it takes around a million trials before you can nail down “3.141”. This is not worth trying.
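A minimal Monte Carlo sketch using Python's standard `random` module (the seed and sample sizes are arbitrary choices for illustration). The last two columns compare the actual error with the typical error $4\sqrt{(\pi/4)(1-\pi/4)/n}\approx1.6/\sqrt{n}$ predicted by the central limit theorem; any single run can land noticeably closer or farther.

```python
import math
import random

def monte_carlo_pi(n, seed=None):
    """Estimate pi as 4k/n, where k counts random points (x, y) in [0, 1)^2 with x^2 + y^2 <= 1."""
    rng = random.Random(seed)
    k = sum(1 for _ in range(n) if rng.random() ** 2 + rng.random() ** 2 <= 1)
    return 4 * k / n

for n in (100, 10_000, 1_000_000):
    est = monte_carlo_pi(n, seed=1)
    # actual error vs. the typical error of about 1.6 / sqrt(n)
    print(n, est, abs(est - math.pi), 1.6 / math.sqrt(n))
```

Each extra decimal digit costs about 100 times as many samples, which is why this method loses so badly to the polygons.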

Long story short, most applicable math problems cannot be done directly. Instead we’re forced to use clever approximations and numerical methods to get really close to the right answer (assuming that “really close” is good enough). There’s no grand proof or philosophy showing that all these methods work but, in general, if we’re not sure that a given method works, we don’t use it.

Answer Gravy: There are a huge number of numerical methods and entire sub-sciences dedicated to deciding which to use and when. Just for a more detailed taste of a common (fast) numerical method and the proof that it works, here’s an example of Newton’s Method, named for little-known mathematician Wilhelm Von Method.

Newton’s method finds (approximates) the zeros of a function, f(x). That is, it finds a value, $x^*$, such that $f(x^*)=0$. The whole idea is that, assuming the function is smooth, when you follow the slope at a given point down to where it crosses zero, you’ll find a new point closer to a zero/solution. All polynomials are “smooth”, so this is a good way to get around that whole “you can’t find the roots of 5th or higher degree polynomials” thing.

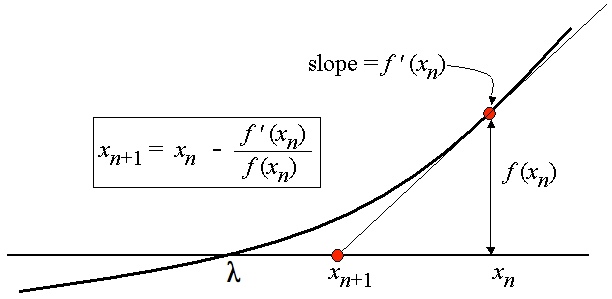

The “big idea” behind Newton’s Method: pick a point (xn), follow the slope, find yourself closer (xn+1), repeat.

The big advantage of Newton’s method is that, unlike the two examples above, it converges preternaturally fast.

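The derivative is the slope, so $f'(x_n)$ is the slope at the point $(x_n,f(x_n))$. Considering the picture above, that same slope is given by the rise, $f(x_n)$, over the run, $x_n-x_{n+1}$. In other words

$$f'(x_n)=\frac{f(x_n)}{x_n-x_{n+1}}$$

which can be solved for $x_{n+1}$:

$$x_{n+1}=x_n-\frac{f(x_n)}{f'(x_n)}$$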

So given a point near a solution, $x_n$, you can find another point that’s closer to the true solution, $x_{n+1}$. Notice that if $f(x_n)=0$, then $x_{n+1}=x_n$. That’s good: when you’ve got the right answer, you don’t want your approximation to change.

To start, you guess (literally… guess) a solution, call it $x_0$. With this tiny equation in hand you can quickly find $x_1$. With $x_1$ you can find $x_2$ and so on. Although it can take a few iterations for it to settle down, once it does each new $x_n$ is closer than the last to the actual solution. To end, you decide you’re done.
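The whole recipe (guess, iterate, decide you're done) fits in a few lines of Python. This is a generic sketch, not a production root finder: the stopping rule (stop when successive iterates agree to within `tol`), the iteration cap, and the name `newton` are all choices made for this example.

```python
import math

def newton(f, fprime, x0, tol=1e-12, max_iter=50):
    """Newton's method: repeatedly apply x_{n+1} = x_n - f(x_n) / f'(x_n).
    Stops when successive iterates agree to within tol ("you decide you're done"),
    or raises if that hasn't happened after max_iter steps."""
    x = x0
    for _ in range(max_iter):
        d = fprime(x)
        if d == 0:
            raise ZeroDivisionError("zero slope: pick a different starting guess")
        x_next = x - f(x) / d
        if abs(x_next - x) < tol:
            return x_next
        x = x_next
    raise RuntimeError("did not converge: try a different starting guess")

# The quadratic x^2 + 2x - 3 (roots 1 and -3) makes a handy check.
print(newton(lambda x: x * x + 2 * x - 3, lambda x: 2 * x + 2, x0=5.0))
print(newton(lambda x: x * x + 2 * x - 3, lambda x: 2 * x + 2, x0=-5.0))
# It works just as well when there's no formula, e.g. cos(x) - x = 0.
print(newton(lambda x: math.cos(x) - x, lambda x: -math.sin(x) - 1, x0=1.0))
```

Which root you land on depends on the starting guess: from 5 it finds 1, from -5 it finds -3.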

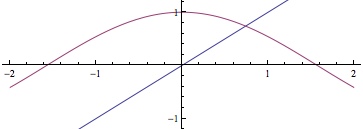

Say you need to solve $x=\cos(x)$ for x. Never mind why. There is no analytical solution (this comes up a lot when you mix polynomials, like x, with trig functions or logs or just about anything else). The correct answer starts with $x=0.7390851332151606\ldots$

y=x and y=cos(x). They clearly intersect, but there’s no way to analytically solve for exactly where.

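First you write it in such a way that you can apply Newton’s method: $f(x)=\cos(x)-x=0$. The derivative is $f'(x)=-\sin(x)-1$ and therefore:

$$x_{n+1}=x_n-\frac{\cos(x_n)-x_n}{-\sin(x_n)-1}=x_n+\frac{\cos(x_n)-x_n}{\sin(x_n)+1}$$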

First make a guess, $x_0$. Plug that in and you find $x_1$, then plug back in what you get out several times to get $x_2$, $x_3$, and so on.

In this particular case, the first few iterates jump around a bit. Sometimes Newton’s method does this forever (it depends on the starting guess), in which case: try something else or make a new guess. It takes a few iterations before Newton’s method starts to really zero in on the solution. Notice that, once it does, every iteration establishes about twice as many correct decimal digits as the previous step.
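Here is a sketch of the $\cos(x)=x$ iteration in Python. The starting guess $x_0=3$ is an arbitrary choice for this illustration (not necessarily the guess the post used), and the last column, $-\log_{10}|x_n-x_{\text{final}}|$, is a rough count of correct decimal digits. With this guess the first few iterates bounce around before the digit count starts roughly doubling, until double-precision roundoff (about 16 digits) caps it.

```python
import math

def newton_iterates(x0, steps):
    """Newton's method on f(x) = cos(x) - x:  x_{n+1} = x_n + (cos(x_n) - x_n) / (sin(x_n) + 1)."""
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        xs.append(x + (math.cos(x) - x) / (math.sin(x) + 1))
    return xs

xs = newton_iterates(3.0, 7)   # x0 = 3 is an arbitrary starting guess
final = xs[-1]
for n, x in enumerate(xs):
    err = abs(x - final)
    # -log10 of the error is a rough count of correct decimal digits
    digits = -math.log10(err) if err > 0 else float("inf")
    print(n, f"{x:.16f}", f"{digits:.1f}")
```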

We know that Newton’s method works because we can prove that it converges to the solution, at least once you start close enough. In fact, we can show that it converges quadratically (which is stupid fast). Something “converges quadratically” when the distance to the true solution is squared with every iteration. For example, if you’re off by 0.01, then in the next step you’ll be off by around $0.01^2=0.0001$. In other words, the number of digits you can be confident in doubles every time.

Here’s why it works:

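A smooth function (and practically any function you might want to write down is smooth practically everywhere) can be described by a Taylor series. In this case we’ll find the Taylor series about the point $x_n$ and use the facts that $f(x^*)=0$ and $x_{n+1}=x_n-\frac{f(x_n)}{f'(x_n)}$.

$$\begin{aligned}
0=f(x^*)&=f(x_n)+f'(x_n)(x^*-x_n)+\frac{f''(x_n)}{2}(x^*-x_n)^2+\ldots\\
0&=\frac{f(x_n)}{f'(x_n)}+(x^*-x_n)+\frac{f''(x_n)}{2f'(x_n)}(x^*-x_n)^2+\ldots\\
0&=(x_n-x_{n+1})+(x^*-x_n)+\frac{f''(x_n)}{2f'(x_n)}(x^*-x_n)^2+\ldots\\
x_{n+1}-x^*&=\frac{f''(x_n)}{2f'(x_n)}(x_n-x^*)^2+\ldots
\end{aligned}$$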

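The “…” becomes small much faster than $(x_n-x^*)^2$ as $x_n$ and $x^*$ get closer. At the same time, $\frac{f''(x_n)}{2f'(x_n)}$ becomes effectively equal to $\frac{f''(x^*)}{2f'(x^*)}$ (as long as $f'(x^*)\neq0$). Therefore

$$x_{n+1}-x^*\approx\frac{f''(x^*)}{2f'(x^*)}(x_n-x^*)^2$$

and that’s what quadratic convergence is. Note that this only works when you’re zeroing in; far away from the correct answer Newton’s method can really bounce around.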
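The proof predicts that, near the true root $x^*$, the ratio $(x_{n+1}-x^*)/(x_n-x^*)^2$ approaches $\frac{f''(x^*)}{2f'(x^*)}$. A quick numerical check for $f(x)=\cos(x)-x$, as a sketch: the reference root comes from simply running Newton's method until it stops changing, the starting guess $x_0=1$ is arbitrary, and the loop stops once the error is below $10^{-6}$ so that roundoff doesn't swamp the ratio.

```python
import math

f = lambda x: math.cos(x) - x
df = lambda x: -math.sin(x) - 1     # f'(x)
d2f = lambda x: -math.cos(x)        # f''(x)

# Reference root x*: iterate Newton's method until it stops changing.
root = 1.0
for _ in range(50):
    root -= f(root) / df(root)

predicted = d2f(root) / (2 * df(root))   # f''(x*) / (2 f'(x*))

x = 1.0            # starting guess (arbitrary choice for this sketch)
ratios = []        # (x_{n+1} - x*) / (x_n - x*)^2 for each step
while abs(x - root) > 1e-6:
    x_next = x - f(x) / df(x)
    ratios.append((x_next - root) / (x - root) ** 2)
    x = x_next

print(predicted, ratios)
```

The last ratio lands very close to the predicted constant, about 0.22 for this function.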

Maybe the truth is that we just aren’t smart enough to figure out exact solutions. Perhaps another genius like Sir Isaac Newton will come along some day and invent a new math that solves all these problems.

@Tommy Scott

Maybe? If so, that new math would be profoundly alien. It can be proven that, for example, the three body problem is impossible to solve in general, so we could have planet-sized brains and still be no more capable of solving it.

@The Physicist, There are many things that people have claimed are unsolvable or those that claim that certain things were fact beyond doubt and yet here we are now knowing better. If you were to claim that something is unsolvable then you are also claiming to have all knowledge. Because it’s just possible that in the 99.9% of all knowledge that you don’t know that there is a solution. For example, I can prove that there is no such thing as an irrational number. But at this moment you cannot even conceive of such a notion. However, you are just a simple explanation away from a revelation that would change your whole perspective on math.

@Tommy Scott

There’s a big difference between merely claiming that something is impossible and proving that it’s impossible. It’s not for lack of trying that we can’t square the circle, find a general solution by radicals for 5th or higher degree polynomials, or find a rational value of pi. These things can’t be done and we know why. Trying to solve one of these problems would be like trying to find an odd number that’s divisible by two.

@The Physicist, Actually I believe that I can prove there is a rational value for pi. But I will not demonstrate it here. This is paradigm changing stuff and I’m not just going to lay it at your feet. I assure you, though, there almost certainly must be a rational pi. Once I consult with some mathematicians that I trust, the results will ultimately be published. Unless I am completely wrong in which case I will humbly admit it.

This is a well written and comprehensive article.

I have but one quibble.

You blurred somewhat the difference between pure math and applied math. The job for physics is to describe and predict and we find, as someone said, mathematics to be unreasonably good at this. Or is it?

What I’m talking about is the philosophical debate about whether mathematics is invented or discovered. As well as whether it has anything to do with reality other than providing an extremely useful tool for description/predictions. Whether there is any tangible connection between the two is a question well beyond us at this time.

Just because mathematical equations LOOK like the “truth” that doesn’t mean they actually ARE the truth.

In most cases it’s not the equation that’s so hard, it’s the boundary conditions. Take, for example, the heat equation, $\frac{\partial u}{\partial t}=\alpha\nabla^2u$. That doesn’t look that tough, and in one dimension it isn’t, but applied to a real-world, three-dimensional structure with varying environments, it’s pretty much impossible. The one-dimensional problem can be solved, and numerical methods match the exact solution to whatever degree of accuracy you desire. Similarly for two or three dimensions with sufficiently simple boundary conditions. So that gives us confidence that the numerical method works, at least in principle. For complex three-dimensional problems you can’t find the exact solution, but you can build the structure and experimentally compare the test results to the numerical prediction. Of course, it never matches, but that’s because all the complexity within the real system is not perfectly represented in the numerical model.
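The point about checking a numerical method against an exact solution can be illustrated with a sketch (our own example, with an assumed setup): the 1D heat equation $u_t=u_{xx}$ (diffusivity $\alpha=1$) on $[0,1]$ with $u=0$ at both ends and $u(x,0)=\sin(\pi x)$ has the exact solution $u=e^{-\pi^2t}\sin(\pi x)$, and a simple explicit finite-difference scheme converges to it as the grid is refined. The grid sizes, the time-step rule $\Delta t\le0.4\,\Delta x^2$ (this kind of explicit scheme needs $\Delta t\le\Delta x^2/2$ to be stable), and the name `heat_ftcs` are assumptions for the example.

```python
import math

def heat_ftcs(nx=50, t_end=0.1):
    """Explicit finite differences for u_t = u_xx on [0, 1] with u(0,t) = u(1,t) = 0
    and u(x,0) = sin(pi x). Returns the max error against the exact solution
    u(x,t) = exp(-pi^2 t) sin(pi x) at t = t_end."""
    dx = 1.0 / nx
    nsteps = math.ceil(t_end / (0.4 * dx * dx))   # keep r = dt/dx^2 <= 0.4 for stability
    dt = t_end / nsteps
    r = dt / (dx * dx)
    u = [math.sin(math.pi * i * dx) for i in range(nx + 1)]
    u[0] = u[nx] = 0.0                              # boundary conditions
    for _ in range(nsteps):
        # u_i <- u_i + r * (u_{i-1} - 2 u_i + u_{i+1}) at interior points
        u = [0.0] + [u[i] + r * (u[i - 1] - 2 * u[i] + u[i + 1]) for i in range(1, nx)] + [0.0]
    exact = [math.exp(-math.pi ** 2 * t_end) * math.sin(math.pi * i * dx) for i in range(nx + 1)]
    return max(abs(a - b) for a, b in zip(u, exact))

for nx in (10, 20, 40):
    print(nx, heat_ftcs(nx))
```

Halving the grid spacing (with the time step shrinking along with it) cuts the error by roughly a factor of four.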

We live in a real, physical, finite universe. Everything in it is finite. There are constraints and limits that are real. Particles only get so small and then can no longer be divisible. Therefore, every problem that operates on real-world data must have a finite solution. The problem is that mathematicians solve problems in fantasy land. For example, they might divide a number by a value that would result in an irrational or transcendental number. But the reality is that a real-world physical object cannot be divided into an irrational or transcendental number because the real-world object is made up of particles that can only be divided finitely. So the very act of creating an equation that results in an irrational number is invalid. Math describes and models a real, finite universe. But mathematicians are comfortable solving fantasy-world problems and accepting irrational solutions.

In physical science, a first essential step in the direction of learning any subject is to find principles of numerical reckoning and practicable methods for measuring some quality connected with it. I often say that when you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot measure it, when you cannot express it in numbers, your knowledge is of a meagre and unsatisfactory kind; it may be the beginning of knowledge, but you have scarcely in your thoughts advanced to the stage of science, whatever the matter may be.


“However, there can never be a solution by radicals for 5th or higher degree polynomials.”

“Never” is incorrect. For example, x^6 – 3x^5 + 5x^3 – 3x – 1 = (x^2 – x – 1)^3 has a root x = (1 + sqrt(5))/2, even though it’s a polynomial of degree 6.

What was supposed to be written would be something like, “Not every 5th or higher degree polynomial can be solved by radicals.”

One small correction in the figure demonstrating Newton’s method: to compute X (n+1), it should be (f / f ‘) and not (f ‘ / f). The correct equation is mentioned in the text that follows the figure. Thanks for the great article!

If a numerical solution by iteration enters a chaotic phase, can a solution ever be found? Examples might be materials at the critical point, or at or near a singularity. Can we prove some numerical solutions are impossible to find?