Physicist: First, don’t gamble unless you can be sure you won’t get caught cheating or you enjoy losing money.

Games of chance come in two flavors: “completely random” and “not quite completely random”. It’s not always obvious which is which, and it often barely matters. A good way to tell the difference is to imagine showing the game as it presently is to Leonard Shelby (the guy from Memento who can’t form new memories). If after extensive investigation he always has the same advice (“I don’t know, bet on red?”), then the game is memoryless. “Memoryless” is a genuine fancy math term, and refers to systems whose future results are unaffected by their past results.
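In symbols, writing $X_1, X_2, \ldots$ for the results of successive rounds, the property described here says that knowing every earlier round tells you nothing extra about the next one, for every possible outcome $x$. (Strictly speaking this is independence of the rounds; textbooks most often use “memoryless” for a closely related property of waiting times.)

$$P(X_{n+1} = x \mid X_1, X_2, \ldots, X_n) = P(X_{n+1} = x)$$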

Leonard Shelby from Memento. If a game resets and doesn’t “remember” anything, then there’s no overall pattern, and no way to “outsmart” it. For these games Leonard is on an equal footing with everyone else.

Say there are some folk playing a really simple game called “guess the number”. You guess a number, roll a die, and if you guessed right you win. For all its pomp and glitter, this is essentially what gambling is. Don’t gamble.

Now say that a few rounds have already been played, and on the fourth round a 3 is rolled. Lenny would experience that fourth round differently than most other people.

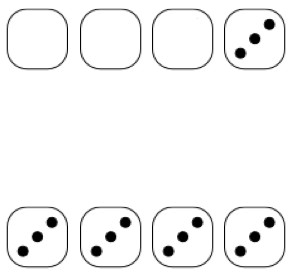

The same series of rounds as seen by someone without memory (top) and as seen by someone with memory (bottom).

Lenny sees a 3 and moves on with his life. He knows that a 3 is as likely as any other number, so he isn’t surprised. It’s only those of us burdened with memory who see “patterns” in these random numbers (fun fact: this is called “apophenia”). Someone who had seen the first rounds churn out a string of 3’s might think that the fourth round will be less likely or more likely to be a 3. However, assuming that the die is fair, it turns out that Lenny’s intuition is better than ours; each roll is completely independent of all the other rolls.
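One way to check Lenny’s intuition is to simulate it. The sketch below is a minimal illustration, assuming Python’s `random` module is a good enough stand-in for a fair die; the function name `conditional_three_rate` and the trial count are arbitrary choices. It rolls four dice many times, keeps only the runs that started with three 3’s, and asks how often the fourth roll was also a 3.

```python
import random


def conditional_three_rate(trials: int, seed: int = 0) -> tuple[float, int]:
    """Estimate P(4th roll is a 3 | first three rolls were all 3) by simulation.

    Returns the estimated rate and how many qualifying streaks were seen.
    """
    rng = random.Random(seed)  # seeded so the run is reproducible
    streaks = 0       # sequences whose first three rolls were all 3
    fourth_was_3 = 0  # ...and of those, how many rolled a 3 next
    for _ in range(trials):
        rolls = [rng.randint(1, 6) for _ in range(4)]  # four fair six-sided dice
        if rolls[:3] == [3, 3, 3]:
            streaks += 1
            if rolls[3] == 3:
                fourth_was_3 += 1
    rate = fourth_was_3 / streaks if streaks else float("nan")
    return rate, streaks


rate, n = conditional_three_rate(300_000)
print(f"{n} streaks of three 3's; the fourth roll was a 3 in {rate:.3f} of them")
```

If the die “remembered” the streak, that fraction would drift away from 1/6 ≈ 0.167. It doesn’t, beyond ordinary sampling noise.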

The chance of getting these four 3’s in a row is $\left(\frac{1}{6}\right)^4 = \frac{1}{1296}$. That’s clearly pretty unlikely, but it’s exactly as unlikely as every other possible combination. “1, 2, 3, 4” or “2, 6, 5, 5” or whatever else all show up with the same probability. There are some subtleties in combinatorics, but as long as you keep track of the order it’s fairly straightforward. “3, 3, 3, 3” is definitely unlikely, but so is every other possibility. If the lottery pulled the same number, or a string of consecutive numbers, or some other obvious pattern, it would be surprising but it would be no more or less likely than any other sequence of numbers. That said, if it keeps happening, then you may want to explore why. For example, there may be trickery involved.
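The counting argument can be checked by brute force. This sketch simply lists every ordered sequence of four rolls with `itertools.product`, so each one appears exactly once and gets the same probability. The last few lines show the “keep track of the order” subtlety: if you ignore order and lump sequences together by which numbers they contain, some groups are bigger than others.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Every ordered sequence of four fair six-sided dice rolls.
sequences = list(product(range(1, 7), repeat=4))
p_each = Fraction(1, len(sequences))  # each ordered sequence is equally likely

print(len(sequences))  # 1296
print(p_each)          # 1/1296

# "Patterned" and "random-looking" sequences each appear exactly once,
# so they all have the same probability.
for seq in [(3, 3, 3, 3), (1, 2, 3, 4), (2, 6, 5, 5)]:
    assert sequences.count(seq) == 1
    print(seq, p_each)

# Ignoring order changes the picture: the numbers {2, 5, 5, 6} can arrive
# in several orders, while {3, 3, 3, 3} can arrive in only one.
by_multiset = Counter(tuple(sorted(s)) for s in sequences)
print(by_multiset[(3, 3, 3, 3)], by_multiset[(2, 5, 5, 6)])  # 1 12
```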

What we expect to see is what fancy math folk call a “typical sequence”; big jumbles of numbers with no discernible rhyme or reason. Every string of (fair) rolled dice is equally likely and while randomly emerging “patterns” will occasionally show up, they don’t change the math and can’t be predicted. Of course, they do make for better stories.
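To get a feel for how often “patterns” show up on their own, here is a sketch that computes the exact chance that `n` fair rolls contain a streak of at least `k` identical numbers. The function name `prob_run_at_least` is made up for illustration; it works by tracking only the length of the current streak, which is all a fair die’s history can matter for here.

```python
from fractions import Fraction


def prob_run_at_least(n: int, k: int, faces: int = 6) -> Fraction:
    """Exact probability that n fair rolls contain k or more identical values in a row."""
    if n < 1 or k < 1:
        raise ValueError("n and k must be positive")
    if k == 1:
        return Fraction(1)  # any single roll counts as a streak of length 1
    same = Fraction(1, faces)            # next roll matches the previous one
    differ = Fraction(faces - 1, faces)  # next roll starts a new streak
    # no_run[r] = probability that no streak of length k has appeared yet
    # and the streak ending at the latest roll has length r.
    no_run = {1: Fraction(1)}  # state after the first roll
    for _ in range(n - 1):
        nxt: dict[int, Fraction] = {}
        for r, p in no_run.items():
            if r + 1 < k:  # extending the streak still falls short of k
                nxt[r + 1] = nxt.get(r + 1, Fraction(0)) + p * same
            nxt[1] = nxt.get(1, Fraction(0)) + p * differ
        no_run = nxt
    return 1 - sum(no_run.values())


print(prob_run_at_least(4, 4))           # 1/216: four rolls, all the same
print(float(prob_run_at_least(100, 4)))  # a long session
```

Trying a long session like `prob_run_at_least(100, 4)` shows that a four-in-a-row streak somewhere is far from rare, even though no particular streak is predictable.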

Games like craps or roulette are memoryless, which means that notions like “hot tables” and “runs” are completely baseless. On the other hand, games like blackjack are not quite memoryless. Since the cards are pulled from the same shoe, if you sit and watch the cards for long enough you can predict which cards will be drawn next slightly better than someone who hasn’t.
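Here is a small sketch of why a shoe has memory and a die doesn’t. It assumes a shoe of six standard 52-card decks (a hypothetical but common setup) and asks one question: what is the chance the next card is worth ten (a 10, J, Q or K)? The helper names `fresh_shoe`, `p_next_ten` and `deal` are invented for this example. Cards that have already come out are gone until the reshuffle, so the answer depends on what you’ve seen.

```python
from fractions import Fraction

RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
TEN_VALUED = {"10", "J", "Q", "K"}


def fresh_shoe(decks: int = 6) -> dict[str, int]:
    """Card counts by rank for a shoe of `decks` standard 52-card decks."""
    return {rank: 4 * decks for rank in RANKS}


def p_next_ten(shoe: dict[str, int]) -> Fraction:
    """Chance the next card is ten-valued, given what is left in the shoe."""
    remaining = sum(shoe.values())
    tens = sum(n for rank, n in shoe.items() if rank in TEN_VALUED)
    return Fraction(tens, remaining)


def deal(shoe: dict[str, int], seen: list[str]) -> None:
    """Remove cards that have been seen; they won't come back until a reshuffle."""
    for card in seen:
        if shoe[card] == 0:
            raise ValueError(f"no {card} left in the shoe")
        shoe[card] -= 1


shoe = fresh_shoe()
print(p_next_ten(shoe))  # 4/13 for a full shoe (96 of 312 cards)
# Someone who watched a run of low cards come out knows more than Lenny does:
deal(shoe, ["2", "3", "4", "5", "6"] * 4)
print(p_next_ten(shoe))  # 24/73, slightly better than 4/13
```

A die, by contrast, “refills” itself after every roll, so the same calculation would give 1/6 no matter what came before.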

Lotteries are also memoryless. So, assuming the lottery is fair, the only way you can increase your probability of winning is to buy more tickets (but please don’t). Number order and choice make no difference whatsoever. Unfortunately, assuming that the lottery is fair is a big assumption, and it isn’t necessarily true. Keep in mind that lotteries, like all organized gambling institutions, are not created so that someone will win, they’re created so that everyone will lose.
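To put numbers on “buy more tickets”, this sketch assumes a hypothetical fair lottery where 6 numbers are drawn from 49 and order doesn’t matter (the pool and pick sizes are just parameters; `p_win` is an illustrative name). Every combination, “1, 2, 3, 4, 5, 6” included, is one of the same equally likely outcomes, so the only lever is how many distinct combinations you hold.

```python
from fractions import Fraction
from math import comb


def p_win(tickets: int, pool: int = 49, picks: int = 6) -> Fraction:
    """Jackpot chance holding `tickets` *distinct* combinations in a
    hypothetical fair pick-`picks`-of-`pool` lottery (draw order ignored)."""
    total = comb(pool, picks)  # number of equally likely combinations
    if not 0 <= tickets <= total:
        raise ValueError("tickets must be between 0 and the number of combinations")
    return Fraction(tickets, total)


print(comb(49, 6))  # 13983816 possible combinations
print(p_win(1))     # 1/13983816, whichever numbers you pick
print(p_win(10))    # 5/6991908: ten times the chance, still essentially zero
```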

If you want to win a lottery, far and away the best way to do it is to set one up yourself (which is illegal almost everywhere there are laws). Not to put too fine a point on it, but people who run big lotteries and casinos are massive ——-s. Gambling is seriously bad news, pretty much across the board (the owners do well).
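The reason the owners do well fits in a few lines of arithmetic: the expected profit per bet. This sketch uses an even-money bet on red in roulette, assuming the standard wheels (American: 1 to 36 plus 0 and 00, so 38 pockets; European: 1 to 36 plus 0, so 37 pockets; 18 red either way). The function name `expected_value` is just for illustration. The extra green pockets are exactly where the house gets its cut.

```python
from fractions import Fraction


def expected_value(p_win: Fraction, payout: int, stake: int = 1) -> Fraction:
    """Average profit per bet: win `payout` with probability p_win, else lose `stake`."""
    return p_win * payout - (1 - p_win) * stake


# Even-money bet on red: 18 red pockets on either wheel.
american = expected_value(Fraction(18, 38), payout=1)  # 38 pockets: 1-36, 0, 00
european = expected_value(Fraction(18, 37), payout=1)  # 37 pockets: 1-36, 0
print(american, float(american))  # -1/19, about -0.0526 per dollar bet
print(european, float(european))  # -1/37, about -0.0270 per dollar bet
```

For comparison, “guess the number” on a fair die only breaks even (an expected value of exactly 0) if a win pays 5 to 1; any casino version pays less than that.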

There are much better ways to throw away money than playing the lottery. Before you think about giving away no-strings-attached money to people who don’t need it, consider trying: “cashfetti” cannons, recreating that scene from Indecent Proposal, money origami, lighting cigars, lining animal pens, breaking chopsticks, and eating it to gain its power.

A lot of folk have written in asking for mathematically-based gambling advice and, details aside, here it is: Don’t. The only way to win is not to play.