Physicist: This is a difficult question to even ask, because the word “exist” carries with it some “time-based assumptions”. For example, if you ask “does the Colossus of Rhodes exist?” the correct answer should be “it did, but it doesn’t now.”

The problem with the way the word “exists” is used is that it implies “now”. So, in that sense: no, the past and future don’t exist (by definition). But big issues start coming into play when you consider that in relativity (which has given us a much more solid and nuanced understanding of time and space) what “now” is depends on how you’re physically moving. There’s a post here that goes into exactly why.

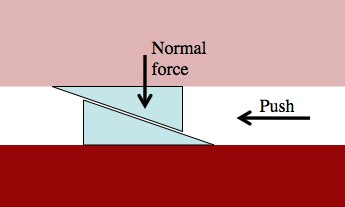

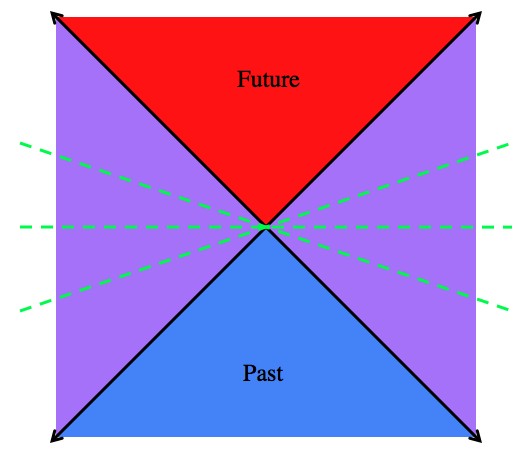

“Here and now” is the center of this picture. Everything in the bottom blue triangle is definitely in the past, and everything in the top red triangle is definitely in the future. But things in the purple triangles can be either in the past or future or present, depending on how fast you’re moving. The dashed lines are examples of different “nows”. In this diagram time points up and space points left/right.
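To see numerically how one distant event can land in someone's past, present, or future, here is a minimal Python sketch of the standard Lorentz transformation for time, t′ = γ(t − vx/c²). It assumes units where c = 1 (years and light-years), motion along one spatial axis, and observers who pass through “here and now”. The function name `lorentz_time` is just an illustrative choice.

```python
import math


def lorentz_time(t, x, v):
    """Time coordinate of event (t, x) for an observer moving at velocity v.

    Units with c = 1 (time in years, distance in light-years), |v| < 1.
    The observer passes through here-and-now, (t, x) = (0, 0).
    """
    gamma = 1.0 / math.sqrt(1.0 - v * v)
    return gamma * (t - v * x)


# "Now, but 1 light-year to the right": a purple-region event.
# "2 years ago, 1 light-year away": inside the blue past light cone.
for v in (-0.5, 0.0, 0.5):
    now_elsewhere = lorentz_time(0.0, 1.0, v)
    past_event = lorentz_time(-2.0, 1.0, v)
    print(f"v={v:+.1f}  now-elsewhere t'={now_elsewhere:+.3f}  past-event t'={past_event:+.3f}")
```

Here the purple-region event gets t′ > 0 (future) for v = −0.5, t′ = 0 (present) at rest, and t′ < 0 (past) for v = +0.5, while the event inside the past light cone keeps t′ < 0 for every allowed speed.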

Here’s what’s interesting with that: if we can say that the present and all those things that are happening now exist (regardless of whose “now” we’re using), then we can show that the past and future exist in the exact same sense.

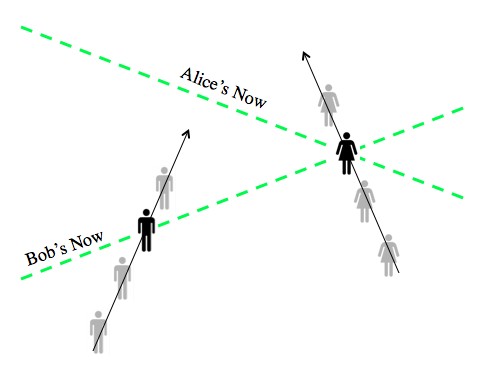

By moving fast, and in different directions, Alice and Bob have different “nows”. In this diagram Bob’s now includes Alice at some particular moment, but for Alice that moment happens at the same time (same “now”) as a time in Bob’s future. Like in the last diagram, time is up and space is left/right.
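The Alice-and-Bob construction can be checked with the same time formula. This sketch works in Bob's rest frame (only their relative velocity matters), with c = 1; the distance `d`, the speed `v`, and the helper names are hypothetical. At Bob's t = 0, Alice is at x = d, so that moment of hers is on Bob's “now”. Requiring Bob's event (t, 0) to have the same time coordinate in Alice's frame as her event (0, d) gives t = −vd, so if Alice is moving toward Bob (v < 0), the moment on Bob's worldline that she calls “now” is in Bob's future.

```python
import math


def lorentz_time(t, x, v):
    """Time coordinate of event (t, x) in a frame moving at velocity v (c = 1)."""
    gamma = 1.0 / math.sqrt(1.0 - v * v)
    return gamma * (t - v * x)


def bob_time_simultaneous_for_alice(d, v):
    """Bob's clock reading that Alice calls simultaneous with her event (0, d).

    Solving lorentz_time(t, 0, v) == lorentz_time(0, d, v) for t gives t = -v * d.
    """
    return -v * d


d = 10.0   # hypothetical: Alice is 10 light-years away in Bob's "now"
v = -0.6   # hypothetical: Alice moves toward Bob at 0.6c
t_bob = bob_time_simultaneous_for_alice(d, v)
print(f"Alice's 'now' crosses Bob's worldline at Bob's t = {t_bob:.1f} years")

# Both events share one time coordinate in Alice's frame.
print(f"{lorentz_time(0.0, d, v):.3f} {lorentz_time(t_bob, 0.0, v):.3f}")
```

With these numbers Bob's t comes out to 6 years and both events sit at 7.5 years in Alice's frame. Flipping the sign of `v` (Alice moving away) puts the matching moment in Bob's past instead.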

Again, if we define things that can be found “right now” as existing, and we don’t care whose notion of “right now” we use, then the future and past exist in exactly the same way that the present exists.

It seems as though what’s going on in the present is somehow important and “more real” than what happened in the past. But consider this: we never interact with other things in the present. Because no effect can travel faster than light, the best we can hope for is to interact with the recent past of other things. For example, since light travels about 1 foot per nanosecond, the screen you’re seeing now is really the screen as it was a nanosecond or two ago. Hard to notice. In relativity everything (all the laws, cause and effect, that sort of thing) is “local”, which means that the only thing that matters to what’s happening here and now is everything in the “past light cone” of here and now. That’s the blue bottom triangle of the top picture.
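The “foot per nanosecond” rule of thumb is easy to check. This sketch uses the exact defined values of the speed of light and the international foot; the 1 ft and 2 ft viewing distances are assumptions, and it only counts the light's travel time, not any delay in the display itself.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre
METERS_PER_FOOT = 0.3048              # exact, international foot


def light_delay_ns(distance_feet):
    """Nanoseconds for light to cross distance_feet in vacuum."""
    meters = distance_feet * METERS_PER_FOOT
    return meters / SPEED_OF_LIGHT_M_PER_S * 1e9


for feet in (1, 2):  # assumed screen distances
    print(f"{feet} ft -> {light_delay_ns(feet):.2f} ns")
```

Light actually covers about 0.98 feet per nanosecond, so a screen 1 to 2 feet away is seen roughly 1 to 2 nanoseconds late.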

What’s happening now in other places is totally disconnected. For example, Alpha Centauri is about 4 light years away, and while things are certainly happening there “right now”, it won’t matter to us at all for another four years. Even though those events are happening now, they’re exactly as indeterminate and hard to guess as the things that will happen in the future. The point is that “now” does extend throughout the universe, but that doesn’t physically mean anything, or have an actual effect on anything.

So if things in the past and future exist in the exact same way that things in the present exist, then doesn’t that mean that they’re fixed? If my future is the past for someone else who’s around right now (and necessarily moving very fast like in the last diagram), then does that somehow determine the future? The answer to that question is: it doesn’t matter, but for two interesting reasons.

First, if you consider someone else who’s around “now”, then they’re not in your past light cone and you’re not in theirs (in the top diagram the “nows” are always in the purple regions). That means that, for example, some of Bob’s future will be in Alice’s past, but neither of them can know what that future holds until they wait a while. Bob has to wait until he’s “in the future”, and Alice has to wait for the signal delay before she can know anything. Either way, the events of Bob’s future are unknowable regardless of who (in Bob’s present) is asking. The future is a lock and the only key is patience.
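The claim that everyone's “nows” sit in the purple regions can be phrased as a small check. In this sketch (units with c = 1, coordinates measured from your here-and-now, helper names hypothetical), an event (t, x) is inside a light cone when |t| > |x|. An observer passing through here-and-now calls it simultaneous when t′ = γ(t − vx) = 0, i.e. v = t/x, and that needs |v| < 1, which only happens when |t| < |x|. So every event that is “now” for somebody is outside both of your light cones: no signal connects it to you in either direction yet.

```python
def classify(t, x):
    """Causal relation of event (t, x) to here-and-now at (0, 0); units with c = 1."""
    if t == 0 and x == 0:
        return "here and now"
    if abs(t) > abs(x):
        return "future light cone" if t > 0 else "past light cone"
    if abs(t) == abs(x):
        return "on the light cone"
    return "spacelike (purple region)"


def simultaneity_velocity(t, x):
    """Velocity v (|v| < 1) of an observer through (0, 0) who calls (t, x) "now".

    From t' = gamma * (t - v * x) = 0 we need v = t / x. Returns None when that
    would require |v| >= 1, or when x == 0 (events at your own location).
    """
    if x == 0 or abs(t) >= abs(x):
        return None
    return t / x


for event in [(3.0, 1.0), (-3.0, 1.0), (0.5, 1.0), (-0.5, 1.0), (0.0, 4.0)]:
    print(event, classify(*event), "| 'now' for v =", simultaneity_velocity(*event))
```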

Answer gravy: The second reason it doesn’t matter if the future exists involves a quick jump into quantum mechanics (and arguably should have involved a jump into a new, separate post). There could be an issue with the future existing (and thus being predetermined) because that flies in the face of quantum randomness, which basically says (and this is glossing over a lot) that the result of an experiment doesn’t exist until after that experiment is done. This is embodied by Bell’s theorem, which is a little difficult to grasp. So Schrödinger’s Cat is both alive and dead until its box is opened. But if, in the future, the box has already been opened and the Cat is found to be alive, then the Cat was always alive. Things like superposition and all of the usual awesomeness of quantum mechanics go away.

But, before you stress out and start researching to try to really understand the problem in that last paragraph: don’t. Turns out there isn’t an issue. Even if the future does exist, it doesn’t mean that events are set in stone in any useful or important way. In the (poorly named) Many Worlds interpretation of quantum mechanics, everything that can happen does, and those many ways for things to happen are described by a (fantastically complicated) quantum wave function. That wave function is set in stone by an extant future, but that doesn’t tell you exactly what will happen. In the case of Schrödinger’s Cat, the Cat is in a super-position of both alive and dead before the box is opened, and afterward it’s still alive and dead but the observer is “caught up” in the super-position.

The super-position of states after the box is opened: Schrödinger sad about his dead cat and Schrödinger happy about his still living cat.

Before the box is opened we can say that, in the future, we will definitely be in a particular super-position of both happy (because of the cute living cat) and horrified (because of the gross dead cat). However, that doesn’t actually predict which result you’ll experience. Technically you’ll experience both.
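For a toy version of the “observer gets caught up” picture, here is a sketch that treats the Cat and Schrödinger as a tiny two-part quantum system. The basis labels, the equal amplitudes, and the `open_box` rule are simplifying assumptions, nothing like a real cat's wave function. Opening the box is modeled as the linear map |cat, ready⟩ → |cat, reaction⟩, so applying it to the super-position leaves both branches in the final state, each with a Born-rule weight of 1/2, and nothing in that final state singles out one branch as the one you'll experience.

```python
import math

# Toy state: maps a basis label (cat, observer) to an amplitude (real here for simplicity).
# Hypothetical labels; a real cat-plus-observer wave function is vastly larger.
amp = 1 / math.sqrt(2)
before = {("alive", "ready"): amp, ("dead", "ready"): amp}

# How the (hypothetical) observer ends up in each branch after looking.
REACTION = {"alive": "happy", "dead": "horrified"}


def open_box(state):
    """Apply |c, ready> -> |c, REACTION[c]> to every branch, keeping amplitudes."""
    after = {}
    for (cat, observer), a in state.items():
        assert observer == "ready", "toy model only covers an observer who hasn't looked yet"
        after[(cat, REACTION[cat])] = a
    return after


after = open_box(before)
for (cat, observer), a in after.items():
    print(f"{a:.3f} |{cat}, {observer}>   weight {abs(a) ** 2:.2f}")
```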


∞ is defined as the “value” such that given any number, x, we always have x < ∞.

c (the speed of light), ℏ (the reduced Planck constant), and kB (the Boltzmann constant) are all equal to 1. So for example, “E=mc²” becomes “E=m” (again, this doesn’t change things any more than, say, switching between miles and kilometers does).
