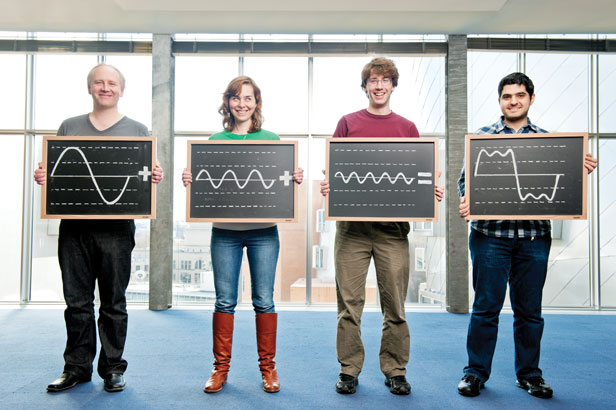

Physicist: Almost every imaginable signal can be broken down into a combination of simple waves. This fact is the central philosophy behind Fourier transforms (Fourier was very French, so his name is pronounced a little wonky: “4 E yay”).

A complicated signal can be broken down into simple waves. This break down, and how much of each wave is needed, is the Fourier Transform.

Fourier transforms (FT) take a signal and express it in terms of the frequencies of the waves that make up that signal. Sound is probably the easiest thing to think about when talking about Fourier transforms. If you could see sound, it would look like air molecules bouncing back and forth very quickly. But oddly enough, when you hear sound you’re not perceiving the air moving back and forth, instead you experience sound in terms of its frequencies. For example, when somebody plays middle C on a piano, you don’t feel your ear being buffeted 261 times a second (the frequency of middle C), you just hear a single tone. The buffeting movement of the air is the signal, and the tone is the Fourier transform of that signal.

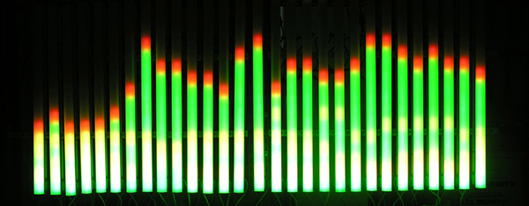

The layout of the keys of a piano (bottom) are like the Fourier transform of the sound the piano makes (top).

The Fourier transform of a sound wave is such a natural way to think about it, that it’s kinda difficult to think about it in any other way. When you imagine a sound or play an instrument it’s much easier to consider the tone of the sound than the actual movement of the air.

In fact, when sound is recorded digitally the strength of the sound wave itself can be recorded (this is what a “.wav” file is), but more often these days the Fourier transform is recorded instead. At every moment a list of the strengths of the various frequencies is “written down” (like in the picture above). This is more or less what an mp3 is (with lots of other tricks). It’s not until a speaker has to physically play the sound that the FT is turned back into a regular sound signal.

Older analog recording techniques, like this vinyl record, record the original sound signal and not the FT.

In the form of an FT it’s easy to filter sound. For example, when you adjust the equalizer on your sound system, like when changing the bass or treble, what you’re really doing is telling the device to multiply the different frequencies by different amounts before sending the signal to the speakers. So when the base is turned up the lower frequencies get multiplied by a bigger value than the higher frequencies.

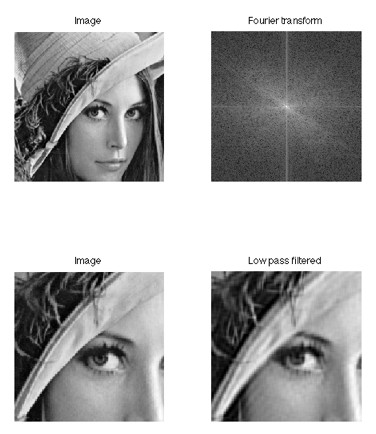

However, acoustics are just the simplest application of FT’s. An image is another kind of signal, but unlike sound an image is a “two dimensional” signal. A different kind of FT can be still found, and it is likewise two dimensional. When this was first done on computers it was found that, for pretty much any picture that isn’t random static, most of the FT is concentrated around the lower frequencies. In a nutshell, this is because most pictures don’t change quickly over small distances (something like “Where’s Waldo?” would be an exception), so the higher frequencies aren’t as important. This is the basic idea behind “.jpeg” encoding and compression (although there are other clever tricks involved).

An image and its Fourier transform. Notice that most of the FT is concentrated in the center (low frequencies). Deleting the FT away from the center saves a lot of data, and doesn’t do too much damage to the image. This is called a “low-pass filter”.

Interesting fun fact: the image of the woman in the hat is called “Lenna” and it’s one of the most commonly used standards in image-processing tests. It’s the top half of a adult image, and while it may seem strange that computer scientists would use that sort of image, the argument can be made that most comp-sci students don’t have too much experience finding any other kind.

While digital technology has ushered in an explosion of uses for Fourier transforms, it’s a long way from being the only use. In both math and physics you find that FT’s are floating around behind the scenes in freaking everything. Any time waves are involved in something (which is often), you can be sure that Fourier transforms won’t be far behind. It’s commonly easy to describe something with a single, simple wave, like pendulums or a single bouncing ball. Often (but certainly not always) it’s possible to break down complex systems into simple waves (or to approximately do this), then to look at how those waves behave individually, and then to reconstruct the behavior of the system as a whole. Basically, it’s easy to deal with “sin(x)” but difficult to deal with a completely unknown function “f(x)”.

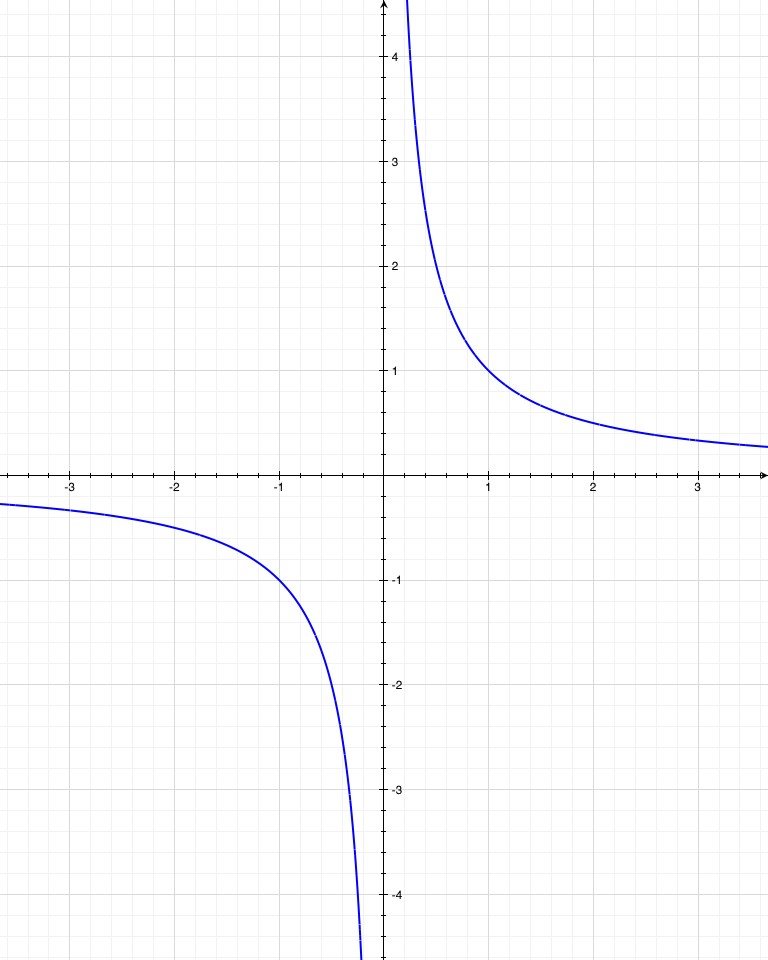

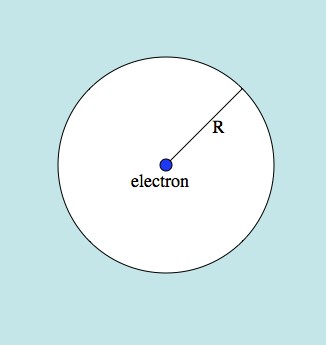

Physicists jump between talking about functions and their Fourier transforms so often that they barely see the difference. For example, for not-terribly-obvious reasons, in quantum mechanics the Fourier transform of the position a particle (or anything really) is the momentum of that particle. Literally, when something has a lot of momentum and energy its wave has a high frequency, and waves back and forth a lot. Applying Fourier stuff to quantum mechanics is one of the most direct ways to derive the infamous Heisenberg Uncertainty principle! FT’s even show up in quantum computers, as described in this shoddily written article.

Mathematicians tend to be more excited by the abstract mathematical properties of Fourier transforms than by the more intuitive properties. A lot of problems that are difficult/nearly impossible to solve directly become easy after a Fourier transform. Mathematical operations on functions, like derivatives or convolutions, become much more manageable on the far side of a Fourier transform (although, more often, taking the FT just makes everything worse).

Answer gravy: Fourier transforms are of course profoundly mathematical. If you have a function, f, that repeats itself every 2π, then you can express it as a sum of sine and cosine waves like this: .

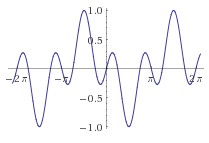

It turns out that those A’s and B’s are fairly easy to find. Sines and cosines have a property called “orthogonality”.

f(x) = sin(x)cos(2x). The orthogonality of sines and cosines is a statement about the fact that mixing sines and cosines of different frequencies creates functions that are positive exactly as often as they are negative (zero on average).

Now, say you want to find out what B3 is (for example). Just multiply both sides by cos(3x), and integrate from 0 to 2π.

You can do this for all of those A’s and B’s, so and

.

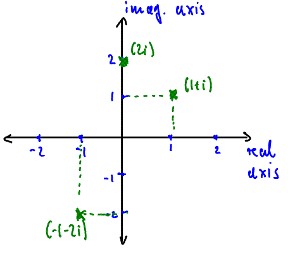

Taking advantage of Euler’s equation, eiθ = cos(θ) + i sin(θ), you can compress this into one equation: ,

. There are some important details behind this next bit, but if you expand the size of the interval from [0, 2π] to (-∞, ∞) you get:

and

Here, instead of Cn, you have

and instead of a summation you have an integral, but the essential idea is the same. Here,

is the honest-to-god actual Fourier transform of f.

Now here’s a big part of why mathematicians love Fourier transforms so much that they want to have billions of their babies, naming them in turn, “baby sub naught, baby sub one, through baby sub n”.

If you’re looking at a differential equation, then you can solve many of them fairly quickly using FT’s. Derivatives become multiplication by a variable when passed through a FT. Here’s how:

Suddenly, your differential equation becomes a polynomial! This may seem a bit terse, but to cover Fourier transforms with any kind of generality is a losing battle: it’s a huge subject with endless applications, many of which are pretty complicated. Math’s a big field after all.

The record groove picture is from here.

The Lenna pictures are from here.

The top picture with the four scientists is from here.