Physicist: This is a very common question that’s generated (as best I can tell) by a misrepresentation of the Big Bang that you’ll often see repeated in popular media. In the same documentary you may hear statements along the lines of:

“our telescopes can see the light from the earliest moments of the universe” and

“in the Big Bang, all of the energy and matter in the universe suddenly exploded out of a point smaller than the head of a pin”.

The first statement is pretty solid. Technically, the oldest light we can see is from about 380,000 years after the Big Bang, but we can use it to infer some interesting things about what was happening within the first second (which is the next best thing to actually being able to see the first second). That light is now the Cosmic Microwave Background.

The second statement has a mess of holes in it.

First, when someone says that all of the matter and energy in the universe was in a region smaller than the head of a pin, what they’re actually talking about is the observable universe, which is just all of the galaxies and whatnot that we can see. If you’re standing on the sidewalk somewhere you can talk meaningfully about the “observable Earth” (everything you can see around you), but it’s important to keep in mind that there’s very little you can say about the size and nature of the entire Earth from one tiny corner of it.

The second statement also implies that there’s time and space independent of the universe. Phrases like “suddenly exploded out of a point” make it sound like you could have been floating around, biding your time playing solitaire and checking email in a vast void, and then Boom! (pardon; “Bang!”) a whole lot of stuff suddenly appears nearby and expands. If the Big Bang and the expansion of the universe were as straightforward as an explosion and things flying away from that explosion, then the earliest light would be on the front of our ever-expanding universe. If that were the case (and it seems to be from the images and videos presented in, like, every documentary evar), then there’s no way you’d be able to see the light from the early universe.

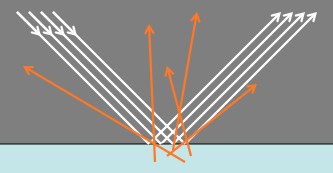

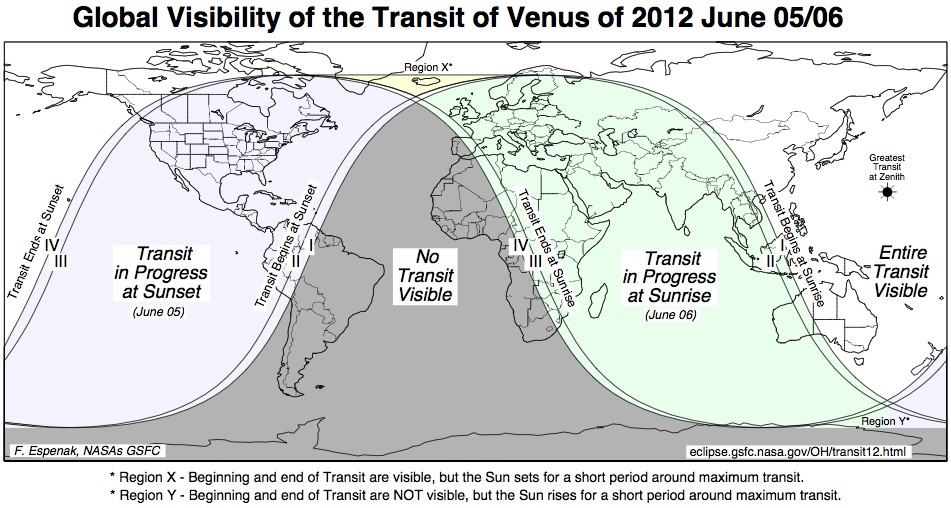

The misrepresentation often shown (or implied) in many science shows and books: the Big Bang as an explosion that happens at some point, and all of the resulting light (blue ring) and matter (stuff in the ring) in the universe spreading out from that point (red star). However, this would mean that the light from the early universe is long gone, and we would have no way to see it.

Just to be doubly clear, the idea of the universe exploding out of one particular place, and then all of the matter flying apart into some kind of pre-existing space, is not what’s actually going on. It’s just that getting art directors to be accurate in a science documentary is about as difficult as getting penguins to walk with decorum.

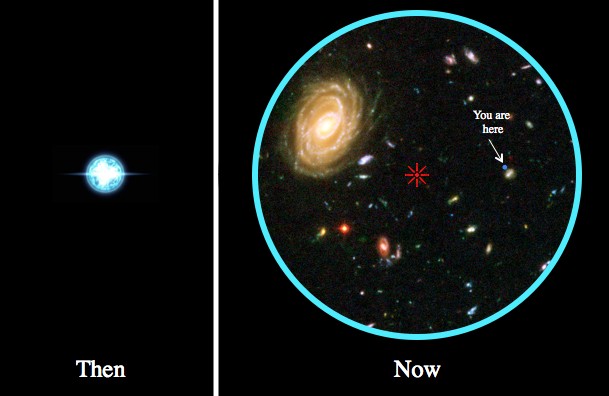

The view of the universe that physicists work with today involves space itself expanding, as opposed to things in space flying apart. Think of the universe as an infinite rubber sheet*. The early universe was very dense, and very hot, what with things being crammed together. Hot things make lots of light, and the early universe was extremely hot everywhere, so there would have been plenty of light everywhere, shooting in every direction.

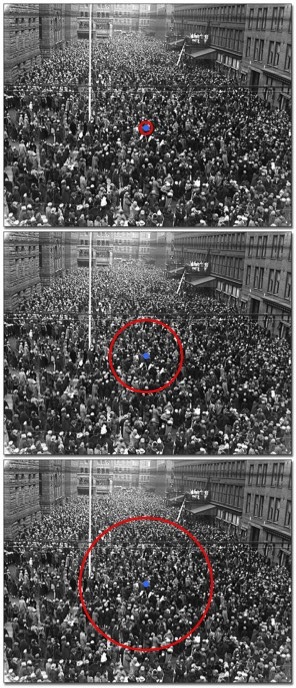

If you start with light everywhere, you’ll continue to have it everywhere. The only thing that changes with time is how old the light you see is, and how far it’s traveled. The expansion of the universe is independent of that. Imagine standing in a huge (infinite) crowd of people. If everyone yelled “woo!” (or something equally pithy) all at once, you wouldn’t hear it all at once; you’d keep hearing it forever, from progressively farther and farther away.

Everyone in a crowd yells “woo!” at the same time. As time marches forward you (blue dot) will continue to hear the sound, but the sound you’re hearing is older and from farther away (red line). Light from the early universe works the same way.
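For anyone who likes to poke at an analogy, here is a minimal Python sketch of the crowd picture next to the "explosion" picture. The speed of sound, the 100 m lone yeller, and the one-person-per-metre crowd out to 10 km are all made-up illustrative numbers, and the function names are hypothetical.

```python
SPEED_OF_SOUND = 343.0  # metres per second in air (assumed round value)


def heard_in_window(yeller_distances, t_start, t_end, speed=SPEED_OF_SOUND):
    """Return the distances of yellers whose "woo!" reaches you in [t_start, t_end).

    Everyone yells at t = 0, so a yeller at distance d is heard at time d / speed.
    """
    return [d for d in yeller_distances if t_start <= d / speed < t_end]


def arrivals_per_second(yeller_distances, seconds):
    """Count how many yells arrive during each one-second window."""
    return [len(heard_in_window(yeller_distances, t, t + 1)) for t in range(seconds)]


# Explosion picture: a single yeller 100 m away (hypothetical number).
lone_yeller = [100.0]

# Everywhere-at-once picture: one person every metre out to 10 km,
# a finite stand-in for the infinite crowd.
crowd = [float(d) for d in range(1, 10_001)]

lone_timeline = arrivals_per_second(lone_yeller, 20)
crowd_timeline = arrivals_per_second(crowd, 20)

print("lone yeller:", lone_timeline)
print("crowd:      ", crowd_timeline)
```

The lone yeller shows up in exactly one window and then the timeline is all zeros, which is the "long gone" problem with the explosion picture. The crowd never goes quiet over these 20 seconds, and the yells arriving in later windows come from farther away.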

As the universe expands (as the rubber sheet is stretched) everything cools off, and the universe becomes clear, as everything is given a chance to move apart. That same light is still around, it’s still everywhere, and it’s still shooting in every direction. Wait a few billion years (14 or so), and you’ve got galaxies, sweaters for dogs, hip-hop music; a thoroughly modern universe. That old light will still be everywhere, shooting in every direction. Certainly, there’s a little less because it’s constantly running into things, but the universe is, to a reasonable approximation, empty. So most of the light is still around.

The expansion of the universe does have some important effects, of course. The light that we see today as the cosmic microwave background started out far more energetic, radiated from the omnipresent, ultra-hot gases of the young universe (gamma rays at the very earliest times, and a roughly 3,000 K glow of visible and infrared light by the time the universe turned transparent), but it got stretched out, along with the space it’s been moving through. The longer the wavelength, the lower the energy. The background energy is now so low that you can be exposed to the sky without being killed instantly. In fact, the night sky today radiates energy at the same intensity as anything chilled to about -270 °C (That’s why it’s cold at night! Mystery solved!). Even more exciting, the expansion means that the sources of the light we see today are now farther away than they were when the light was emitted. So, while the oldest light is only about 14 billion years old (and has traveled only 14 billion light years), the location from which it was emitted can be calculated to be about 46 billion light years away right now!

Isn’t that weird?
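If you'd like to see where a "14 versus 46" pair of numbers can come from, here is a rough Python sketch. It assumes a flat universe filled with matter, radiation, and dark energy, and every number in it (the Hubble constant, the density fractions, an emission redshift of about 1090) is an assumed, illustrative value rather than something taken from this post. The function names are hypothetical, and the integration is a plain midpoint rule, nothing fancy.

```python
import math

# Assumed, illustrative cosmological parameters (not from the post).
H0_KM_S_MPC = 67.7                     # Hubble constant
OMEGA_M = 0.31                         # matter fraction
OMEGA_R = 9.1e-5                       # radiation fraction
OMEGA_L = 1.0 - OMEGA_M - OMEGA_R      # dark energy fraction (flat universe)
Z_EMIT = 1090.0                        # redshift of the oldest visible light

KM_PER_MPC = 3.0857e19
SECONDS_PER_GYR = 3.15576e16

# 1/H0 in billions of years. With the speed of light equal to one light year
# per year, the same number is c/H0 in billions of light years.
HUBBLE_TIME_GYR = KM_PER_MPC / H0_KM_S_MPC / SECONDS_PER_GYR


def _integrate(f, lo, hi, steps=20_000):
    """Midpoint-rule integral of f from lo to hi."""
    h = (hi - lo) / steps
    return h * sum(f(lo + (i + 0.5) * h) for i in range(steps))


def _root(a):
    """a^2 * H(a) / H0 for scale factor a (a = 1 today)."""
    return math.sqrt(OMEGA_R + OMEGA_M * a + OMEGA_L * a**4)


def light_travel_time_gyr(z):
    """How long the light has been travelling, in billions of years.

    Integrates da / (a * H(a)) from emission to today, using a = u^2
    so the integrand stays smooth near small a.
    """
    u_emit = math.sqrt(1.0 / (1.0 + z))
    return HUBBLE_TIME_GYR * _integrate(lambda u: 2 * u**3 / _root(u * u), u_emit, 1.0)


def distance_now_gly(z):
    """Present-day distance to the emitting spot, in billions of light years.

    Integrates da / (a^2 * H(a)): each stretch of the trip is scaled up by
    how much space has expanded since the light crossed it.
    """
    u_emit = math.sqrt(1.0 / (1.0 + z))
    return HUBBLE_TIME_GYR * _integrate(lambda u: 2 * u / _root(u * u), u_emit, 1.0)


travel_time = light_travel_time_gyr(Z_EMIT)
distance_now = distance_now_gly(Z_EMIT)
print(f"light travel time: {travel_time:.1f} billion years")
print(f"distance today:    {distance_now:.1f} billion light years")
```

With these assumed inputs the travel time lands a little under 14 billion years, while the present-day distance comes out in the mid-40s of billions of light years, more than three times larger. The only difference between the two integrals is one extra factor of the scale factor, which is the "space stretched behind the light" effect described above.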

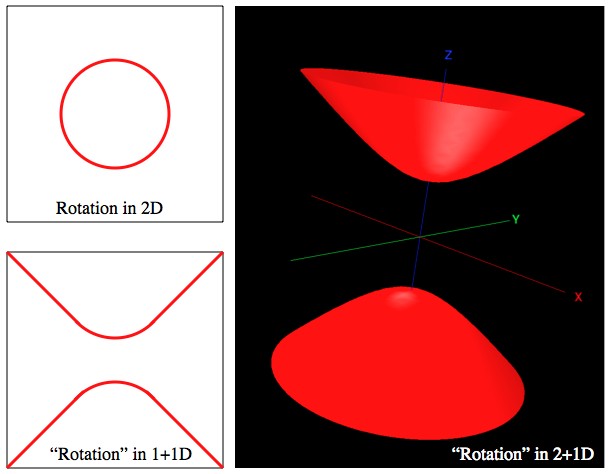

*The universe may be “closed”, in which case it’s curved and finite (like the surface of a balloon), or “open”, in which case it’s infinite in all directions (like an infinite rubber sheet). “Curved” and “flat” are a little hard to picture when you’re talking about three-dimensional space, but there’s a little help here. So far, all indications are that the universe is flat, so it’s either infinite or so big that the curvature can’t be detected by our equipment (kinda like how the curvature of the Earth can’t be detected by just looking around, because the Earth is so big). In either case, the expansion works the same way. However, in the open case it’s a touch more difficult to picture how the Big Bang worked. The universe would have started infinite, and then gotten bigger. The math behind that is pretty easy to deal with, but it’s still harder to imagine.