Physicist: It’s amazing that we’ve found any at all, considering the difficulties involved.

The scale of things in space is ridiculous. The closest discovered planet to us, outside of our solar system, is “Epsilon Eridani b”, a mere stone’s throw away at 10.4 light years. If the Sun were about the size of a baseball, and for whatever reason was being kept in New York, then the star Epsilon Eridani would be about as far away as Paris (overland distance), the orbit of the planet Epsilon Eridani b would be a ring about 30 meters in radius, and the planet itself would probably be about the size of a pea. “Probably” because while taking a picture of something substantially smaller than a bread box from 5,800 km (3,600 miles) away seems like it should be easy, astronomers still don’t have a way to do it, despite the extra time they have while all their friends are on dates.
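To check the baseball picture, here is a small Python sketch that scales every distance by the same factor that shrinks the Sun to a baseball. The baseball diameter (7.4 cm), the 3.4 AU orbit assumed for Epsilon Eridani b, and the guess that the planet is about as wide as Jupiter are illustrative assumptions, not measured inputs from this post.

```python
# Scale-model sketch: shrink the Sun to baseball size and scale everything
# else by the same factor. Constants are approximate; the baseball size,
# the 3.4 AU orbit and the Jupiter-sized planet are assumptions.

SUN_DIAMETER_M = 1.392e9        # mean solar diameter, meters
BASEBALL_DIAMETER_M = 0.074     # assumed baseball diameter, meters
LIGHT_YEAR_M = 9.461e15         # one light year, meters
AU_M = 1.496e11                 # one astronomical unit, meters
JUPITER_RADIUS_M = 6.99e7       # Jupiter's mean radius, meters

# Model meters per real meter.
SCALE = BASEBALL_DIAMETER_M / SUN_DIAMETER_M


def to_model(real_meters: float) -> float:
    """Convert a real distance to its size in the baseball-Sun model (meters)."""
    return real_meters * SCALE


star_distance_km = to_model(10.4 * LIGHT_YEAR_M) / 1000
orbit_radius_m = to_model(3.4 * AU_M)                        # assumed orbit
planet_diameter_mm = to_model(2 * JUPITER_RADIUS_M) * 1000   # assumed size

print(f"Distance to Epsilon Eridani: {star_distance_km:,.0f} km")
print(f"Orbit radius of Epsilon Eridani b: {orbit_radius_m:.0f} m")
print(f"Diameter of Epsilon Eridani b: {planet_diameter_mm:.1f} mm")
```

With these inputs the star lands roughly 5,200 km away (the same ballpark as New York to Paris), the orbit comes out at roughly 27 m in radius, and the planet at about 7 mm across, which is indeed pea-sized.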

Despite the fantastic distances, the fact that we’re looking for tiny dim things right next to gigantic bright things, and the fact that when you look at another solar system all you can see (even with the most powerful telescopes) is a single pixel of light, we’ve still managed to find over 760 planets around other stars (almost all in the last decade), at distances of up to 27,700 light years (a quarter of the way across the galaxy).

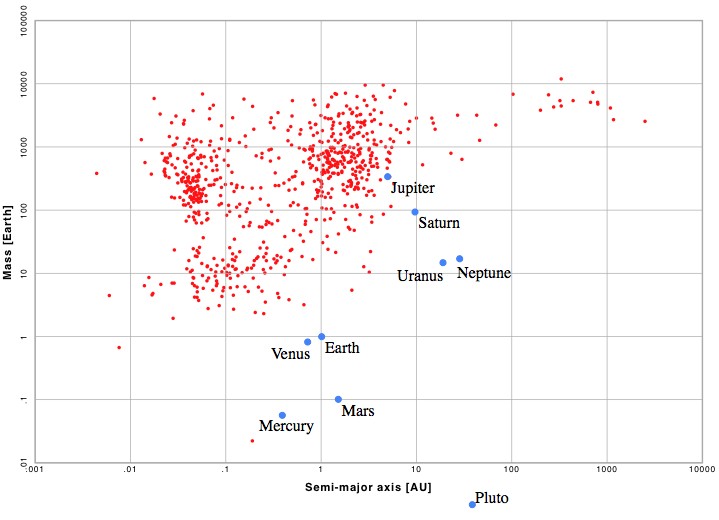

In our solar system we’ve found a mess of new planets (well, “dwarf planets” anyway) by taking pictures of the sky and literally looking for them. However, to find extra-solar planets (“exoplanets”) we’ve had to rely on methods that are decent at finding planets that are huge and close to their suns, and bad at finding planets that are small and far from their suns. That right there is the essential reason why we haven’t found any truly Earth-like planets yet: a planet like the Earth is too small and far from the Sun to be readily detectable.

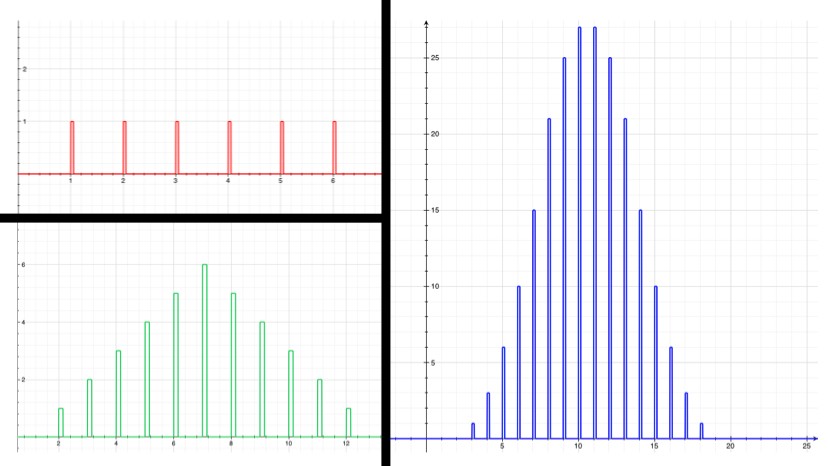

Orbital distance vs. mass for the 767 exoplanets found as of May 2012. For comparison, the planets of our solar system are included. An alien civilization using techniques similar to ours might be able to see Jupiter, but not much more.

The most common techniques for finding exoplanets today are the “radial velocity” technique and the “transit” technique.

An orbiting planet is whipped around in a huge circle by its host star, but at the same time the star itself is being moved in a tiny circle by its planets. The effect is small, but it has some pretty interesting consequences. In our solar system, there are two clusters of asteroids that share Jupiter’s orbit. In a simple orbital system (with no “Sun wobble”) something the size of a planet quickly eats up everything that shares its orbit. However, because Jupiter is heavy enough to literally make the Sun wobble, the situation is a bit more complicated, and the strange Jupiter-Sun-wobbliness dynamics lead to “Lagrange points”, where asteroids can orbit stably forever (it may be the case that all the planets have at least a few of these kinds of asteroids stuck in their orbits, but Jupiter’s are the easiest to find).

For astronomers, however, the more important effect is that the physical movement of the star produces a slight (really slight) Doppler shift as the star moves toward and away from telescopes on and around Earth. When the star wobbles toward us it appears bluer, and when it wobbles away it appears redder. For comparison, Jupiter causes the Sun to move back and forth at about 45 kph (28 mph). 45 kph is a hell of a lot slower than the speed of light, and as a result the reddening and bluing of the Sun by Jupiter is tiny.
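How fast does Jupiter actually swing the Sun around? Here is a rough Python sketch using the standard radial-velocity semi-amplitude formula for a circular orbit. It assumes we view the orbit edge-on (sin i = 1) and uses approximate textbook values for the masses and Jupiter’s 11.86-year period.

```python
import math

G = 6.674e-11            # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8              # speed of light, m/s
M_SUN = 1.989e30         # kg
M_JUPITER = 1.898e27     # kg
YEAR_S = 3.156e7         # seconds in a year


def rv_semi_amplitude(m_planet: float, m_star: float, period_s: float,
                      sin_i: float = 1.0) -> float:
    """Star's line-of-sight reflex speed (m/s) for a circular orbit:
    K = (2*pi*G / P)^(1/3) * m_p * sin(i) / (M_star + m_p)^(2/3)
    """
    return ((2 * math.pi * G / period_s) ** (1 / 3)
            * m_planet * sin_i / (m_star + m_planet) ** (2 / 3))


k = rv_semi_amplitude(M_JUPITER, M_SUN, 11.86 * YEAR_S)
shift = k / C  # fractional Doppler shift, delta-lambda / lambda

print(f"Sun's wobble speed: {k:.1f} m/s = {k * 3.6:.0f} km/h")
print(f"Fractional Doppler shift: {shift:.1e}")
print(f"Shift of a 500 nm line: {500e-9 * shift * 1e12:.3f} pm")
```

This gives about 12.5 m/s, or roughly 45 km/h, and a fractional color shift v/c of about 4 × 10⁻⁸: a 500 nm spectral line moves by only about two hundredths of a picometer.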

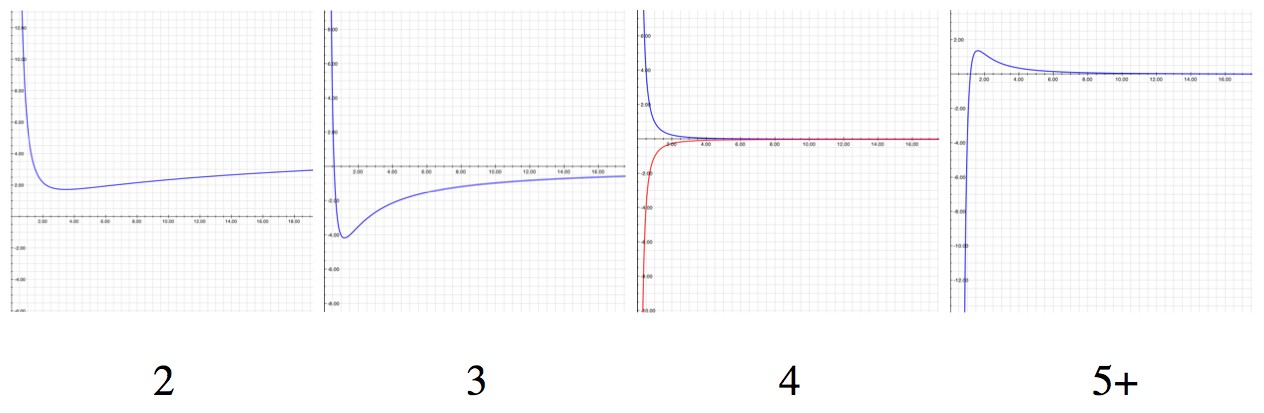

In order to see this tiny Doppler effect it helps a lot to have a strong wobble, caused by a big planet orbiting quickly. Stars come in a wide range of colors, so if it takes years for a star to wobble back and forth, it’s easy to assume that it’s a little extra blue or red because it’s hotter or colder, rather than because of its movement. But if a star’s color shifts back and forth every few days, in a very regular cycle, then what you’ve probably found is a close-orbiting exoplanet.
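Picking a regular, short cycle out of noisy measurements is usually done with a periodogram: try many candidate periods, fit a sine wave at each, and see which one explains the data best. The Python sketch below is a bare-bones least-squares version of that idea (close in spirit to the Lomb-Scargle periodogram). The 60 measurements, 4.2-day period, 50 m/s wobble and 15 m/s noise are all made up for illustration.

```python
import math
import random

random.seed(1)  # make the fake data reproducible

# Hypothetical data (not real observations): 60 radial-velocity measurements
# at random times over 100 days of a star wobbling with a 4.2-day period and
# a 50 m/s amplitude, plus 15 m/s of Gaussian measurement noise.
TRUE_PERIOD_D = 4.2
times = sorted(random.uniform(0.0, 100.0) for _ in range(60))
velocities = [50.0 * math.sin(2 * math.pi * t / TRUE_PERIOD_D)
              + random.gauss(0.0, 15.0) for t in times]

# Work with deviations from the average velocity.
mean_v = sum(velocities) / len(velocities)
dev = [v - mean_v for v in velocities]
total_power = sum(y * y for y in dev)


def explained_fraction(period: float) -> float:
    """Least-squares fit of a*sin(wt) + b*cos(wt) to the deviations at one
    trial period; returns the fraction of the scatter the fit explains."""
    w = 2 * math.pi / period
    s = [math.sin(w * t) for t in times]
    c = [math.cos(w * t) for t in times]
    s_ss = sum(x * x for x in s)
    s_cc = sum(z * z for z in c)
    s_sc = sum(x * z for x, z in zip(s, c))
    s_ys = sum(y * x for y, x in zip(dev, s))
    s_yc = sum(y * z for y, z in zip(dev, c))
    # Solve the 2x2 normal equations for a and b (Cramer's rule).
    det = s_ss * s_cc - s_sc * s_sc
    a = (s_ys * s_cc - s_yc * s_sc) / det
    b = (s_yc * s_ss - s_ys * s_sc) / det
    return (a * s_ys + b * s_yc) / total_power


# Scan trial frequencies between 1/30 and 1/1.5 cycles per day.
STEP = 0.0005
freqs = [1 / 30 + k * STEP for k in range(int((1 / 1.5 - 1 / 30) / STEP))]
powers = [explained_fraction(1 / f) for f in freqs]
best = max(range(len(freqs)), key=powers.__getitem__)
best_period = 1 / freqs[best]

print(f"Strongest regular cycle: {best_period:.2f} days, "
      f"explaining {powers[best]:.0%} of the scatter")
```

With this made-up data the strongest peak lands within about a tenth of a day of the true 4.2-day period. Real pipelines also have to cope with gaps between observing seasons, stellar activity and multiple planets, which this sketch ignores.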

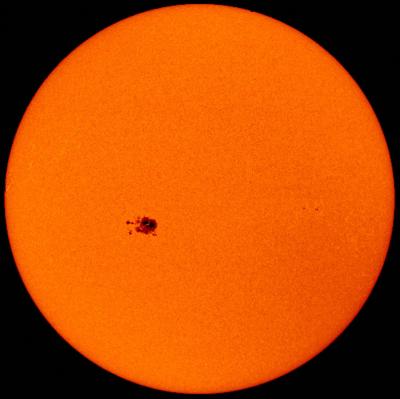

The transit technique, on the other hand, is a little more forgiving. The idea is that you stare at a star and wait for an eclipse. When an exoplanet passes between us and its host star it causes the star to dim a little. An alien civilization using this technique would see the Sun dim by about 0.0086% when the Earth was in front of it. Unfortunately, the Sun and stars like it naturally change intensity by about 0.1% all the time, due to sunspots and other vagaries of “solar weather”, which completely drowns out the influence of Earth-sized planets.
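The dimming is just the fraction of the star’s disk that the planet covers, (R_planet / R_star)². A quick Python check with approximate mean radii, ignoring limb darkening (the star’s edges being dimmer than its center):

```python
SUN_RADIUS_KM = 695_700
EARTH_RADIUS_KM = 6_371
JUPITER_RADIUS_KM = 69_911


def transit_depth(planet_radius_km: float, star_radius_km: float) -> float:
    """Fraction of starlight blocked while the planet's whole disk is in front
    of the star, ignoring limb darkening: (R_planet / R_star)^2."""
    return (planet_radius_km / star_radius_km) ** 2


earth = transit_depth(EARTH_RADIUS_KM, SUN_RADIUS_KM)
jupiter = transit_depth(JUPITER_RADIUS_KM, SUN_RADIUS_KM)
print(f"Earth dims the Sun by {earth:.4%}")
print(f"Jupiter dims the Sun by {jupiter:.2%}")
```

Earth comes out at roughly 0.008% (the exact value shifts a little depending on which radii you use), more than ten times smaller than the Sun’s ~0.1% natural flicker, while a Jupiter-sized planet blocks about 1%.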

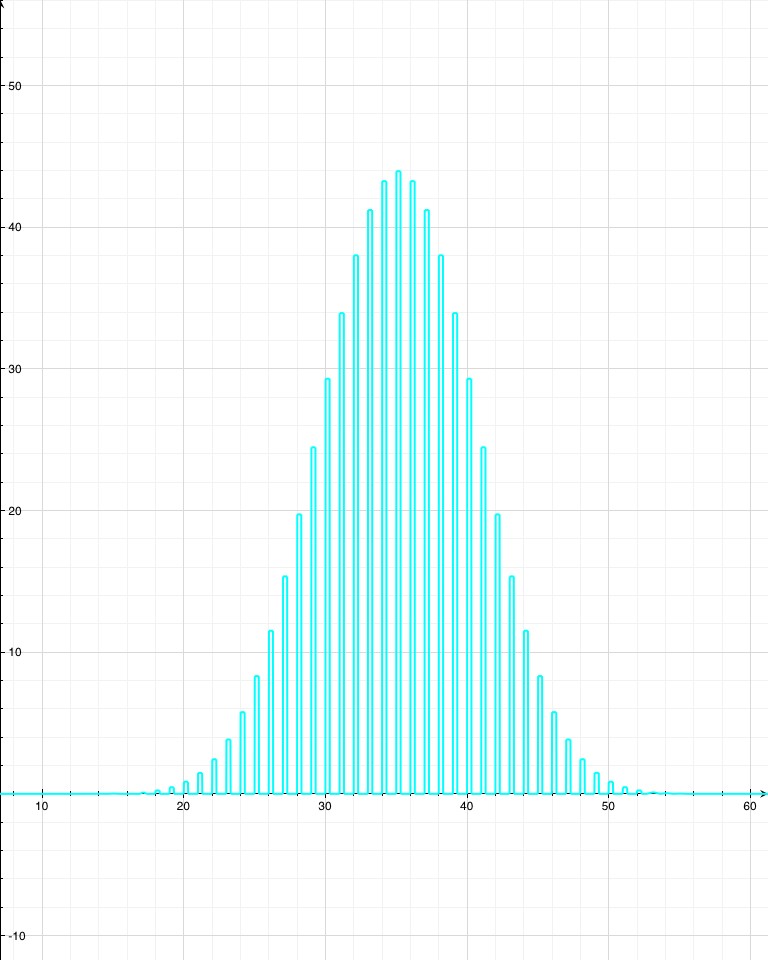

You can get around this using the fact that while the variation of a star’s intensity is mostly random, the variation due to a planet passing in front of it is extremely regular. After watching for long enough you can detect the minute, repeated dimming due to planets. That said, what you’d really like is a big planet, which blocks more light and makes the dimming more pronounced, on a short orbit, so that you can see it eclipse many times. Both make it a lot easier to tell the difference between natural variation and planet-caused variation.
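The trick of watching many transits can be sketched in Python with a simulated light curve. Everything here is hypothetical: a 3-day orbit, a 0.5% dip lasting 3 hours, and 1% noise per sample, so each individual measurement is dominated by noise. The sketch also assumes the period is already known; a real search would repeat the folding over many trial periods (for example with the box least squares method).

```python
import math
import random

random.seed(2)  # make the fake data reproducible

# Hypothetical light curve (not real data): one brightness sample every
# 30 minutes for 1,000 days. A planet with a 3-day orbit blocks 0.5% of the
# star's light for 3 hours each time around, but every sample also carries
# 1% random noise, so no individual dip stands out.
PERIOD_D = 3.0
DURATION_D = 3 / 24
DEPTH = 0.005
NOISE = 0.01
SAMPLES = 1000 * 48

times = [i / 48 for i in range(SAMPLES)]


def in_transit(t: float) -> bool:
    """True if time t (days) falls inside a transit; transits start at t = 0."""
    return t % PERIOD_D < DURATION_D


flux = [1.0 - (DEPTH if in_transit(t) else 0.0) + random.gauss(0.0, NOISE)
        for t in times]

# "Fold" the data at the planet's period: pool the in-transit samples from
# every orbit and compare their average brightness with everything else.
inside = [f for t, f in zip(times, flux) if in_transit(t)]
outside = [f for t, f in zip(times, flux) if not in_transit(t)]
measured_depth = sum(outside) / len(outside) - sum(inside) / len(inside)
# Averaging N samples shrinks random noise by a factor of sqrt(N).
uncertainty = NOISE * math.sqrt(1 / len(inside) + 1 / len(outside))

print(f"single-sample signal-to-noise: {DEPTH / NOISE:.1f}")
print(f"folded: depth = {measured_depth:.4f} +/- {uncertainty:.4f} "
      f"from {len(inside)} in-transit samples")
```

Pooling about 2,000 in-transit samples from over 300 transits shrinks the noise by a factor of roughly √2000 ≈ 45, so the 0.5% dip stands out clearly even though it is smaller than the noise in any single sample.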

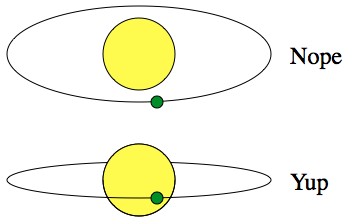

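Unfortunately, one of the drawbacks of the transit technique is that it can only ever spot a small fraction of planets: if a planet doesn’t eclipse its star from our point of view, you’ll never see it, and for a planet orbiting a Sun-like star at Earth’s distance the chance of that lucky alignment is less than 1%.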

With respect to the Earth, the orbits of exoplanets are random. Sometimes they line up so that we can see them transit, but usually not.
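The odds of a transit follow from geometry: for a randomly oriented circular orbit of radius a around a star of radius R, the chance that the planet crosses the star’s disk from our point of view is roughly R / a. The Python sketch below computes that and checks it by simulating random orbit orientations. The Sun-like star and the 0.05 AU “hot Jupiter” orbit are illustrative choices.

```python
import random

random.seed(3)  # make the simulation reproducible

SUN_RADIUS_M = 6.957e8
AU_M = 1.496e11


def transit_probability(star_radius: float, orbit_radius: float) -> float:
    """Geometric chance that a randomly oriented circular orbit transits,
    ignoring the planet's own size: R_star / a (valid when R_star << a)."""
    return star_radius / orbit_radius


def simulated_probability(star_radius: float, orbit_radius: float,
                          trials: int = 200_000) -> float:
    """Brute-force check: give the orbit a random orientation many times and
    count how often the planet's path crosses the star's disk from our view.
    For randomly oriented orbits, cos(inclination) is uniform on [0, 1]."""
    hits = 0
    for _ in range(trials):
        cos_i = random.random()
        # Closest approach to the star's center, projected on the sky: a*cos(i).
        if orbit_radius * cos_i < star_radius:
            hits += 1
    return hits / trials


earth_exact = transit_probability(SUN_RADIUS_M, AU_M)
earth_sim = simulated_probability(SUN_RADIUS_M, AU_M)
hot_exact = transit_probability(SUN_RADIUS_M, 0.05 * AU_M)
hot_sim = simulated_probability(SUN_RADIUS_M, 0.05 * AU_M)

print(f"Earth-like orbit (1 AU): {earth_exact:.2%} expected, {earth_sim:.2%} simulated")
print(f"hot Jupiter (0.05 AU):   {hot_exact:.2%} expected, {hot_sim:.2%} simulated")
```

For an Earth-like orbit this gives roughly 0.5%, while a hot Jupiter hugging its star has closer to a 9% chance, one more reason the transit technique favors big planets on short orbits.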

Finding an Earth-like planet will require a lot of patience and more powerful telescopes and telescope arrays, but that’s just an engineering problem. It’s just a matter of time (and probably not even that much!). Once found, the next challenge will be to determine whether or not the newly found tiny-as-Earth planets are covered in plants and critters. Already there are some ideas being floated that involve analyzing the atmosphere of the exoplanet to find signs of life (specifically, water vapor and oxygen). As impossible as that sounds, considering that we can’t actually take pictures of exoplanets (and again, every time you look at a star you can’t do better than a point of light) we’ve actually managed to do it a few times! Unfortunately, the first atmospheres we’ve seen were only visible because they were forming huge “comet tails” by being blown off of the surface of “hot-Jupiters” orbiting practically within high-fiving distance of their host stars. So, not Earth-like, but it is a step in the right direction.

Chances are, we’ll find an Earth-sized planet inside the Goldilocks zone of another solar system, and be able to determine whether there’s life on it, within the next few decades. Copernicus would crap himself with joy if he were alive today.