The original question was: I’ve heard somewhere that they’re also trying to build computers using molecules, like DNA. In general would it work to try and simulate a factoring algorithm using real world things, and then let the physics of the interactions stand in for the computer calculation? Since the real world does all kinds of crazy calculations in no time.

Physicist: The amount of time that goes into, say, calculating how two electrons bounce off of each other is very humbling. It’s terribly frustrating that the universe has no hang-ups about doing it so fast.

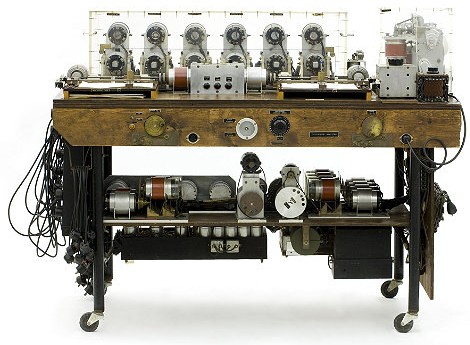

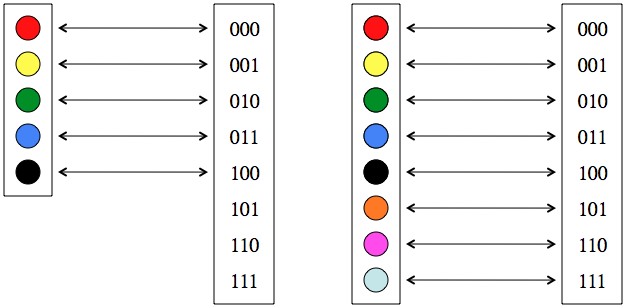

In some sense, basic physical laws are the basis of how all calculations are done. It’s just that modern computers are “Turing machines”, a very small subset of all the possible kinds of computational devices. Basically, if your calculating machine consists of the manipulation of symbols (which in turn can always be reduced to the manipulation of 1’s and 0’s), then you’re talking about Turing machines. In the early days of computer science there was a strong case for analog computers over digital computers. Analog computers use the properties of circuit elements like capacitors, inductors, or even just the layout of wiring, to do mathematical operations. In their heyday they were faster than digital computers. However, they’re difficult to design, not nearly as versatile, and they’re no longer faster.

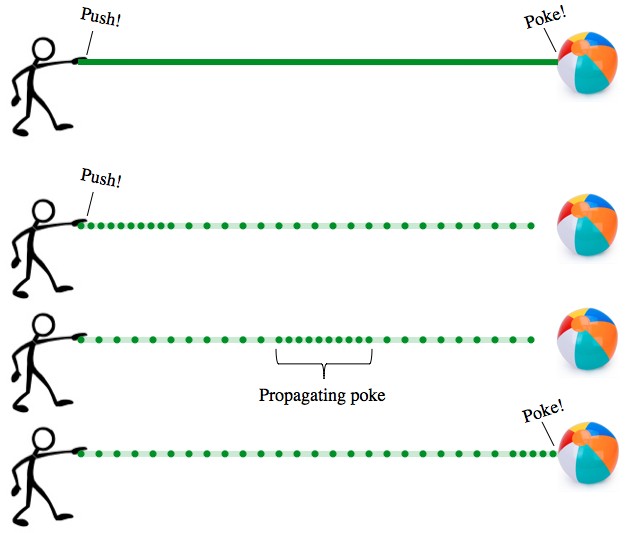

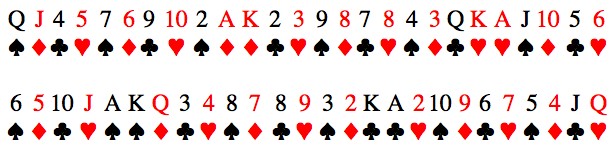

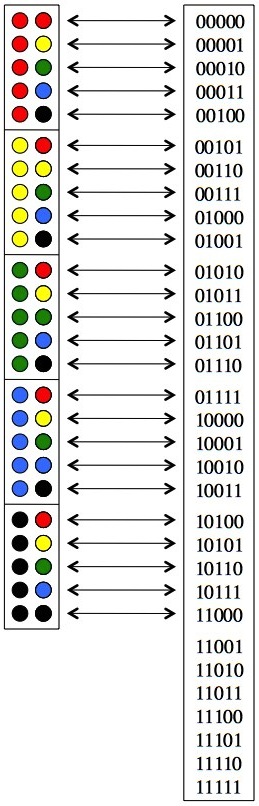

Any physical phenomenon that represents information in definite, discrete states is doing the same thing a digital computer does; it’s just a question of speed. To see other kinds of computation it’s necessary to move into non-digital kinds of information. One beautiful example is the gravity powered square root finder.

Newtonian physics used to find the square root of numbers. Put a marble next to a number, N, (white dots) on the slope, and the marble will land on the ground at a distance proportional to √N.

When you put a marble on a ramp, the horizontal distance it will travel before hitting the ground is proportional to the square root of how far up the ramp it started. Another mechanical calculator, the planimeter, can find the area of any shape just by tracing along the edge. Admittedly, a computer could do both calculations a heck of a lot faster, but they’re still decent enough examples.
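To see where the square root comes from, here is a minimal sketch of the marble's trip. It assumes a geometry the post doesn't spell out: the marble starts at rest, rolls without slipping, leaves the end of the ramp moving horizontally, and then falls a fixed height to the ground with no air resistance. The names `landing_distance`, `start_height` and `drop_height` are made up for illustration.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2 (it cancels out of the final distance)

def landing_distance(start_height, drop_height=1.0, rolling=True):
    """Horizontal distance covered after the marble leaves the ramp.

    start_height: height of the starting point above the end of the ramp (m)
    drop_height:  assumed height of the end of the ramp above the ground (m)
    """
    # Energy conservation gives v^2 = 2*g*h for a sliding block and
    # v^2 = (10/7)*g*h for a solid sphere rolling without slipping.
    factor = 10.0 / 7.0 if rolling else 2.0
    launch_speed = math.sqrt(factor * G * start_height)
    # Time to fall drop_height starting with zero vertical speed.
    fall_time = math.sqrt(2.0 * drop_height / G)
    return launch_speed * fall_time

# Starting 4 times higher should throw the marble 2 times farther,
# and 9 times higher should throw it 3 times farther.
base = landing_distance(0.1)
ratio_4 = landing_distance(0.4) / base
ratio_9 = landing_distance(0.9) / base
```

Whether the marble slides or rolls only changes the constant out front, so the marks on the ramp still line up with √N either way.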

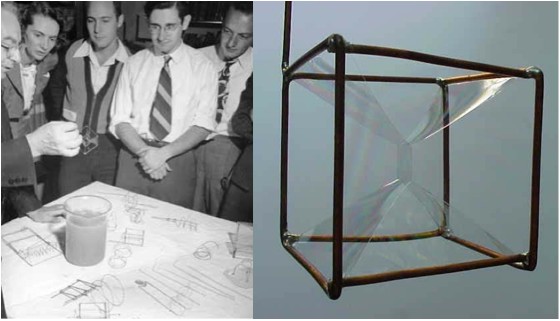

Despite the power of digital computers, it doesn’t take much looking around to find problems that can’t be efficiently done on them, but that can be done using more “natural” devices. For example, solutions to “harmonic functions with Dirichlet boundary conditions” (soap films) can be fiendishly difficult to calculate in general. The huge range of possible shapes that the solutions can take means that often even the most reasonable computer program (capable of running in any reasonable time) will fail to find all the solutions.

So, rather than burning through miles of chalkboards and swimming pools of coffee, you can bend wires to fit the boundary conditions, dip them in soapy water, and see what you get. One of the advantages, not generally mentioned in the literature, is that playing with bubbles is fun.
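For a feel of what the digital version of the soap film looks like, here is a rough sketch of the friendliest possible case: a nearly flat film over a square frame, treated as a harmonic function on a grid and solved by Jacobi relaxation (keep replacing each interior point with the average of its four neighbors). The frame shape, grid size, and the names `relax_laplace` and `square_frame` are all assumptions for illustration, and this does nothing for the hard cases mentioned above.

```python
def relax_laplace(grid, sweeps=500):
    """Jacobi relaxation for Laplace's equation with fixed (Dirichlet) edges.

    grid is a list of lists; the outer ring holds the boundary values and
    is never changed. Interior values are repeatedly replaced by the mean
    of their four neighbors.
    """
    rows, cols = len(grid), len(grid[0])
    current = [row[:] for row in grid]
    for _ in range(sweeps):
        updated = [row[:] for row in current]
        for i in range(1, rows - 1):
            for j in range(1, cols - 1):
                updated[i][j] = 0.25 * (
                    current[i - 1][j] + current[i + 1][j]
                    + current[i][j - 1] + current[i][j + 1]
                )
        current = updated
    return current

def square_frame(n):
    """Hypothetical wire frame: one edge lifted to height 1, the rest at 0."""
    grid = [[0.0] * n for _ in range(n)]
    grid[0] = [1.0] * n
    return grid

film = relax_laplace(square_frame(11))
center_height = film[5][5]  # height of the film in the middle of the frame
```

Even this toy needs hundreds of sweeps over the grid to settle down, while the real film settles in the time it takes to pull the wire out of the bucket.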

Today we’re seeing the advent of a new type of computer, the quantum computer, which is kinda-digital/kinda-analog. Using quantum mechanical properties like superposition and entanglement, quantum computers can (or would, if we can get them off the ground) solve problems that would take even very powerful normal computers a tremendously long time to solve, like integer factorization. “Long time” here means that the heat death of the universe becomes a concern. Long time.
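To get a sense of why factoring is slow on a normal computer, here is a sketch of the most naive method, trial division, with a counter for how many divisions it tries. This is only a baseline for illustration (the function name `trial_division` is made up): it is not what a quantum computer would run, and the best known classical methods are much smarter, though none is known to run in time polynomial in the number of digits.

```python
def trial_division(n):
    """Factor n by trying divisors 2, 3, 4, ... up to sqrt(n).

    Returns (factors, steps): the prime factors with multiplicity,
    and the number of divisions that were attempted.
    """
    factors, steps = [], 0
    d = 2
    while d * d <= n:
        steps += 1
        if n % d == 0:
            factors.append(d)
            n //= d  # strip this factor and try the same divisor again
        else:
            d += 1
    if n > 1:
        factors.append(n)  # whatever is left over is prime
    return factors, steps

small_factors, small_steps = trial_division(15)
big_factors, big_steps = trial_division(101 * 103)
```

When the number is a product of two similar-sized primes, the step count grows roughly like √N, so every two extra digits in N mean about ten times more work. That is what turns a few hundred digits into a heat-death-of-the-universe problem for this particular method.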

Aside from actual computers, you can think of the universe itself, in a… sideways, philosophical sense, as doing simulations of itself that we can use to understand it. For example, one of the more common questions we get is along the lines of “how do scientists calculate the probability/energy of such-and-such chemical/nuclear reaction”. There are certainly methods to do the calculations (have Schrödinger equation, will travel), but really, if you want to get it right (and often save time), the best way to do the calculation is to let nature do it. That is, the best way to calculate atomic spectra, or how hot fire is, or how stable an isotope is, or whatever, is to go to the lab and just measure it.

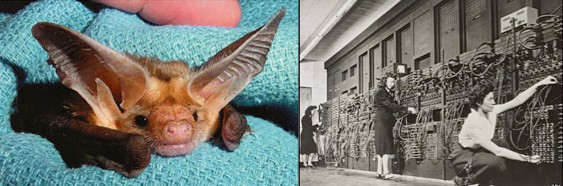

Even cooler, a lot of optimization problems can be solved by looking at the biological world. Evolution is, ideally, a process of optimization (though not always). During the early development of sonar and radar there were (still are) a number of questions about what kind of “ping” would return the greatest amount of information about the target. After a hell of a lot of effort it was found that the researchers had managed to re-create the sonar ping of several bat species. Bats are still studied as the result of what the universe has already “calculated” about optimal sonar techniques.