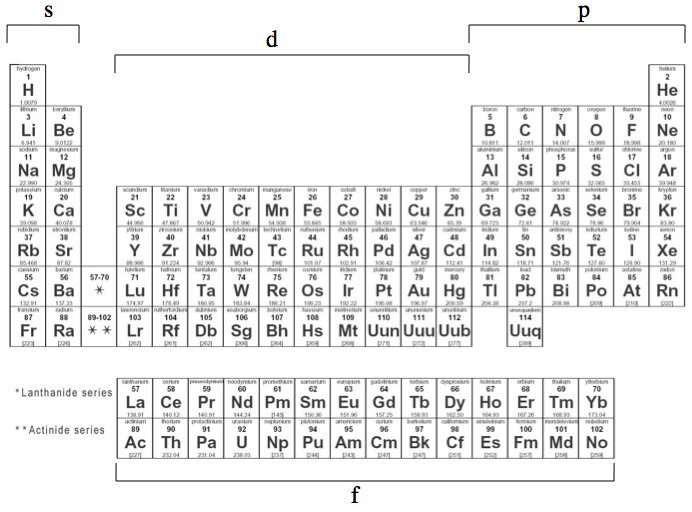

Physicist: That’s a really tricky question to answer without falling back on angular momentum. So, without getting into that:

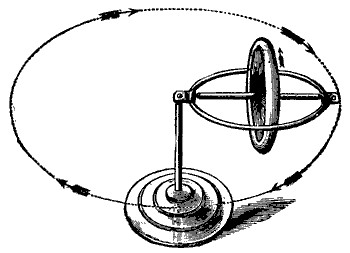

Gyroscopes, tops, and everything that spins are seriously contrarian dudes. If you try to rotate them in a direction that they’re not already turning, they’ll squirm off in another direction. When a top starts to fall over it’s being physically rotated by gravity. But rather than falling, it moves sideways, resulting in “precession”.

The gyroscope (or top or whatever) spins in one direction, gravity tries to rotate it in a second direction, but it actually ends up turning in the third direction. Gyroscopes don’t go with gravity (and fall), or even against gravity; they go sideways.

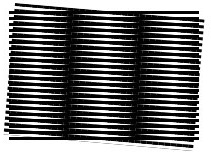

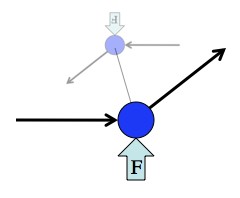

So answering this question boils down to explaining why trying to rotate a gyroscope in a direction it’s not already spinning results in it twisting in the remaining direction. To picture the mechanics behind spinning stuff, and even to derive the math behind them, physicists think of a spinning object as a bunch of tiny weights tied to each other. So rather than picturing an entire disk, just picture two weights attached to each other by a thread, spinning. The circle they trace out defines the “plane of rotation”. This stuff isn’t complicated, but it is a challenge to picture it in your head all at once, so bear with me.

When you turn something you’re applying “torque”, which is the rotational version of force. But torque is a little more complicated than plain ol’ force. In order to turn something you have to push on one side and pull on the other. When a passing weight experiences a force it changes direction. Nothing surprising.

Any spinning object can be thought of as a bunch of small weights spinning around each other. From this perspective the weird behavior of gyroscopes comes closer to making sense.

But when you apply a “push” to one of the weights and a “pull” to the other, you find that that entirely reasonable change in direction causes them both to change direction in such a way that the plane of rotation changes, in a seemingly unreasonable way.

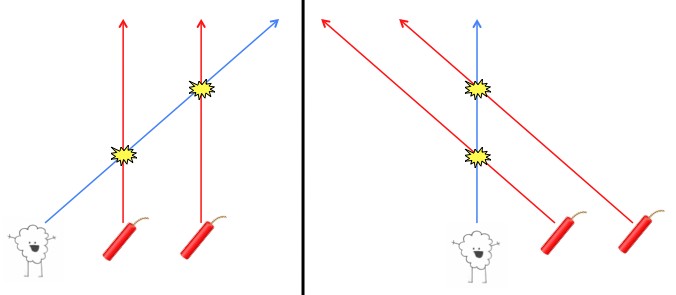

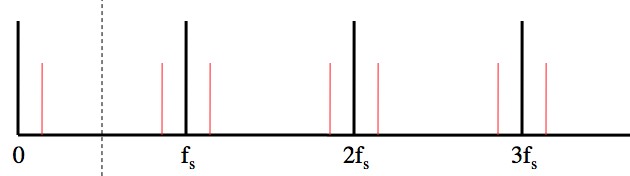

So, say you have a disk on a table in front of you (a record if you’re 30+, a CD if you’re 20-something, or a hard disk if you’re in your teens) and it’s spinning counter-clockwise. If you grab it and try to “roll it forward”, it will actually pitch left (picture above).
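If you’d rather check that with numbers than with mental gymnastics, here’s a minimal Python sketch of the two-weight picture. The axis convention (x to your right, y away from you, z up), the unit spin speed, and the size of the kick are all assumptions made for illustration. It leans on one fact: the thread only pulls toward the center, so after a kick each weight keeps circling in the plane that contains its position and its new velocity.

```python
# Assumed axes: x = to your right, y = away from you, z = up.

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def add(a, b):
    """Component-wise sum of two 3-vectors."""
    return tuple(x + y for x, y in zip(a, b))

KICK = 0.1  # size of the brief push/pull compared to a spin speed of 1 (assumed)

# Two weights on a disk spinning counter-clockwise (seen from above).
far_pos, far_vel = (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0)    # far edge, moving left
near_pos, near_vel = (0.0, -1.0, 0.0), (1.0, 0.0, 0.0)  # near edge, moving right

# "Rolling it forward" pushes the far edge down and pulls the near edge up.
far_vel_after = add(far_vel, (0.0, 0.0, -KICK))
near_vel_after = add(near_vel, (0.0, 0.0, KICK))

# The normal of the plane containing a weight's position and velocity.
normal_before = cross(far_pos, far_vel)
normal_far = cross(far_pos, far_vel_after)
normal_near = cross(near_pos, near_vel_after)

print("plane normal before the kick:", normal_before)
print("plane normal after, far weight:", normal_far)
print("plane normal after, near weight:", normal_near)
```

Both weights agree on the new plane. The kicks were straight down and straight up (an attempt to tip the disk forward), but the normal of the plane picks up a negative x component and no y component at all. In other words, the disk dips on the left instead of tipping forward.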

Harder to visualize: in the top picture of this post the gyroscope is turning forward, gravity is trying to pull it to the right, and as a result the gyroscope’s plane of rotation itself rotates.

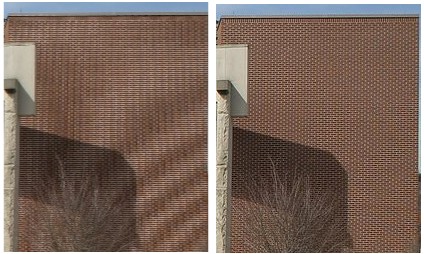

In the case of actual tops, the base is free to move around instead of just pivoting around a fixed location like in the gyroscope examples so far. It so happens that when a top starts to fall over, the precession of its plane of rotation makes the top trace out a little circle that it’s leaning into. If the top is spinning fast enough, that tends to bring the point at its base back under the top (take a look at the gyroscope picture at the top of this post and imagine that it was free to move). The freedom to move around actually makes tops self-correcting!

On the one hand, that’s great because tops are more fun than bricks and dreidels are more interesting than dice. On the other hand, the same self-correcting effect (applied to rolling wheels) can cause bikes and motorcycles to keep going long after you’ve fallen off of them.

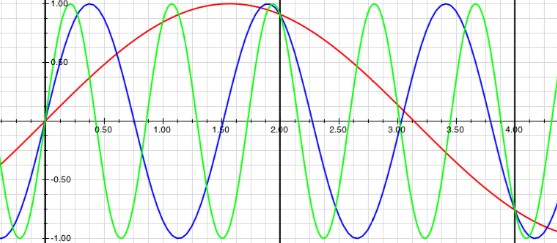

Answer gravy: The quick and dirty physics explanation is that angular momentum is conserved and, like regular momentum, it has both a quantity and a direction, and both are conserved. For example, with regular momentum, two identical cars traveling at 60 mph west have equal momentum, but if one of those two identical cars is traveling east at 60 mph and the other is traveling west at 60 mph, then their momentum is definitely not equal.

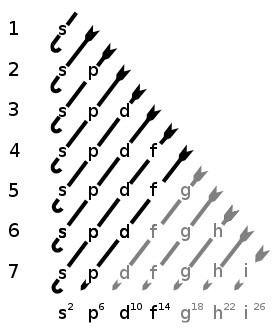

For angular momentum, you find the “direction” by curling the fingers of your right hand in the direction of rotation; your thumb then points in the direction of the angular momentum vector (this is called the “right hand rule”). For example, the hands on a clock are spinning clockwise, so their angular momentum points into the face of the clock.
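The right hand rule is just the cross product in disguise: for a single weight, angular momentum is position crossed with momentum. Here’s a small Python sketch of the clock example. The coordinate setup (x toward 3 o’clock, y toward 12 o’clock, z pointing out of the face at you) and the unit mass and speed are assumptions chosen to keep the numbers simple.

```python
# Assumed axes: x = toward 3 o'clock, y = toward 12 o'clock,
# z = out of the clock face, toward the viewer.

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

mass = 1.0                      # arbitrary illustrative mass for the tip of the hand
tip_position = (0.0, 1.0, 0.0)  # the hand is pointing at 12 o'clock
tip_velocity = (1.0, 0.0, 0.0)  # clockwise, so it's heading toward 3 o'clock

momentum = tuple(mass * v for v in tip_velocity)
angular_momentum = cross(tip_position, momentum)

# Negative z means "away from the viewer", i.e. into the face.
direction = "into the face" if angular_momentum[2] < 0 else "out of the face"
print(angular_momentum, direction)
```

Flip the sign of `tip_velocity` (a clock running backwards) and the vector flips to point out of the face, which is exactly what your thumb does when you curl your fingers the other way.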

In order for a top to fall over its angular momentum needs to go from pointing vertically (either up or down, depending on which direction it’s spinning) to pointing sideways.

So, in a cheating nutshell, tops stay upright because falling over would violate conservation of angular momentum. Of course, a real top will eventually fall over due to torque and friction. The torque (from gravity) creates a greater and greater component of angular momentum pointing horizontally, and the friction slows the top and decreases the vertical component of its angular momentum. Once the angular momentum vector (which points along the axis of rotation) is horizontal enough, the sides of the top will physically touch the ground.
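For anyone who wants the formula behind the sideways motion, here is a sketch under idealized assumptions: a fixed pivot point, no friction, and a top spinning fast enough that its angular momentum lies along its axis. The symbols are not from the discussion above and are introduced here for illustration: $m$ is the top’s mass, $g$ is the acceleration of gravity, $\vec{r}$ runs from the pivot to the center of mass, $I$ is the moment of inertia about the spin axis, $\omega$ is the spin rate, and $\theta$ is the tilt of the axis away from vertical.

$$
\vec{\tau} = \frac{d\vec{L}}{dt}, \qquad \vec{\tau} = \vec{r} \times m\vec{g}, \qquad |\vec{\tau}| = m g r \sin\theta
$$

Because $\vec{\tau}$ is perpendicular to $\vec{r}$, and $\vec{L} \approx I\omega\,\hat{r}$ points along $\vec{r}$, the torque can only swing $\vec{L}$ around; it can’t make it longer or shorter. If the tip of $\vec{L}$ circles the vertical at a rate $\Omega$, then $|d\vec{L}/dt| = \Omega\, I \omega \sin\theta$, and setting that equal to the torque gives

$$
\Omega = \frac{m g r}{I \omega}
$$

The tilt angle cancels out, and the spin rate ends up in the denominator, so in this idealized picture a top that is slowing down precesses faster.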

There are some subtleties involving the fact that the “moment of inertia” (which basically takes the place of mass in angular physics) isn’t as simple as a single number. Essentially, tops prefer to spin on a very particular axis, which makes the whole situation much easier to think about. However, for centuries creative top makers have been making tops with very strange moments of inertia that cause the tops to flip or drift between preferred axes, which makes for a pretty happening 18th century party.