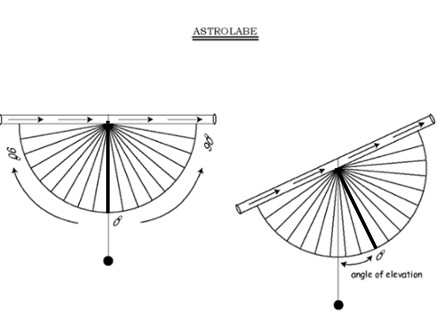

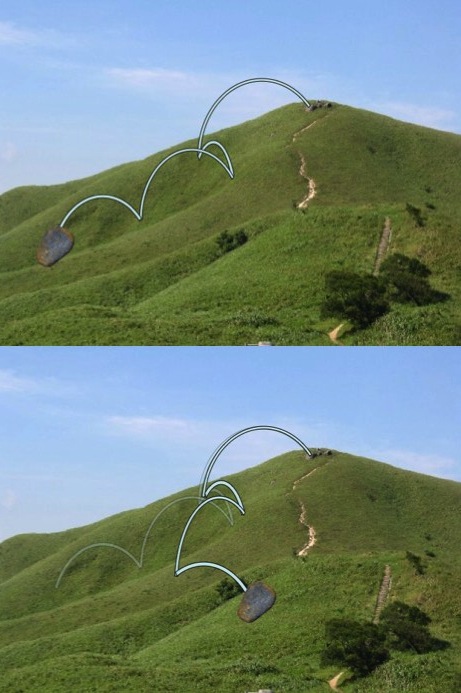

The original question was: I am a Physics teacher wanting to measure the height of a rocket. Three measurers are standing at the corners of an equilateral triangle on flat ground. Each of them measures the angle from horizontal to the highest point of the flight.

In other words I want a formula giving the perpendicular height of a point above a horizontal equilateral triangle in terms of the side length of the triangle and the 3 angles at each corner of the triangle between horizontal and the line to the point. Even better would be a solution where the lengths of the sides of the triangle were not equal.

Physicist: If the rocket doesn’t arc too much, then you can find the maximum height of a rocket by just standing around in a field and measuring the largest angle between the horizon and the rocket. If somehow you knew the north-south and east-west position of the rocket at the top of its flight, then you’d only need one angle measurement. But if you don’t know what point on the ground the rocket is above, then things get difficult.
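For that easy case, the height (above the observer's eye level) is just the horizontal distance to the spot under the rocket times the tangent of the measured angle. A minimal sketch, where the helper name `height_from_one_angle` is made up for illustration:

```python
import math

def height_from_one_angle(horizontal_distance, angle_deg):
    """Height of a point seen at elevation angle_deg from horizontal_distance away."""
    return horizontal_distance * math.tan(math.radians(angle_deg))

print(height_from_one_angle(100.0, 45.0))  # roughly 100, since tan(45°) = 1
```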

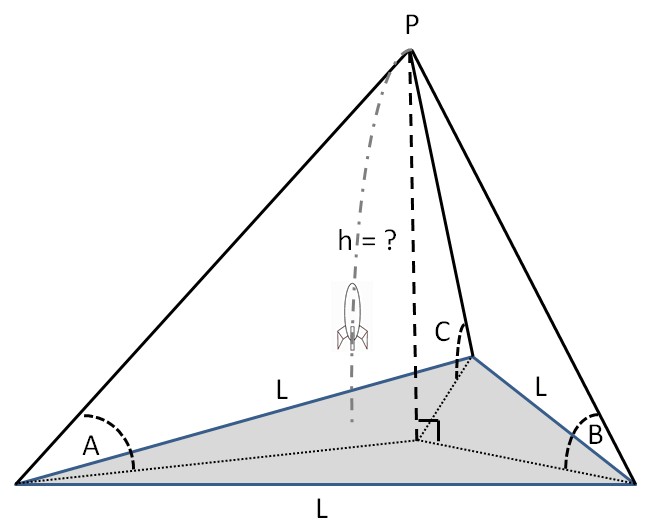

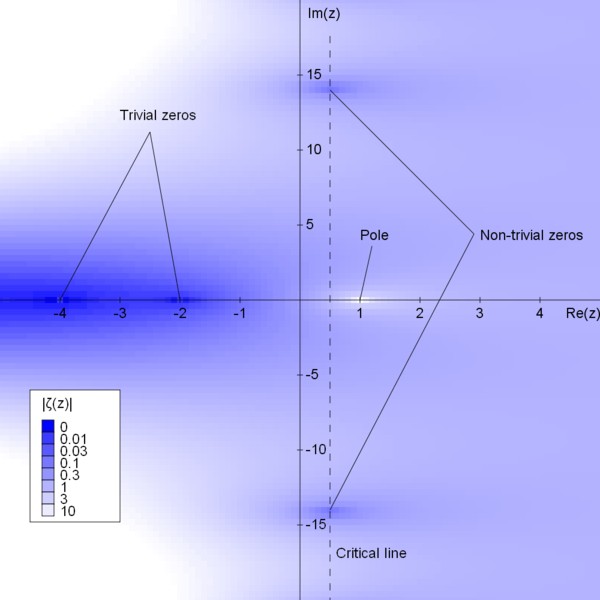

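In general, if three students (or people in general) are arranged in an equilateral triangle of side length L, and they measure angles A, B, and C, then the height of the rocket, h, is given by:

$$h = L\sqrt{\frac{\alpha+\beta+\gamma \pm \sqrt{6(\alpha\beta+\beta\gamma+\gamma\alpha)-3(\alpha^2+\beta^2+\gamma^2)}}{2\left(\alpha^2+\beta^2+\gamma^2-\alpha\beta-\beta\gamma-\gamma\alpha\right)}}$$

where $\alpha = \cot^2(A)$,

$\beta = \cot^2(B)$, and

$\gamma = \cot^2(C)$.

If the bottom of that equation happens to be zero (which only happens when A = B = C, meaning the rocket is directly above the center of the triangle), then rather than everything breaking, you get to use an easier, special-case equation: $h = \frac{L\tan(A)}{\sqrt{3}}$.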
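Here's a minimal Python sketch of that formula (the name `rocket_heights` is just for illustration). It assumes angles in degrees strictly between 0° and 90°, returns both “±” answers, and computes the “−” branch in an algebraically equivalent rationalized form, $2L^2/(\alpha+\beta+\gamma+\sqrt{\cdots})$ under the outer root, so that it slides smoothly into the special case when the bottom goes to zero.

```python
import math

def rocket_heights(L, A_deg, B_deg, C_deg):
    """Candidate heights from the equilateral-triangle formula.

    L is the side length; A_deg, B_deg, C_deg are the elevation angles in degrees
    (strictly between 0 and 90) measured at the three corners.
    Returns [smaller_height] or [smaller_height, larger_height].
    """
    alpha, beta, gamma = (1.0 / math.tan(math.radians(a)) ** 2
                          for a in (A_deg, B_deg, C_deg))  # cot^2 of each angle
    s = alpha + beta + gamma
    d = alpha**2 + beta**2 + gamma**2 - alpha * beta - beta * gamma - gamma * alpha
    disc = 6 * (alpha * beta + beta * gamma + gamma * alpha) - 3 * (alpha**2 + beta**2 + gamma**2)
    if disc < -1e-12 * s**2:
        raise ValueError("no real solution; the three angles are inconsistent")
    root = math.sqrt(max(disc, 0.0))  # clip tiny negative rounding noise to zero
    # "Minus" branch, rationalized: (s - root) / (2d) == 2 / (s + root).
    # It stays finite as d -> 0, where it becomes L / sqrt(s) = L tan(A) / sqrt(3).
    heights = [L * math.sqrt(2.0 / (s + root))]
    if d > 1e-12 * s**2:
        # "Plus" branch; it runs off to infinity as d -> 0, so only add it otherwise.
        heights.append(L * math.sqrt((s + root) / (2.0 * d)))
    return heights
```

Heights come out in whatever unit L is measured in.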

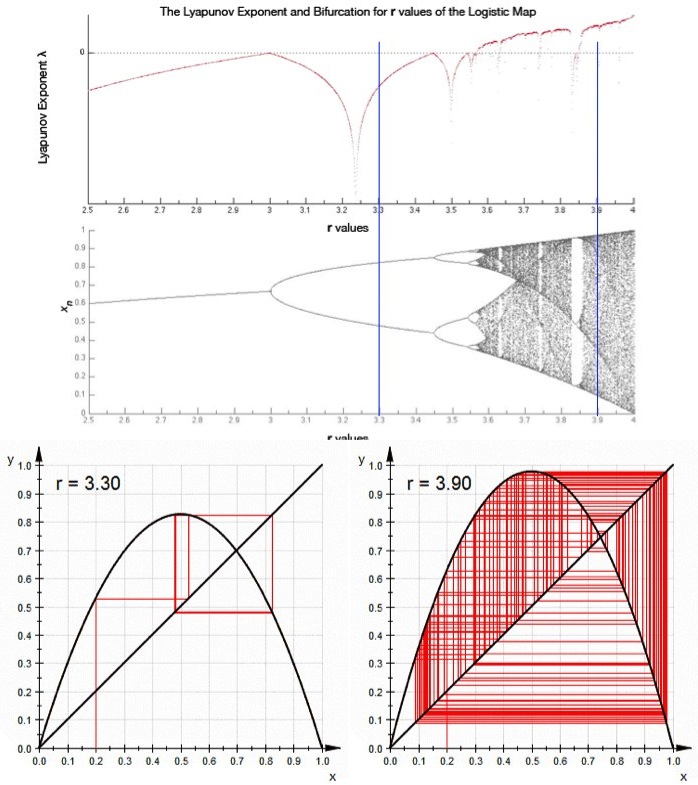

The advantage of an equilateral triangle is that it’s easy to construct: get three equal lengths of rope, have the three students each take the ends of two ropes, and then walk until the ropes are taut. The “±” does lend some uncertainty to the measurement, but unfortunately there’s no easy way around it. Each angle measurement puts the rocket somewhere on a cone centered on that student, and three cones generally intersect at two points (solving for the intersection of three cones is pretty much what this is about). If you can’t disregard one of the answers as ridiculous (like one answer is 5 m above the ground, but the rocket clearly went higher), then taking the average of the two reasonable answers is easy enough.
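If neither answer is obviously ridiculous, one possible tie-breaker (a suggestion, not part of the original recipe) is to check where on the ground each candidate height puts the rocket. The sketch below assumes the corners sit at U = (0, 0), V = (L, 0), W = (L/2, √3L/2), with A, B, C measured at U, V, W; the expressions for x and y come from subtracting the cone equations pairwise, as in the Answer gravy. The function name `ground_position` is hypothetical.

```python
import math

def ground_position(L, A_deg, B_deg, C_deg, h):
    """Point on the ground under the rocket for a candidate height h.

    Assumes corners U = (0, 0), V = (L, 0), W = (L/2, sqrt(3) L / 2),
    with elevation angles A, B, C (degrees) measured at U, V, W.
    """
    alpha, beta, gamma = (1.0 / math.tan(math.radians(a)) ** 2
                          for a in (A_deg, B_deg, C_deg))  # cot^2 of each angle
    t = h * h
    x = (L**2 + (alpha - beta) * t) / (2 * L)
    y = (L**2 + (alpha + beta - 2 * gamma) * t) / (2 * math.sqrt(3) * L)
    return x, y
```

A candidate whose ground point lands far from where the rocket was launched is the one to be suspicious of.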

Answer gravy: Here’s how you do it (with a little vector math).

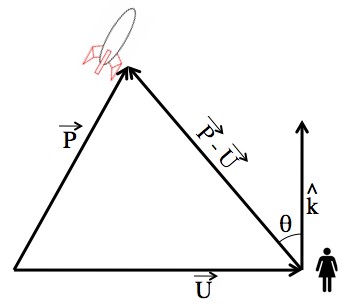

The vector that points from the end of one vector to the end of another is given by the difference between those vectors, and the angle, θ, between any two vectors can be found using the infamous dot product formula: $\cos(\theta) = \frac{\vec{a}\cdot\vec{b}}{|\vec{a}|\,|\vec{b}|}$.

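Say the students are standing at positions $\vec{U} = (U_1, U_2, 0)$, $\vec{V} = (V_1, V_2, 0)$, and $\vec{W} = (W_1, W_2, 0)$, and the rocket is at $\vec{P} = (P_1, P_2, h)$. Then the direction that each student is looking when they look at the rocket is given by $\vec{P}-\vec{U}$, $\vec{P}-\vec{V}$, and $\vec{P}-\vec{W}$.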

A mess of vectors that describe the relatively simple situation of standing on the ground and looking at a rocket, or up ($\hat{k}$).

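So, $\cos(\theta) = \frac{(\vec{P}-\vec{U})\cdot\hat{k}}{|\vec{P}-\vec{U}|\,|\hat{k}|}$, where θ is the angle between the student’s line of sight and straight up.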

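But we’re really interested in the angle from horizontal, A, which is A = 90° - θ. Conveniently, cos(θ) = cos(90° - A) = sin(A). Also, keep in mind that $|\hat{k}| = 1$ and that, since all of the students are on the ground,

$$(\vec{P}-\vec{U})\cdot\hat{k} = h \qquad\text{and}\qquad |\vec{P}-\vec{U}| = \sqrt{(P_1-U_1)^2+(P_2-U_2)^2+h^2}.$$

So $\sin(A) = \frac{h}{\sqrt{(P_1-U_1)^2+(P_2-U_2)^2+h^2}}$. Squaring and rearranging gives $\sin^2(A)\left[(P_1-U_1)^2+(P_2-U_2)^2\right] = h^2\cos^2(A)$, or

$$(P_1-U_1)^2+(P_2-U_2)^2 = h^2\cot^2(A).$$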

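Cleans up nice! This is just the equation for the student standing at position “U” making measurement “A”. There are two more, for “V” and “W”:

$$\begin{aligned}
(P_1-V_1)^2+(P_2-V_2)^2 &= h^2\cot^2(B)\\
(P_1-W_1)^2+(P_2-W_2)^2 &= h^2\cot^2(C)
\end{aligned}$$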
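As a numerical sanity check of that cleanup, here's a small sketch with made-up student and rocket positions. It computes A from the dot product exactly as above, with $\hat{k} = (0, 0, 1)$, and then compares both sides of the equation for “U”. The helper name `elevation_angle` is hypothetical.

```python
import math

def elevation_angle(U, P):
    """Angle from horizontal (radians) at which a student at U sees the point P.

    Gets theta from the dot product with straight up, k = (0, 0, 1), then A = 90° - theta.
    """
    d = [p - u for p, u in zip(P, U)]        # direction P - U
    k = (0.0, 0.0, 1.0)                       # unit vector pointing up, |k| = 1
    cos_theta = sum(a * b for a, b in zip(d, k)) / math.sqrt(sum(a * a for a in d))
    return math.pi / 2 - math.acos(cos_theta)

U = (10.0, -5.0, 0.0)      # a student on the ground (made-up position)
P = (40.0, 35.0, 120.0)    # the rocket at its highest point (made-up position)
A = elevation_angle(U, P)
lhs = (P[0] - U[0]) ** 2 + (P[1] - U[1]) ** 2   # squared horizontal distance
rhs = P[2] ** 2 / math.tan(A) ** 2              # h^2 cot^2(A)
print(lhs, rhs)  # the two agree up to floating-point rounding
```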

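With three equations and three unknowns (P1, P2, and h), there should be enough to solve for h. You could also solve for P1 and P2, but there’s no point to it. Solving this problem for arbitrary student positions is horrifying. But in the special case of an equilateral triangle it’s not terrible. Take $\vec{U} = (0, 0, 0)$,

$\vec{V} = (L, 0, 0)$, and

$\vec{W} = \left(\tfrac{L}{2}, \tfrac{\sqrt{3}}{2}L, 0\right)$. So:

$$\begin{aligned}
P_1^2+P_2^2 &= h^2\cot^2(A)\\
(P_1-L)^2+P_2^2 &= h^2\cot^2(B)\\
\left(P_1-\tfrac{L}{2}\right)^2+\left(P_2-\tfrac{\sqrt{3}}{2}L\right)^2 &= h^2\cot^2(C)
\end{aligned}$$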

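After an algebra blizzard (subtract the first equation from the other two to get P1 and P2 in terms of h², then plug those back into the first equation), you can finally get to a quadratic in h²:

$$\left(\alpha^2+\beta^2+\gamma^2-\alpha\beta-\beta\gamma-\gamma\alpha\right)h^4-(\alpha+\beta+\gamma)L^2h^2+L^4=0$$

where $\alpha=\cot^2(A)$, $\beta=\cot^2(B)$, and $\gamma=\cot^2(C)$.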
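Since it’s easy to slip somewhere in an algebra blizzard, here’s a quick numerical check of that quadratic with made-up numbers: put a rocket at a known spot above a triangle with corners (0, 0), (L, 0), and (L/2, √3L/2), get α, β, γ straight from the geometry (cot(A) is just the horizontal distance divided by h), and confirm that h² satisfies the equation.

```python
import math

# Made-up test geometry: side L, rocket at (x, y, h), corners U, V, W.
L = 100.0
x, y, h = 30.0, 20.0, 150.0
corners = [(0.0, 0.0), (L, 0.0), (L / 2, math.sqrt(3) * L / 2)]

# cot(angle) = horizontal distance / h, so each cot^2 comes directly from the geometry.
alpha, beta, gamma = ((math.hypot(x - cx, y - cy) / h) ** 2 for cx, cy in corners)

d = alpha**2 + beta**2 + gamma**2 - alpha * beta - beta * gamma - gamma * alpha
s = alpha + beta + gamma
residual = d * h**4 - s * L**2 * h**2 + L**4
print(residual / L**4)  # ~0, up to floating-point rounding
```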

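A little quadratic formula, and boom! You get the equation near the top of this post. By the way, in the very unlikely event that $\cot^2(A)=\cot^2(B)=\cot^2(C)$ (that is, A = B = C), the bottom of that equation is zero, the $h^4$ term vanishes, and

$$h = \frac{L}{\sqrt{3\cot^2(A)}} = \frac{L\tan(A)}{\sqrt{3}}.$$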
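As for the “even better” version from the question, with sides that aren’t equal: the algebra is horrifying by hand, but a computer doesn’t mind. Here’s a sketch (the name `rocket_candidates` is made up) that follows the same route as the equilateral case: subtract the first cone equation from the other two to get P1 and P2 as linear functions of h², plug those into the first equation, and solve the resulting quadratic. It assumes the three students aren’t standing on one straight line.

```python
import math

def rocket_candidates(positions, angles_deg):
    """Candidate (h, x, y) for three students at arbitrary ground positions.

    positions: three (x, y) ground coordinates, not all on one line.
    angles_deg: the elevation angle measured at each position (0 < angle < 90).
    """
    (u1, v1), (u2, v2), (u3, v3) = positions
    k1, k2, k3 = (1.0 / math.tan(math.radians(a)) ** 2 for a in angles_deg)  # cot^2

    # Equation i: (x - ui)^2 + (y - vi)^2 = ki * t, with t = h^2.
    # Subtracting equation 1 from equations 2 and 3 leaves two equations that are
    # linear in x and y:  [m11 m12; m21 m22] [x; y] = c0 + c1 * t.
    m11, m12 = 2 * (u2 - u1), 2 * (v2 - v1)
    m21, m22 = 2 * (u3 - u1), 2 * (v3 - v1)
    det = m11 * m22 - m12 * m21
    if det == 0:
        raise ValueError("the three positions must not lie on one line")
    c0 = (u2**2 + v2**2 - u1**2 - v1**2, u3**2 + v3**2 - u1**2 - v1**2)
    c1 = (k1 - k2, k1 - k3)

    def cramer(r1, r2):
        # Solve the 2x2 system above for the right-hand side (r1, r2).
        return (r1 * m22 - m12 * r2) / det, (m11 * r2 - r1 * m21) / det

    x0, y0 = cramer(*c0)  # the part of (x, y) that doesn't depend on t
    x1, y1 = cramer(*c1)  # the part proportional to t

    # Plug x = x0 + x1 t, y = y0 + y1 t into equation 1: qa t^2 + qb t + qc = 0.
    a0, b0 = x0 - u1, y0 - v1
    qa = x1**2 + y1**2
    qb = 2 * (a0 * x1 + b0 * y1) - k1
    qc = a0**2 + b0**2
    disc = qb**2 - 4 * qa * qc
    if disc < 0:
        raise ValueError("no real solution; the angles are inconsistent")
    # Cancellation-free form of the quadratic formula (roots are qc/q and q/qa).
    q = -0.5 * (qb + math.copysign(math.sqrt(disc), qb))
    if q == 0:
        raise ValueError("degenerate measurements")
    ts = [qc / q] + ([q / qa] if qa != 0 else [])
    return [(math.sqrt(t), x0 + x1 * t, y0 + y1 * t) for t in ts if t > 0]
```

Like the equilateral formula, this generally returns two candidates, and the same judgment call about which one is ridiculous applies.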

By the way, it’s easy for errors to creep into this type of measurement, and for middle school students to get, like, way bored. To avoid both, it may help to have several students at each of the three locations to get redundant measurements.