Physicist: This was an email correspondence that was too interesting to abandon, but covered so much ground that it didn’t easily parse into just a couple of questions.

Q: What force is the ‘kinetic’ force? If 2 particles bounce off each other (can particles do that anyway?), what force carrier is exchanged to change the momentum of the particles?

A: All the force carriers carry momentum. Particles on their own don’t bump into each other; they need some kind of “mediating particle” to do the bumping for them.

Q: What is the current accepted notion of proton decay?

A: From what I gather, the decay of protons is possible in theory, but (according to theory) so unlikely during any reasonable time span that we’ll never see it.

Q: What is the chance of 4th generation particles existing (Leptons other than electrons, muons, and tauons)?

A: Maybe? They would need to have amazingly high mass to have eluded detection so far (more on that below).

Q: What is chirality and helicity? And (I believe related): What is polarization and spin?

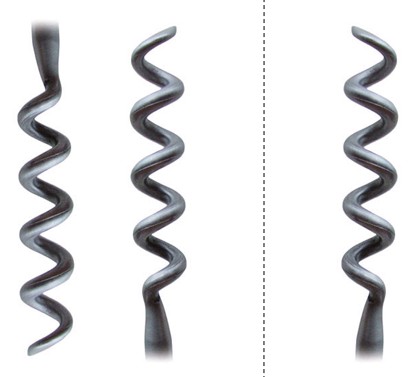

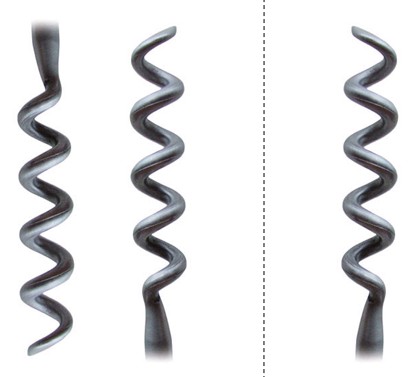

A: Chirality is essentially the “handedness” of a thing. Corkscrews and DNA are great examples. You’ll notice that when you try to get a corkscrew into a cork you have to turn it in a particular direction, but if you were to turn it around (push it into a cork backward) you still turn it the same way (it helps to be holding a corkscrew for this to make sense).

If you imagine tracing the path of the corkscrews on the left with your finger, you'll notice that there's no difference between them. They always turn in the same direction, regardless of orientation. But, if you reflect the corkscrew (right), then the direction of the twist reverses. Things that switch when reflected have "chirality".

The corkscrew has a definite direction it needs to turn, regardless of its orientation. It has a particular “chirality” (or in this case “helicity”). If you look at the reflection of that same corkscrew in a mirror you’ll notice that it has the opposite chirality. To prove it, try opening a bottle of wine while looking in the mirror. Although it sounds a little like a “Silence of the Lambs” thing to do, you’ll notice that while your corkscrew turns one way, the reflection turns the other way.

Jame "Buffalo Bill" Gumb investigates chirality, and probably nothing else.

The chirality you hear about in particle physics and quantum field theory is usually in reference to something a bit more subtle and less interesting than corkscrews and spinning things (which are usually described as having helicity), and you have to get shoulder-deep in the math before you can reach it. Saying “according to field theory, all particles have the same chirality” means about as much as “every gloopb is also a brotunm”. Without extensive mathematical study, it isn’t saying much.

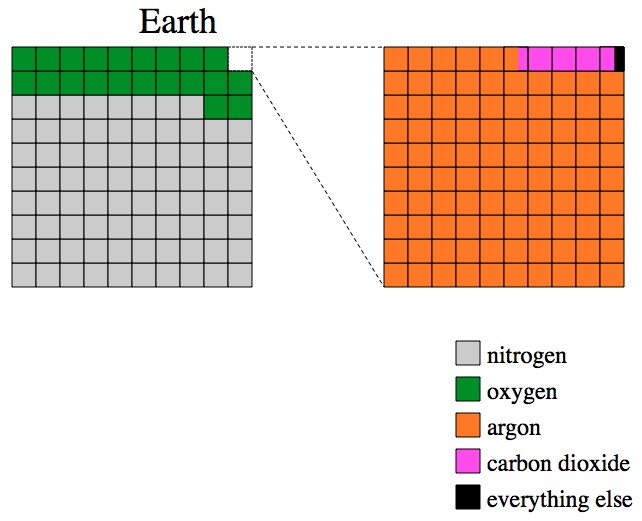

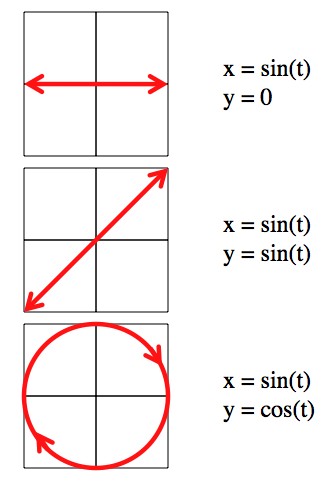

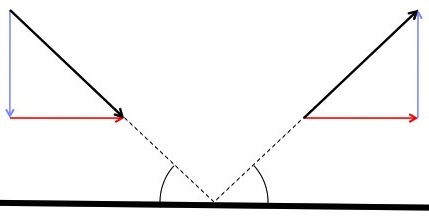

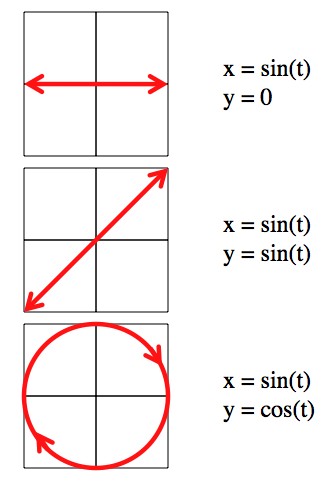

As for polarization: light is a “transverse wave”, which means it waves back and forth in a direction perpendicular to the direction it’s traveling, like a wave on a rope, instead of waving back and forth in the direction it’s moving, like sound and other pressure waves.

But (in 3 dimensions) there are two directions that are perpendicular to the direction of motion. For example, if a photon is coming straight at you, the wave could be either horizontal or vertical. These options are the “linear polarizations” of the light. It can be in either polarization or (because of the weirdness of quantum mechanics) a combination of both, which includes things like diagonal polarization.

You can also describe the polarization in terms of “circular polarization”, in which one polarization “corkscrews” clockwise as the photon travels, and the other corkscrews counter-clockwise. You can describe each circular polarization in terms of a weird combination of the linear polarizations, and vice versa.

You can describe the linear polarizations in terms of waves (sines and cosines) in the x and y directions. By delaying one wave with respect to the other (which is really the only difference between sin and cos) you can get elliptical or circular polarizations.
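Here is that delayed-wave idea as a minimal Python sketch. It adds an x wave and a y wave of equal strength (the amplitudes, frequency, and function names are illustrative assumptions) and follows the tip of the combined field over one period: with no delay the tip slides back and forth along a diagonal line, and with a quarter-period delay (cos turned into sin) it keeps a constant length while sweeping around in a circle.

```python
import math

def field(t, phase_delay, ax=1.0, ay=1.0, omega=2 * math.pi):
    """Electric field (Ex, Ey) at time t from two perpendicular waves.

    ax, ay: amplitudes of the x and y waves (hypothetical parameters).
    phase_delay: how far the y wave lags behind the x wave, in radians.
    """
    ex = ax * math.cos(omega * t)
    ey = ay * math.cos(omega * t - phase_delay)
    return ex, ey

def trace(phase_delay, steps=8):
    """Sample the field at evenly spaced times over one full period."""
    return [field(k / steps, phase_delay) for k in range(steps)]

# No delay: Ex and Ey rise and fall together, so the field only ever
# points along the diagonal (linear polarization).
for ex, ey in trace(0.0):
    print(f"{ex:+.2f} {ey:+.2f}")

# Quarter-period delay: cos(wt - pi/2) = sin(wt), so the field keeps the
# same length while its direction goes around (circular polarization).
radii = [math.hypot(ex, ey) for ex, ey in trace(math.pi / 2)]
print(radii)  # every entry is 1.0, up to rounding
```

Any other delay, or unequal amplitudes, gives the in-between case: an ellipse.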

This is one of those beautiful examples of the universe being horribly obtuse. You’d expect that, “in reality”, light is either linearly or circularly polarized. But all of the relevant laws can be written either way, and in physics: if it makes no difference, there may as well be no difference.

“Spin” is also sometimes called “intrinsic spin”. It is essentially the angular momentum of a particle. However, particles generally have more angular momentum than should be possible. For example, electrons have “spin ½”, which is a certain amount of angular momentum (specifically: ħ/2). But, since they’re so small, they need to be rotating very quickly to have that much angular momentum. If you do the math you find that electrons, for example, need to be rotating much faster than light (which is impossible). So, spin is a property of particles that carries angular momentum, but doesn’t actually involve rotation. It’s weird stuff.
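Here is that “do the math” step as a rough Python sketch. It assumes, purely for illustration, that the electron is a uniform solid ball the size of the “classical electron radius” (about 2.8 × 10⁻¹⁵ m). As far as anyone can tell electrons have no size at all, and a smaller ball would have to spin even faster. Setting the ball’s angular momentum, (2/5)mr²ω, equal to ħ/2 and solving for the speed at its equator:

```python
HBAR = 1.054571817e-34            # reduced Planck constant, J*s
M_E = 9.1093837015e-31            # electron mass, kg
C = 2.99792458e8                  # speed of light, m/s
R_CLASSICAL = 2.8179403262e-15    # classical electron radius, m (assumed size)

def surface_speed(angular_momentum, mass, radius):
    """Equator speed of a uniform solid sphere with the given angular momentum.

    Moment of inertia I = (2/5) m r^2, angular speed omega = L / I,
    and the equator moves at v = omega * r.
    """
    moment_of_inertia = 0.4 * mass * radius ** 2
    omega = angular_momentum / moment_of_inertia
    return omega * radius

v = surface_speed(HBAR / 2, M_E, R_CLASSICAL)
print(f"{v:.2e} m/s, about {v / C:.0f} times the speed of light")
```

Since v scales like 1/r, shrinking the assumed radius only makes the answer more absurd.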

For fairly obscure reasons spin can only take values that are multiples of ½ (0, ½, 1, 3/2, …). What’s entirely cool is that those fairly obscure reasons are based on the fact that we live in a 3-dimensional space. In two dimensions you can have particles with any spin. Particle physicists, who aren’t nearly as funny as they’d have you believe, call them “anyons” (for “any spin”).

Q: So chirality is just a property some particles have for a really complicated math-y reason. Fair enough. Does that have some interesting implications?

A: With a fairly liberal definition of interesting: kinda. All particles in our universe have the same chirality, but that isn’t quite coincidental. It’s more “fun fact” than useful.

Q: What is the difference between neutrinos and anti-neutrinos? There was a period where they thought that all neutrinos were massless. In that time, how could they differentiate the electron, muon and tauon neutrinos? Do the heavier neutrinos generally move slower, or do they have more energy, which results in roughly the same speed?

Quick aside: Electrons are members of a particle family called “Leptons”. The leptons include electrons (lightest and stable), muons, and tauons (both are far heavier, and unstable). In addition, all three have an associated “neutrino”, and all six of them (the electrons, muons, tauons, and each of their neutrinos) have an anti-particle twin. That’s 12 particles total. Neutrinos are very difficult to study because they just barely interact with ordinary matter.

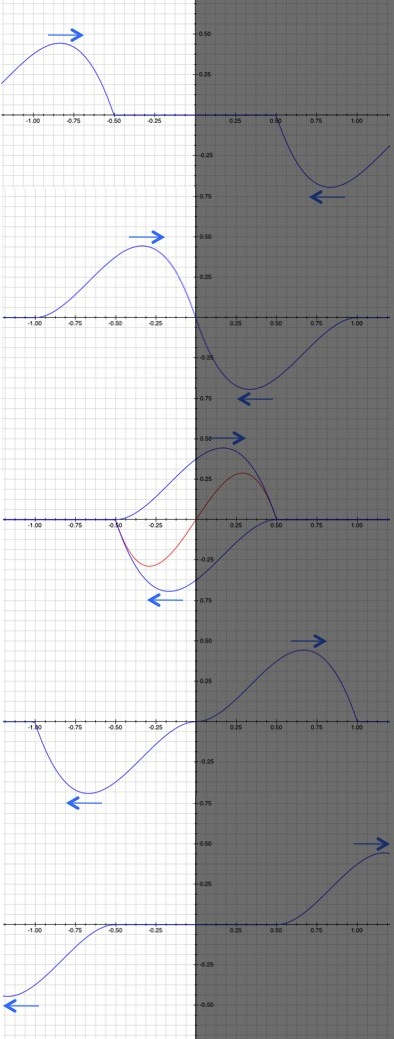

A: They always seem to travel at light speed, or more likely: really close to light speed. They’re lightweight enough that a tiny bump really sends them flying. It turns out that the different neutrinos are all different states of what is essentially the same particle (kinda like how the different polarizations of a photon are different states of the same particle). As a neutrino propagates through space it actually changes from one type to another, to another, and back. At least, as time goes on the chance of the neutrino being discovered to be either electron, muon, or tauon changes. This is (in a handwavy way) due to each state having a different frequency, which in turn is due to the different states having different masses. The difference between neutrinos and anti-neutrinos is exactly the difference between matter and anti-matter.
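To put rough numbers on both halves of that answer, here is a minimal Python sketch. The first function uses the standard relativistic relation v/c = √(1 − (mc²/E)²); the second is the textbook two-flavor vacuum oscillation formula, which simplifies the real three-flavor picture. The mass, energy, mixing angle, and Δm² values are illustrative assumptions, not measurements.

```python
import math

def speed_deficit(mass_ev, energy_ev):
    """How far below light speed a particle travels: 1 - v/c.

    v/c = sqrt(1 - x) with x = (m c^2 / E)^2. Writing 1 - sqrt(1 - x) as
    x / (1 + sqrt(1 - x)) keeps the answer from rounding to zero when x is tiny.
    """
    x = (mass_ev / energy_ev) ** 2
    return x / (1 + math.sqrt(1 - x))

def oscillation_probability(theta, delta_m2_ev2, length_km, energy_gev):
    """Two-flavor vacuum oscillation: chance that a neutrino born as one
    flavor is detected as the other after traveling length_km.

    P = sin^2(2 theta) * sin^2(1.267 * dm^2[eV^2] * L[km] / E[GeV])
    """
    phase = 1.267 * delta_m2_ev2 * length_km / energy_gev
    return math.sin(2 * theta) ** 2 * math.sin(phase) ** 2

# A hypothetical 0.1 eV neutrino carrying 1 MeV of energy lags light by
# only a few parts in 10^15: "basically light speed" is fair.
print(f"1 - v/c = {speed_deficit(0.1, 1e6):.1e}")

# Illustrative near-maximal mixing with dm^2 of a few 1e-3 eV^2, at 1 GeV.
for km in (0, 250, 500, 750, 1000):
    p = oscillation_probability(math.pi / 4, 2.5e-3, km, 1.0)
    print(f"{km:5d} km: P(flavor change) = {p:.2f}")
```

The flavor-change probability rises and falls with distance, and how fast it does so depends on the mass difference, which is the “different states, different frequencies” idea in formula form.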

You differentiate between them during the (extremely rare) interaction events. To conserve “lepton flavor” an interaction that destroys a muon neutrino must create a muon, and one that destroys an anti-electron-neutrino must generate an anti-electron, and so on. Basically, you suddenly see the appropriate particle produced or destroyed.
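Lepton flavor bookkeeping is simple enough to sketch in a few lines of Python. Each particle gets three numbers (electron, muon, and tau flavor, with anti-particles counting as −1), and a reaction passes only if each total is the same before and after. The particle labels are made-up strings for this sketch, and it checks lepton flavor only, not charge, energy, or anything else.

```python
# Lepton flavor numbers (L_e, L_mu, L_tau). Labels are ad-hoc for this sketch.
LEPTON_FLAVOR = {
    "e-": (1, 0, 0), "e+": (-1, 0, 0),
    "nu_e": (1, 0, 0), "anti_nu_e": (-1, 0, 0),
    "mu-": (0, 1, 0), "mu+": (0, -1, 0),
    "nu_mu": (0, 1, 0), "anti_nu_mu": (0, -1, 0),
    "tau-": (0, 0, 1), "tau+": (0, 0, -1),
    "nu_tau": (0, 0, 1), "anti_nu_tau": (0, 0, -1),
    # Protons and neutrons carry no lepton number at all.
    "p": (0, 0, 0), "n": (0, 0, 0),
}

def flavor_totals(particles):
    """Add up (L_e, L_mu, L_tau) over a list of particle labels."""
    totals = [0, 0, 0]
    for name in particles:
        for i, value in enumerate(LEPTON_FLAVOR[name]):
            totals[i] += value
    return tuple(totals)

def conserves_flavor(before, after):
    return flavor_totals(before) == flavor_totals(after)

print(conserves_flavor(["nu_mu", "n"], ["p", "mu-"]))             # True
print(conserves_flavor(["nu_mu", "n"], ["p", "e-"]))              # False
print(conserves_flavor(["anti_nu_e", "p"], ["n", "e+"]))          # True
print(conserves_flavor(["mu-"], ["e-", "anti_nu_e", "nu_mu"]))    # True
```

The second line is the point: a muon-neutrino that produces an electron instead of a muon would break the bookkeeping, which is why seeing a muon appear tells you what kind of neutrino came in.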

Q: But when they first discovered neutrinos and didn’t know there were different types, how could they still expect different types to be produced? Especially since there weren’t any differing characteristics between the different neutrinos. So how did they just ‘know’ that the particle created when a muon or tauon decayed wasn’t an electron-neutrino?

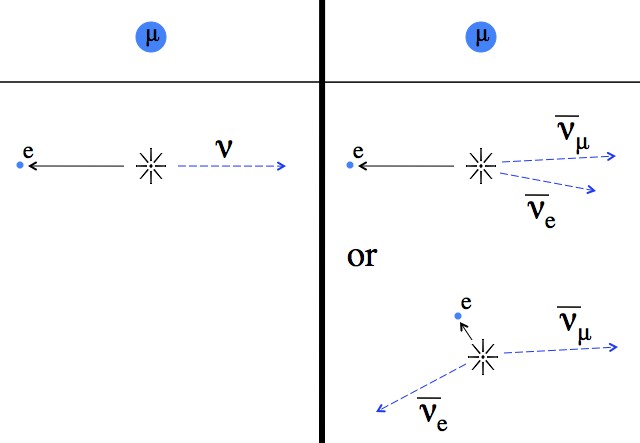

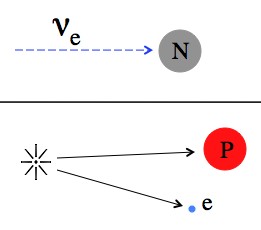

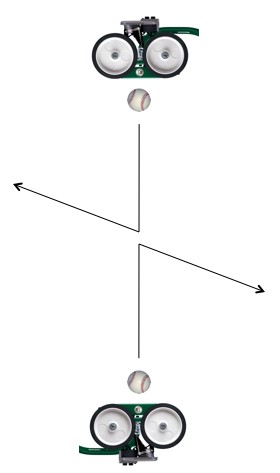

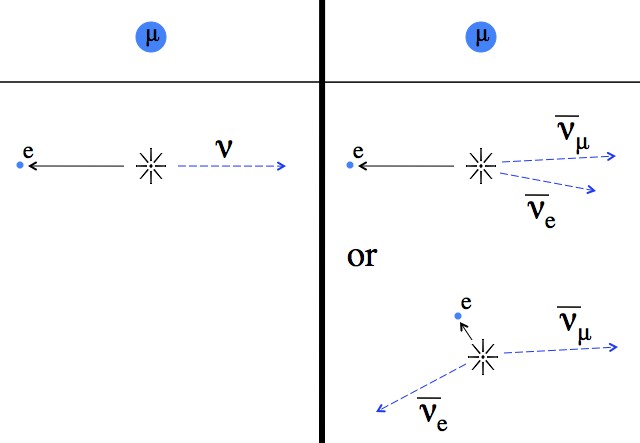

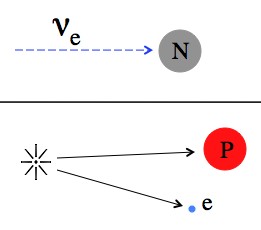

A: When new particles are produced they’re flung off with random directions and momenta, contingent on the total momentum of the system being conserved (so not completely random). During neutron decay (also called beta decay) we see the resulting proton and electron flying off in such a way that it’s very likely that only one new particle is being produced (now known to be the anti-electron-neutrino). But during muon decay we see the resulting electron flying off in a manner consistent with the rapid production of two particles (an anti-electron-neutrino and a muon-neutrino). In the muon decay picture, a bar over a symbol means “anti-”.

Muon decay: a stationary muon suddenly decays into an electron and neutrinos. Left: if, hypothetically, only one neutrino were created during muon decay, then, since the total energy released is always the same and momentum always needs to be balanced, the electron (the only thing we see) would always be ejected at the same speed. Right: in practice we see the newly created electron ejected at a wide variety of speeds. It takes a bit of analysis, but what we see is consistent with the creation of two unseen particles.
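The argument in that caption can be checked with a little relativistic kinematics. Lump everything except the electron into one “invisible” system with combined (invariant) mass M. For a muon at rest, energy and momentum conservation then fix the electron’s energy at E = (m_μ² + m_e² − M²)/(2m_μ), in units where c = 1. A single massless neutrino always has M = 0, so the electron energy is pinned; two neutrinos can have any M from 0 up to m_μ − m_e, so the electron energy spreads out. A minimal Python sketch, using rounded masses in MeV:

```python
M_MU = 105.6584  # muon rest energy, MeV (rounded)
M_E = 0.5110     # electron rest energy, MeV (rounded)

def electron_energy(invisible_mass):
    """Total electron energy (MeV) when a muon at rest decays into an
    electron plus "everything else" of combined invariant mass M (MeV).

    Two-body kinematics: E_e = (m_mu^2 + m_e^2 - M^2) / (2 m_mu).
    """
    return (M_MU ** 2 + M_E ** 2 - invisible_mass ** 2) / (2 * M_MU)

# One massless neutrino: M is always 0, so every decay gives the same energy.
print(f"single neutrino: {electron_energy(0.0):.2f} MeV")

# Two neutrinos: their combined mass ranges from 0 (both flying the same
# way) up to m_mu - m_e (electron left at rest), so the energy varies.
for m in (0.0, 30.0, 60.0, 90.0, M_MU - M_E):
    print(f"pair mass {m:6.2f} MeV -> electron energy {electron_energy(m):6.2f} MeV")
```

Which pair masses actually show up, and how often, takes the “bit of analysis” the caption mentions; the sketch only shows why one neutrino means one electron energy and two neutrinos mean a spread.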

More than that, the extremely long lifetime of the muon (about two microseconds) implied the existence of an unknown conserved quantity (lepton flavor). “Conserved quantities” force particles to maintain a variety of balances and to “carefully consider everything” before decaying. Basically, more things have to fall into place for a decay to happen. That increases the half-life.

On top of that, after extensive calculations the total neutrino generation rate of the Sun’s core was (accurately) estimated, but when we started measuring the solar neutrinos we found only about one third of what we should have (the neutrinos evolve from one form to another, and by the time we see them they’re thoroughly scrambled up). This (among other similar experiments) is good evidence that we won’t find a fourth lepton.
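The “one third” has a neat idealized reading. If an electron-neutrino is split evenly among three mass states and travels far enough that the oscillations wash out, the averaged chance of still finding an electron-neutrino is Σ|U_ei|⁴ = 3 × (1/3)² = 1/3. The Python sketch below just evaluates that sum. The even split is an assumption, and the real solar numbers also depend on matter effects inside the Sun and on mixing that isn’t even, so treat this as a cartoon of the reasoning rather than a fit to data.

```python
def averaged_survival(mixing_weights):
    """Long-distance, averaged probability that a neutrino is detected as
    the same flavor it started as: the sum of |U_ei|^4 over mass states.

    mixing_weights: the |U_ei|^2 values, which must add up to 1.
    """
    assert abs(sum(mixing_weights) - 1) < 1e-9, "weights must sum to 1"
    return sum(w ** 2 for w in mixing_weights)

print(averaged_survival([1 / 3, 1 / 3, 1 / 3]))        # three flavors, evenly mixed
print(averaged_survival([1 / 4, 1 / 4, 1 / 4, 1 / 4]))  # hypothetical fourth flavor
```

With a hypothetical fourth, evenly mixed neutrino the same sum drops to 1/4.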

Using nothing more than neutrinos, an array of gigantic detectors filled with the purest water in the known universe, and more computing power than most countries, this image of the neutrino-producing core of the Sun was created.

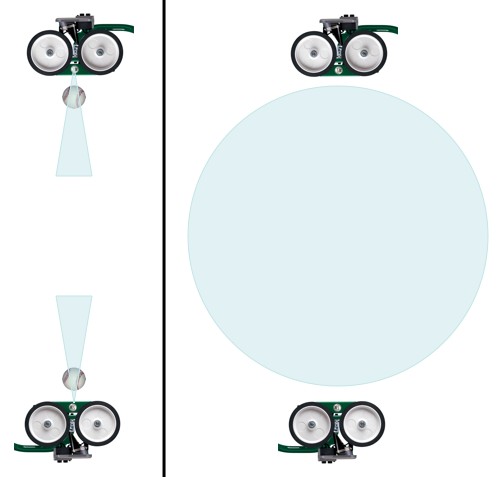

A similar, more controlled, experiment involves creating the neutrinos ourselves. Accelerate protons up to huge energies and then slam them into a brick of something solid (steel or tungsten or something). That sudden splash of energy tends to generate pions (“pie ons”) that in turn tend to immediately decay into muons and muon-neutrinos (both “anti” and “normal”).

Muon-neutrino generator (particle accelerator not shown).

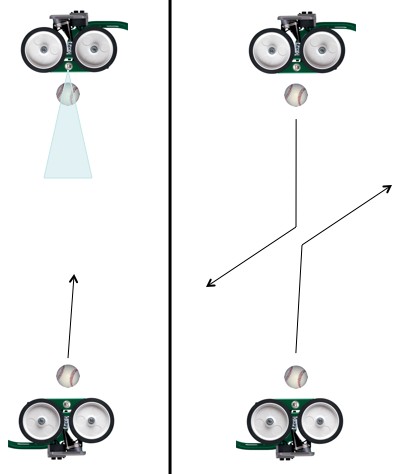

Neutrinos, being basically ghost particles, pass right out of the lab no problem, while every other kind of particle is left behind. You can then set up another lab miles away (and generally deep underground) to detect the neutrinos produced (for example, the Super-K). These detectors are essentially huge water tanks surrounded by very, very sensitive cameras.

Electron-neutrinos (for example) can be absorbed by neutrons to induce beta decay. This is rare (60 light years of lead can block about 50% of a neutrino beam), but if you’ve got an accelerator pumping out neutrinos, then you can catch a few.
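The “light years of lead” figure comes from exponential attenuation: each slice of material removes the same fraction of the beam, so the half thickness is ln 2 divided by (targets per volume × cross-section per target). The Python sketch below does that arithmetic for lead, treating every nucleon as an independent target. The cross-section is an assumed, order-of-magnitude value: real neutrino cross-sections depend strongly on energy (at high energies they grow roughly in proportion to it), so the output only shows that the answer comes out in light-years, not that it reproduces the 60 light-year figure.

```python
import math

N_A = 6.02214076e23         # Avogadro's number, 1/mol
LIGHT_YEAR_CM = 9.4607e17   # one light-year in centimeters

def half_thickness_ly(density_g_cm3, cross_section_cm2):
    """Thickness of material (light-years) that stops half of a beam.

    Assumes each nucleon (about 1 gram per mole of nucleons) is a target
    with the given cross-section, so the beam falls off as exp(-n * sigma * x).
    """
    nucleons_per_cm3 = density_g_cm3 * N_A
    mean_free_path_cm = 1.0 / (nucleons_per_cm3 * cross_section_cm2)
    return math.log(2) * mean_free_path_cm / LIGHT_YEAR_CM

LEAD_DENSITY = 11.34        # g/cm^3
SIGMA_ILLUSTRATIVE = 1e-44  # cm^2 per nucleon: an assumed, order-of-magnitude value
print(f"{half_thickness_ly(LEAD_DENSITY, SIGMA_ILLUSTRATIVE):.0f} light-years")
```

Doubling the cross-section halves the thickness, which is why higher-energy accelerator neutrinos are (slightly) easier to catch.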

"Induced beta decay". An electron-neutrino can cause a neutron to turn into a proton and an electron. A muon neutrino would create a proton and a muon. The anti-neutrinos can induce a reverse-beta-decay, where a proton is turned into a neutron and anti-electron (or anti-muon or anti-tauon).

Since the neutrinos were produced from an extremely high energy reaction they carry quite a punch. The particles resulting from the interaction move fast enough through the water in the detector that they produce “Cherenkov radiation”, which is essentially a “sonic boom made of light” (or, the result of it at least). By looking at how the “boom” hits the light sensors around the detector you can get energy and direction information about the original particle.
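The “sonic boom” picture boils down to two simple formulas: a charged particle only radiates if it beats light’s speed in the medium (β = v/c greater than 1/n), and the light then comes off in a cone with cos θ = 1/(nβ). A small Python sketch, assuming a refractive index of 1.33 for water:

```python
import math

def cherenkov_angle_deg(beta, n=1.33):
    """Opening angle of the Cherenkov cone, from cos(theta) = 1 / (n * beta).

    Returns None below threshold (beta <= 1/n), where no light is emitted.
    n = 1.33 is the usual refractive index of water.
    """
    cos_theta = 1.0 / (n * beta)
    if cos_theta >= 1.0:
        return None
    return math.degrees(math.acos(cos_theta))

def electron_threshold_kinetic_mev(n=1.33, rest_mev=0.511):
    """Smallest electron kinetic energy (MeV) that produces Cherenkov light."""
    gamma = 1.0 / math.sqrt(1.0 - 1.0 / n ** 2)
    return (gamma - 1.0) * rest_mev

print(cherenkov_angle_deg(0.70))   # None: slower than light in water
print(cherenkov_angle_deg(0.999))  # about 41 degrees
print(f"{electron_threshold_kinetic_mev():.3f} MeV")
```

Because the cone angle is fixed by the particle’s speed, the ring of light it paints on the detector wall points back along the particle’s direction of travel.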

Q: Are force-carrying particles launched in random directions at random times, or do they have a higher chance to be produced if another particle they can react with is close? If they are randomly produced, it would mean that there is a chance that, for instance, a gluon could travel really far before reaching something to react with, right?

A: The force carrying particles are almost always “virtual particles”. Statements about when they’re emitted, where they are, and why they’re emitted are all a little senseless. Virtual particle interactions necessarily involve superpositions of many states of the particles, including states where the particles don’t exist at all.

The force carriers can’t do anything to a particle unless there’s another particle around to do the opposite (i.e., the virtual photons produced by an electron can’t push it around unless there’s another charged particle that can move in the opposite direction and conserve momentum). Unfortunately, this is another example (like the double slit, or entanglement, or whatever) of how quantum mechanics stomps all over causality. Does the force carrier just happen to push the other particle because it got in the way, or was the force carrier only present because there was another particle to be pushed? The answer to both questions is: yes…ish.

Q: Why can’t quarks exist separately, but only in pairs/triplets? Why don’t gluons travel very far? Why is it that gluons can keep quarks inside of protons and neutrons and they can also keep protons and neutrons together in a nuclear core?

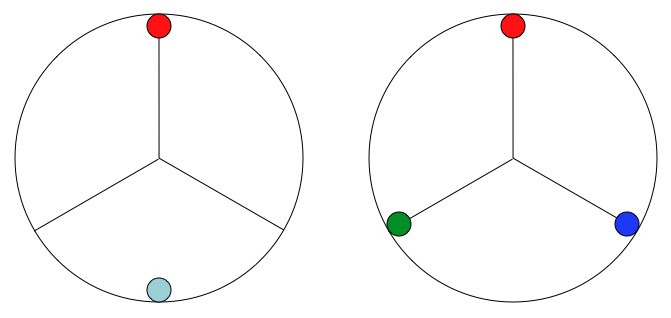

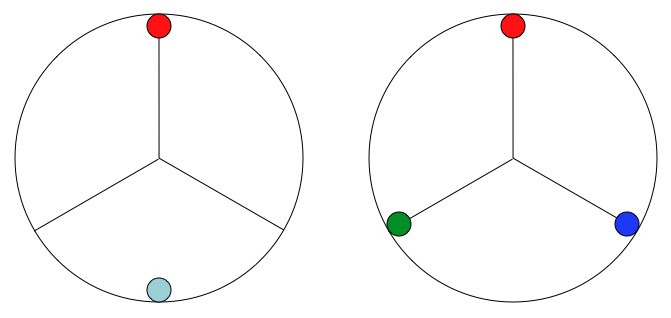

A: The short, annoying answer that I usually hear is that the energy required to separate quarks is enough to create enough new quarks that none of them are left alone. The more technical answer is that quark interactions have to preserve “color”. In physics we’ve got all kinds of conserved quantities: energy, momentum, baryon number, lepton flavor, color, etc. In any arrangement of quarks the “color” has to be zero. As in “red and anti-red” or “red, blue, and green”. Overall, the net color has to cancel. Of course, that’s not an explanation so much as a statement of fact. But as for why? Who knows. It’s just another conserved quantity.

Every particle has neutral "color", by "balancing the color wheel". There isn't any actual color going on, it's more a useful mnemonic for keeping track of things.
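The color-wheel bookkeeping in that caption can be written down directly. In the Python sketch below, red, green, and blue are unit arrows 120° apart (stored as complex numbers), each anti-color is the opposite arrow, and a combination counts as neutral when the arrows add to zero. This is only the mnemonic, not the underlying math of the strong force, and the particular angles are an arbitrary choice.

```python
import cmath
import math

# Red, green and blue as unit arrows 120 degrees apart on the color wheel.
COLOR = {name: cmath.exp(1j * math.radians(angle))
         for name, angle in (("red", 90), ("green", 210), ("blue", 330))}
# Each anti-color points the opposite way (list() snapshots the three colors first).
COLOR.update({"anti-" + name: -arrow for name, arrow in list(COLOR.items())})

def is_color_neutral(colors, tol=1e-9):
    """True if the colors' arrows cancel out, i.e. the wheel is balanced."""
    return abs(sum(COLOR[c] for c in colors)) < tol

print(is_color_neutral(["red", "green", "blue"]))   # True: like a proton or neutron
print(is_color_neutral(["red", "anti-red"]))        # True: like a pion
print(is_color_neutral(["red", "blue"]))            # False
print(is_color_neutral(["red", "red", "red"]))      # False
```

A lone quark is always a single arrow that can’t cancel, which is the mnemonic’s version of “no free quarks”.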

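Gluons themselves are massless, but they carry color too, so they’re confined in the same way quarks are and can’t wander off on their own. What holds protons and neutrons together in a nucleus is a leftover “residual” strong force, carried mostly by pions (quark/anti-quark pairs), and pions are fairly massive. In order to be created (generally) the energy required is much higher than the energy present, which means that in order to exist the pions have to “borrow against the uncertainty principle”. However, having an energy uncertainty that high means having a time uncertainty that’s small. Small enough, in fact, that these pions can only reach about as far as a neighboring proton or neutron before they blink out again.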
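The “borrow against the uncertainty principle” estimate can be turned into a one-line formula: borrowing an energy mc² lasts about ħ/(mc²), and even at light speed the carrier only covers about ħc/(mc²) in that time. The Python sketch below evaluates that rough range (an order-of-magnitude estimate, not a precise result) for the pion, the massive particle that carries most of the residual force between protons and neutrons, and, for comparison, for the W boson of the weak force.

```python
HBAR_C_MEV_FM = 197.327  # hbar * c in MeV * femtometers

def yukawa_range_fm(rest_energy_mev):
    """Rough reach (femtometers) of a force carried by a particle of this mass.

    Borrowed energy E = m c^2 lasts about hbar / E; moving at most at light
    speed, the carrier covers about c * hbar / E = hbar c / (m c^2).
    """
    return HBAR_C_MEV_FM / rest_energy_mev

print(f"pion:    {yukawa_range_fm(139.57):.2f} fm")   # roughly the size of a proton
print(f"W boson: {yukawa_range_fm(80379.0):.5f} fm")  # far smaller: why the weak force is so short-ranged
```

Heavier carrier, shorter reach: the same reasoning is why the weak force, with its very massive W and Z bosons, barely reaches past a single proton.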

Q: What is group theory and why is it related to quantum physics so much?

A: Group theory is an extremely generalized way of talking about sets of things, and the behaviors of those things. For example, the orientation of a particle is a set (of all the different ways it can be aligned), and all the ways you can rotate it are the “group actions” on the set of orientations. The age of intuitive physics ended at the beginning of the 20th century (relativity and quantum mech), and since we can’t use intuition to predict the behaviors of quantum mechanical things, we use group theory.
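A toy version of “a set of orientations plus the group of rotations acting on it” fits in a few lines. The Python sketch below uses quarter turns of a square (an assumption chosen for simplicity, far simpler than rotations in 3D) and checks the main group rules: combining two rotations gives another rotation, doing nothing counts as a rotation, every rotation can be undone, and rotating twice acts on an orientation the same way as rotating once by the combined amount.

```python
# Quarter turns of a square: rotation k means "turn by k * 90 degrees".
ROTATIONS = range(4)
ORIENTATIONS = range(4)  # 0 = north, 1 = east, 2 = south, 3 = west

def compose(a, b):
    """Do rotation b, then rotation a: the result is another quarter turn."""
    return (a + b) % 4

def act(rotation, orientation):
    """The group action: what a rotation does to an orientation."""
    return (orientation + rotation) % 4

closure = all(compose(a, b) in ROTATIONS for a in ROTATIONS for b in ROTATIONS)
has_identity = all(compose(0, a) == a == compose(a, 0) for a in ROTATIONS)
has_inverses = all(any(compose(a, b) == 0 for b in ROTATIONS) for a in ROTATIONS)
acts_consistently = all(
    act(compose(a, b), o) == act(a, act(b, o))
    for a in ROTATIONS for b in ROTATIONS for o in ORIENTATIONS
)
print(closure, has_identity, has_inverses, acts_consistently)
```

Nothing here depends on what the “orientations” physically are, which is the appeal: the same rules carry over to things (like electron spin) that intuition can’t handle.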

Group theory can be used in traditional physics, it’s just that it’s a little complicated, and unnecessary (the behavior of traditional physics is fairly intuitive). In quantum mech, there really aren’t options. For example, if you rotate a chair (or anything else) through 360° it returns to where it started. You can describe that using group theory, but needn’t bother. I mean, it’s a chair, how much math do you need? An electron, on the other hand, has to rotate through 720° to return to its original state. That’s really impossible to talk about normally (sober), so group theory is what you’re left to work with.
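The 720° business can be seen with nothing more than complex numbers. A rotation by angle θ about the z axis acts on a spin-½ state as exp(−iθσ_z/2), and since σ_z is diagonal that just multiplies the state’s two components by e^(−iθ/2) and e^(+iθ/2). The Python sketch below compares that with an ordinary rotation of a chair: after 360° the chair is back, but the electron’s state has picked up an overall factor of −1, and it takes 720° to undo that.

```python
import cmath
import math

def spin_half_rotation(angle):
    """Diagonal entries of exp(-i * angle * sigma_z / 2): a rotation of a
    spin-1/2 state about z (exact, because sigma_z is diagonal)."""
    return (cmath.exp(-0.5j * angle), cmath.exp(0.5j * angle))

def chair_rotation(angle):
    """Ordinary 2x2 rotation about z: what happens to a chair."""
    c, s = math.cos(angle), math.sin(angle)
    return ((c, -s), (s, c))

def close(a, b, tol=1e-9):
    return all(abs(x - y) < tol for x, y in zip(a, b))

# sum(..., ()) flattens the 2x2 tuple into (c, -s, s, c) for comparison.
print(close(sum(chair_rotation(2 * math.pi), ()), (1, 0, 0, 1)))  # chair: back after 360
print(close(spin_half_rotation(2 * math.pi), (-1, -1)))          # electron: minus sign after 360
print(close(spin_half_rotation(4 * math.pi), (1, 1)))            # electron: back after 720
```

An overall −1 can’t be seen on a lone electron, but it does show up when a rotated electron is interfered with an unrotated one, which is how the 720° behavior has been checked.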

Q: And finally: Feynman mentions that there were attempts in his time to combine QED (quantum electro-dynamics) and the weak force into a single theory. How is that going right now?

A: Good! It’s called “electroweak theory”, and it’s now a big part of the “standard model”. This is a bit over-simple, but in QED there’s a pretty natural place to plug in a mass term. The photon (the force carrying particle for electromagnetism) has no mass, but the force carriers for the weak force (the Z and W bosons) do. Once you plug in the mass, and do some surprisingly nasty math, the weak force falls right out. In fact, if you were so inclined, you could describe the photon and Z boson as two states (a massive high-energy state, and a massless low-energy state) of the same particle!
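One concrete version of “the photon and Z are two states of the same thing”: in electroweak theory the photon and the Z are two perpendicular mixtures of the same pair of underlying fields (usually written B and W³), rotated into each other by the weak mixing angle θ_W. Using the on-shell definition cos θ_W = m_W/m_Z and approximate boson masses, the Python sketch below computes that angle and checks that the two mixtures are independent. Sign conventions for the mixing differ between textbooks.

```python
import math

M_W = 80.379    # W boson mass in GeV (approximate)
M_Z = 91.1876   # Z boson mass in GeV (approximate)

# On-shell definition of the weak mixing angle: cos(theta_W) = m_W / m_Z.
theta_w = math.acos(M_W / M_Z)

# Photon (A) and Z as two perpendicular mixtures of the same fields B and W3:
#   A =  cos(theta_W) * B + sin(theta_W) * W3
#   Z = -sin(theta_W) * B + cos(theta_W) * W3
photon = (math.cos(theta_w), math.sin(theta_w))
z_boson = (-math.sin(theta_w), math.cos(theta_w))

overlap = photon[0] * z_boson[0] + photon[1] * z_boson[1]
print(f"theta_W is about {math.degrees(theta_w):.1f} degrees")
print(f"sin^2(theta_W) is about {math.sin(theta_w) ** 2:.3f}")
print(f"photon/Z overlap: {overlap:.1e}")  # zero: two independent states
```

The same angle that sets the mixture also ties the W and Z masses together, which is one of the checks that made electroweak theory convincing.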