The original question was: Assuming you could avoid any of the other effects of being in an active particle accelerator tube, how much damage would you expect from the particles smashing into you? How much would the amount of mass within the particles affect your chances of living or dying? And if you survived the impact, what kind of havoc would a mini black hole wreak inside you?

Physicist: If you took all of the matter that’s being flung around inside an active accelerator, and collected it into a pellet, it would be so small and light you’d never notice it. The danger is the energy.

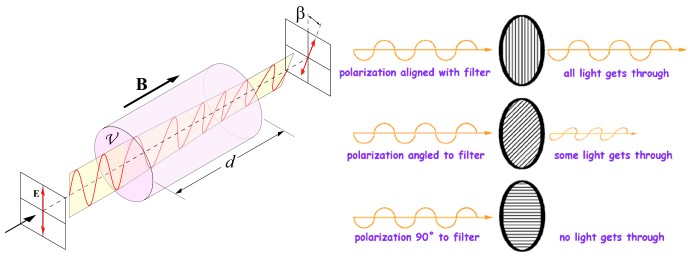

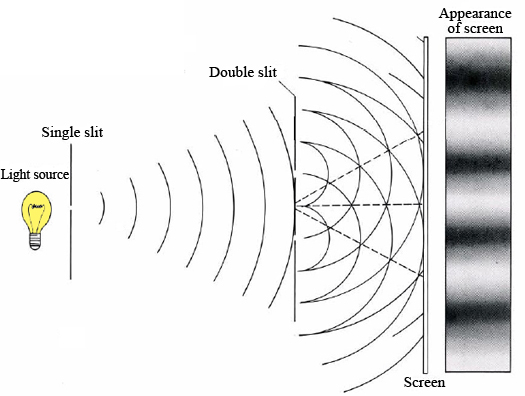

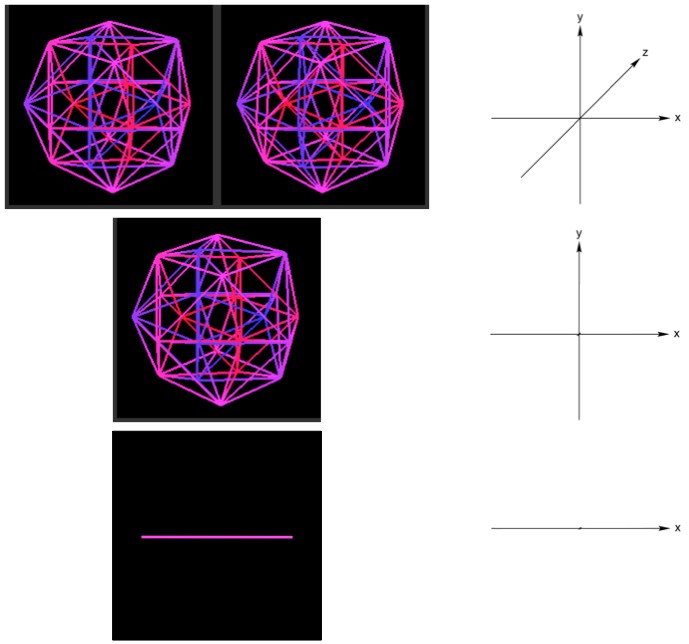

If you stood in front of the beam, you would end up with a very sharp, very thin line of ultra-irradiated dead tissue running through your body. It might even drill a hole through you. You might also be the first person in history to get pion (“pie on”) radiation poisoning (which does the same thing as regular radiation poisoning, but with pions!).

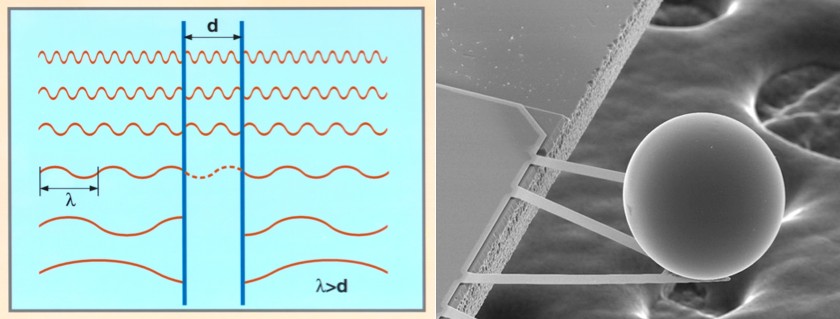

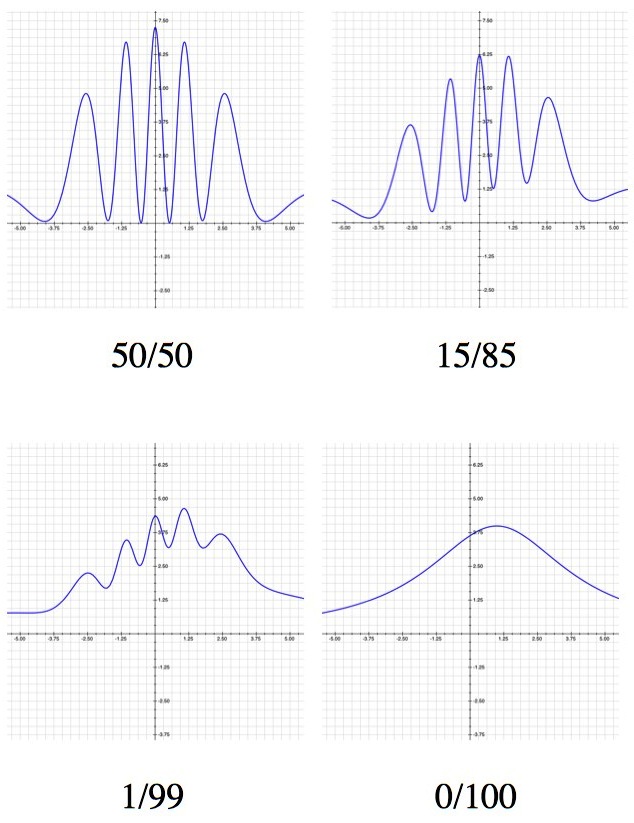

When it’s up and running, there’s enough energy in the LHC beam to flash boil a fair chunk of person (around 10-100 pounds, depending on the setting of the accelerator). However, even if you were standing in the beam, most of that energy would pass right through you. The higher a particle's kinetic energy, the smaller the fraction of that energy it tends to deposit. Rather than stopping, high energy particles tend to glance off of other particles. They deposit more energy overall than a low energy counterpart would, but most of their energy is carried away in a (slightly) new direction.
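To see where a "pounds of person" figure like that comes from, here is a rough back-of-the-envelope sketch. It assumes a body is just water at 37 °C, that "flash boiling" means heating it to 100 °C and then fully vaporizing it, and that the beam's stored energy is simply the number of protons times the energy per proton. The beam inputs in the example are illustrative round numbers, not actual LHC running parameters, and the function names are made up for this sketch.

```python
# Rough estimate: how much body-temperature water a given beam energy could
# heat to boiling and then fully vaporize. Treating a person as water is an
# assumption made for this sketch.
SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # specific heat of liquid water
LATENT_HEAT_J_PER_KG = 2.257e6     # latent heat of vaporization of water
BODY_TEMP_C = 37.0
BOIL_TEMP_C = 100.0
EV_TO_J = 1.602176634e-19          # joules per electronvolt
KG_TO_LB = 2.20462262


def stored_beam_energy_j(n_protons: float, energy_per_proton_ev: float) -> float:
    """Total kinetic energy carried by n_protons, each with the given energy, in joules."""
    return n_protons * energy_per_proton_ev * EV_TO_J


def mass_flash_boiled_kg(energy_j: float) -> float:
    """Mass of 37 C water that energy_j could heat to 100 C and then vaporize."""
    joules_per_kg = (SPECIFIC_HEAT_J_PER_KG_K * (BOIL_TEMP_C - BODY_TEMP_C)
                     + LATENT_HEAT_J_PER_KG)
    return energy_j / joules_per_kg


if __name__ == "__main__":
    # Hypothetical beam settings, chosen only to illustrate the arithmetic.
    for n_protons, ev in [(1e13, 3.5e12), (1e14, 3.5e12)]:
        e = stored_beam_energy_j(n_protons, ev)
        kg = mass_flash_boiled_kg(e)
        print(f"{n_protons:.0e} protons at {ev / 1e12:.1f} TeV: "
              f"{e / 1e6:.1f} MJ -> {kg:.1f} kg ({kg * KG_TO_LB:.0f} lb)")
```

Each kilogram costs about 2.5 MJ (mostly the latent heat), so the second case, about 56 MJ, works out to roughly 22 kg, or about 49 lb.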

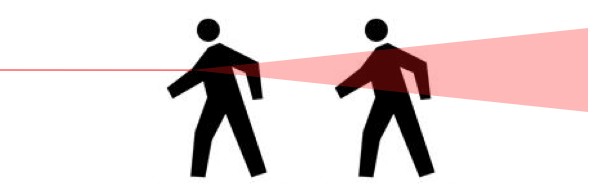

So instead of all the energy going into your body, the beam would glance off of atoms in your body, causing the beam to widen, and most of the energy would be deposited in whatever’s behind you (the accelerator only holds a very thin beam, so any widening will cause the beam to hit the walls).
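A toy calculation shows why a faster particle leaves behind a smaller *fraction* of its energy. This sketch assumes the particle is minimum-ionizing, losing a constant ~2 MeV per cm in water, and that the path through a body is a hypothetical 30 cm. It ignores nuclear collisions, scattering, and the slow logarithmic rise of energy loss at very high energy. Because of that, it deposits the same absolute amount at both energies, which understates the high-energy case. The only point is the shrinking fraction.

```python
# Toy model: energy lost to ionization by a fast charged particle crossing a slab
# of water. Constant stopping power is an assumption; real dE/dx rises slowly
# with energy, and nuclear interactions are ignored entirely here.
DEDX_MEV_PER_CM = 2.0  # approximate minimum-ionizing stopping power in water


def fraction_deposited(energy_mev: float, thickness_cm: float) -> float:
    """Fraction of the particle's energy lost over thickness_cm (capped at 1)."""
    lost_mev = min(DEDX_MEV_PER_CM * thickness_cm, energy_mev)
    return lost_mev / energy_mev


if __name__ == "__main__":
    path_cm = 30.0  # hypothetical path length through a body
    for label, e_mev in [("70 GeV (U-70 scale)", 7.0e4), ("7 TeV (LHC design)", 7.0e6)]:
        print(f"{label}: {fraction_deposited(e_mev, path_cm):.1e} of its energy deposited")
```

A hundredfold increase in energy means a hundredfold smaller fraction stays behind in the body. The rest goes on to whatever is downstream.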

CERN's motto, "CERN: Don't be a hero," refers to the fact that if you see someone in the beam, stepping in front of them just makes things worse.

If the LHC ever manages to create a micro black hole, that micro black hole should “pop” immediately after being created. The smaller a black hole, the faster it loses mass and energy to Hawking radiation, and a minimum-mass black hole radiates so fast that it’s easier to describe as a (small) explosion. Most models predict that the LHC won’t come remotely close to creating a minimum-mass black hole, but one or two of the farther fringes of string theory say “maybe”. If the LHC does create a tiny black hole, it would release all of the energy of two TeV-scale particles slamming together (which has all the fury of an angry ant stomping) as a burst of radiation.
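The "smaller means faster" rule can be read off the standard semiclassical formulas for an ordinary (four-dimensional, non-rotating) black hole of mass $M$: its Hawking temperature goes as $1/M$ and its evaporation time as $M^3$. These formulas assume no extra dimensions, so they are only a rough guide to the string-theory-inspired scenarios above, where the details differ. The last line assumes two protons at the LHC design energy of 7 TeV each, just to put a number on the "angry ant."

```latex
T_H = \frac{\hbar c^{3}}{8 \pi G M k_B}
\qquad
t_{\mathrm{evap}} \approx \frac{5120 \, \pi \, G^{2} M^{3}}{\hbar c^{4}}

E = 2 \times 7\,\mathrm{TeV} = 1.4 \times 10^{13}\,\mathrm{eV}
  \times 1.602 \times 10^{-19}\,\mathrm{J/eV}
  \approx 2.2 \times 10^{-6}\,\mathrm{J}
```

Halving the mass doubles the temperature and cuts the lifetime by a factor of eight, which is why the tiniest black holes go out with a bang rather than a whimper.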

Update (6/27/2011): A concerned reader pointed out that there is at least one known particle-beam accident. A Russian nuclear scientist named Anatoli Bugorski, who at the time was working through his PhD at the U-70 synchrotron (whose maximum proton energy is roughly 1% of the LHC’s), was kind enough to accidentally put his head in the path of a proton beam.

He’s doing pretty well these days (considering). The injury took the form of a thin strip of intense radiation damage that killed all the tissue in a straight line through the left side of his head. Although that line passed through a lot of brain, he still managed to finish his PhD.