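Physicist: The 2nd law of thermodynamics states that in any closed system (strictly speaking, an isolated one that trades neither energy nor matter with its surroundings) entropy will increase over time, or at best stay the same. The exact rate at which entropy increases is situation dependent (e.g., being on fire or not).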

As a quick aside, one of my favorite Creationist (pardon, “Intelligent Design”) arguments uses the second law. That is, a living Human body has far less entropy than an equivalent amount of (most) inorganic matter, so how could living things have come from non-living things? Well, that’s a stunningly hard question, and we’re working on it. Patience. However, entropy isn’t a problem here, because the system of the biosphere is not closed: energy pours in and out of it all the time. We get a constant supply of low-entropy visible light from the Sun, and the Earth in turn sprays out a hell of a lot of high-entropy infra-red light. For every one photon we get from the Sun we re-emit about twenty randomly into space. That huge entropy sink is more than enough to offset all life, and a lot more.
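Here is a rough sketch of where "about twenty" can come from. It assumes the Earth is in steady state (it radiates as much energy as it absorbs), that both sunlight and Earth's infra-red glow are roughly thermal, and that the average energy of a thermal photon is proportional to the temperature of whatever emitted it. The two temperatures are round-number assumptions, not figures from this post, and the function names are made up for illustration.

```python
# Round-number temperatures (assumptions for illustration only).
T_SUN = 5800.0    # K, roughly the temperature of the Sun's visible surface
T_EARTH = 290.0   # K, roughly the temperature of the Earth's surface


def photons_out_per_photon_in(t_hot, t_cold):
    """Photons re-emitted per photon absorbed, in steady state.

    Energy in equals energy out, and the mean energy of a thermal photon
    scales with temperature, so the photon count scales as t_hot / t_cold.
    """
    return t_hot / t_cold


def entropy_per_joule(t):
    """Entropy (J/K) carried by one joule of thermal radiation at temperature t.

    Uses the standard blackbody radiation result S = 4E / (3T).
    """
    return 4.0 / (3.0 * t)


ratio = photons_out_per_photon_in(T_SUN, T_EARTH)

# Entropy shipped out with each joule of infra-red, minus the entropy
# that arrived with the joule of sunlight that paid for it.
net_export = entropy_per_joule(T_EARTH) - entropy_per_joule(T_SUN)

print(f"photons out per photon in: {ratio:.0f}")
print(f"net entropy exported per joule: {net_export:.2e} J/K")
```

The exact ratio depends on which temperatures you pick, but any reasonable choice lands in the neighborhood of twenty, and the net entropy exported per joule always comes out positive.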

The Bowhead Whale and the Galápagos Tortoise: two species lucky enough to live for a couple hundred years.

Back to the point. Is the long, grinding decay of the body inevitable in theory as well as in practice? Nope.

Nothing survives the heat death of the universe, of course, but there’s strong evidence that, if the environment stayed more or less the way it is today, then something “Human-ish” could live (maybe) indefinitely. Single-celled organisms never die of old age; they die either for environmental reasons or from tiny murder. Instead of dying when they get old, they split in half, and each half then grows to full size and repeats the process. In a very literal sense, we’re all just different parts of the same, still-living, ancient primordial life form (much love, Chopra).

There are (very) living examples of creatures today that just don’t die on their own, such as the Turritopsis nutricula jellyfish (which has been shown to cycle indefinitely between its adult and adolescent forms) and maybe (but probably not) the Hydra genus. The point is: dying of old age is not a written-in-stone requirement for life.

The hydra, which might be immortal, and the Turritopsis nutricula jellyfish, which almost certainly is.

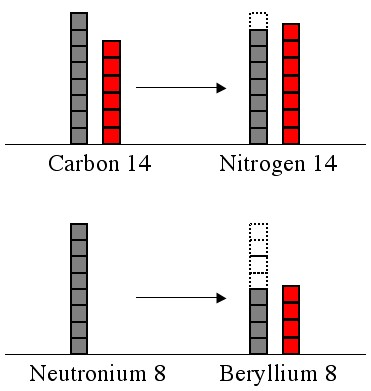

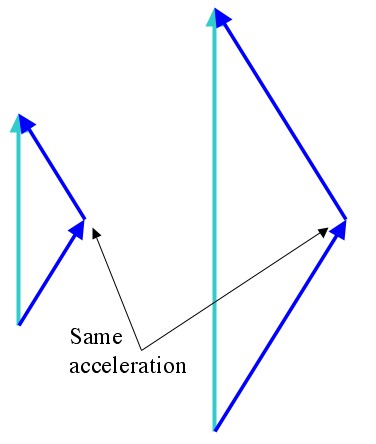

So, things don’t grow old and die due to entropy (strictly). The effect of entropy seems to take the form of the accumulation of injuries, toxins, parasites, mutations, and general wear and tear. The “choice” that a species has to make is between fixing bodies as they accrue damage, or shitcanning them and starting over. By “shitcan and start over” I mean put a lot of energy into perfectly maintaining a few hundred cells (the “germ line”) while fixing most, but not all, of the damage throughout the rest of the body. New creatures that grow out of the germ line (babies) start with a damage-free blank slate.

Also, bonus! Dying of old age helps clear the way for evolution to do its thing. The young (and slightly different) merely have to compete with each other, instead of with well-established and ancient creatures. Without natural death Earth might be home to nothing more interesting than mold (which is boring).

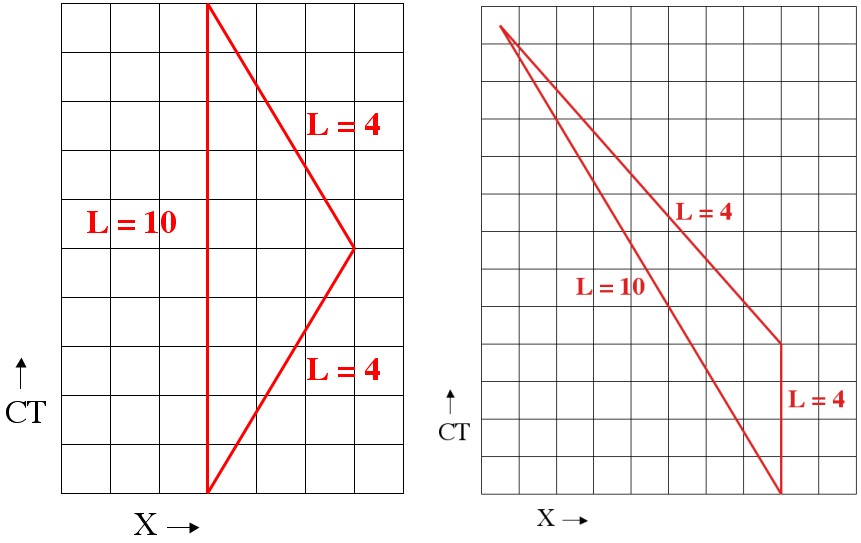

Now consider this: the statement that “entropy always increases” is just a fancy way of saying that “the world tends toward the most likely/stable end” or “the world tends to be in a state that has the most ways of happening”. In this case, there are a lot more ways to be dead than alive. As a result, you may have noticed that there are plenty of ways to accidentally die, but really just the one way to accidentally come to life. Statisticians (being weird and morbid) have figured out that if Humans were biologically immortal the average lifespan would be around 600-700 years. It takes about that long to slip in the shower or something (statistically).
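To see how a number like that falls out, here is a minimal sketch that assumes the only thing that can kill you is a fixed, age-independent chance of a fatal accident each year. Under that assumption the average lifespan is just one divided by the yearly risk. The risk used below (1 in 650 per year) is a hypothetical value picked to land inside the 600-700 year range above; it is not a real actuarial figure, and the function names are invented for illustration.

```python
import random


def expected_lifespan(annual_death_risk):
    """Average lifespan in years when each year carries the same risk of death.

    This is the mean of a geometric distribution: 1 / p.
    """
    return 1.0 / annual_death_risk


def simulate_lifespan(annual_death_risk, rng):
    """Live year by year until the accident finally happens."""
    years = 0
    while True:
        years += 1
        if rng.random() < annual_death_risk:
            return years


# Hypothetical yearly chance of a fatal accident (an assumption, see above).
ANNUAL_RISK = 1 / 650

rng = random.Random(0)  # fixed seed so the run is repeatable
lifespans = [simulate_lifespan(ANNUAL_RISK, rng) for _ in range(5000)]
simulated_mean = sum(lifespans) / len(lifespans)

print(f"formula:    {expected_lifespan(ANNUAL_RISK):.0f} years")
print(f"simulation: {simulated_mean:.0f} years")
```

The average hides a lot of spread: with a constant yearly risk, plenty of simulated "immortals" slip in the shower in their first century, while a lucky few last for millennia.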