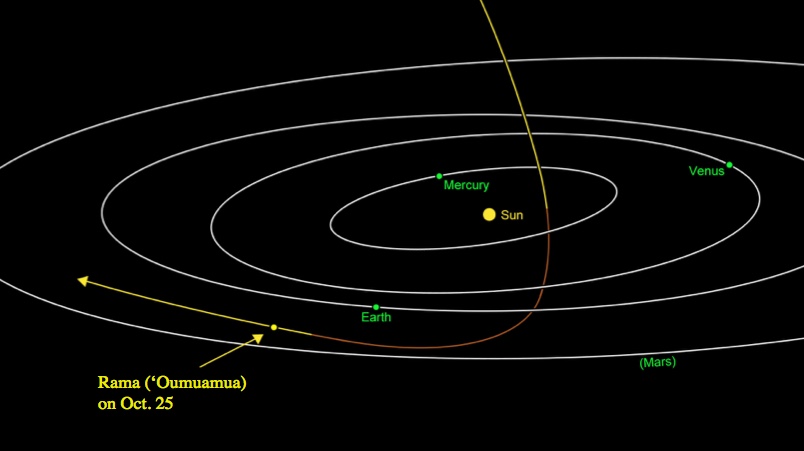

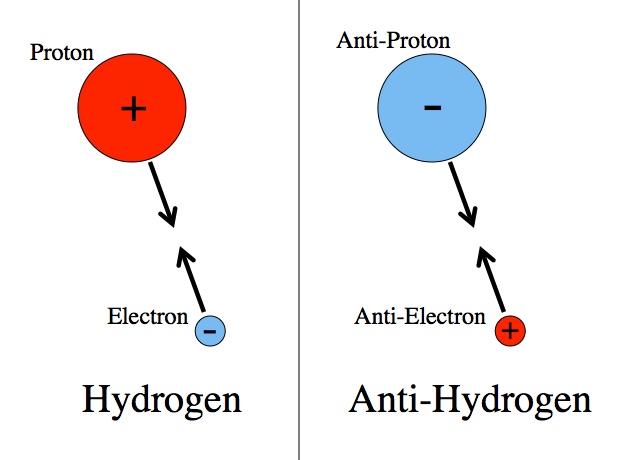

Physicist: Anti-matter is exactly the same as ordinary matter but opposite, in very much the same way that a left hand is exactly the same as a right hand… but opposite. Every anti-particle has exactly the same mass as its regular-particle counterpart, but with a bunch of its other characteristics flipped. For example, protons have an electric charge of +1 and a baryon number of +1. Anti-protons have an electric charge of -1 and a baryon number of -1. The positive/negativeness of these numbers is irrelevant. A lot like left and right hands, the only thing that’s important about positive charges is that they’re the opposite of negative charges.

Hydrogen is stable because its constituent particles have opposite charges and opposites attract. Anti-hydrogen is stable for exactly the same reason.

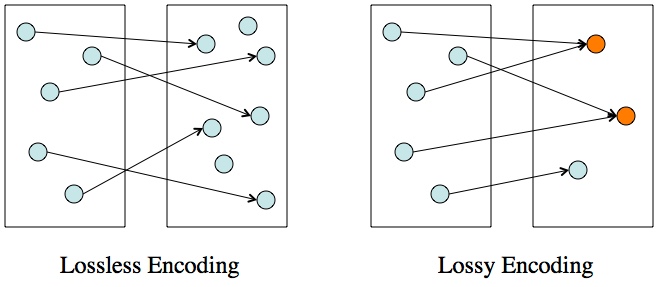

Anti-matter acts, in (nearly) every way we can determine, exactly like matter. Light (which is its own anti-particle) interacts with one in exactly the same way as the other, so there’s no way to just look at something and know which is which. The one and only exception we’ve found so far is beta decay. In beta decay a neutron fires a new electron out of its “south pole”, whereas an anti-neutron fires an anti-electron out of its “north pole”. This is exactly the difference between left and right hands. Not a big deal.

Left: A photograph of an actual flower made of regular matter. Right: An artistic representation of a flower made of anti-matter.

So when we look out into the universe and see stars and galaxies, there’s no way to tell which matter camp, regular or anti, they fall into. Anti-stars would move and orbit the same way and produce light in exactly the same way as ordinary stars. Like the sign of electrical charge or the handedness of hands, matter and anti-matter are indistinguishable until you compare them.

But you have to be careful when you do, because when a particle comes into contact with its corresponding anti-particle, the two cancel out and convert all of their mass into energy (usually lots of light). If you were to casually grab hold of 1 kg of anti-matter, it (along with 1 kg of you) would release about the same amount of energy as the largest nuclear detonation in history.

The Tsar Bomba from 100 miles away. This is what 2 kg worth of energy can do (when released all at once).

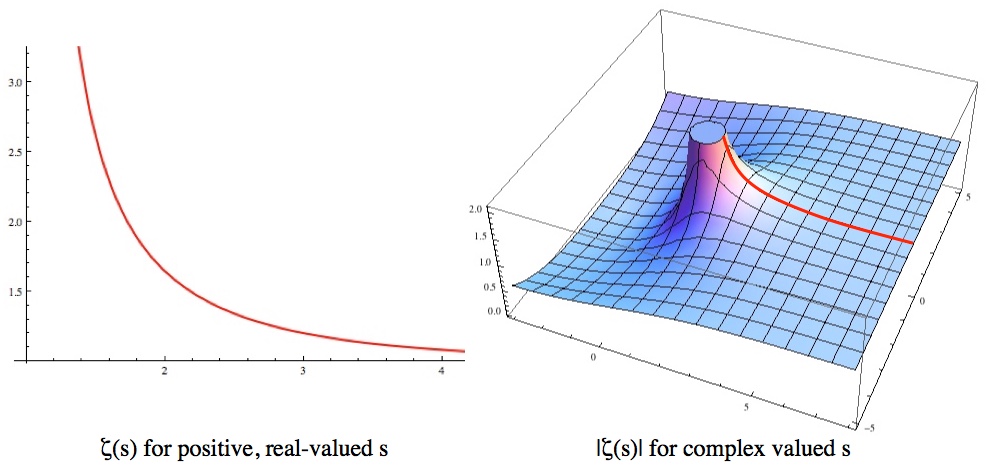

To figure out exactly how much energy is tied up in matter (either kind), just use the little-known relation between energy and matter: E = mc². When you do, be sure to use standard units (kilograms for mass, meters per second for the speed of light, and Joules for energy) so that you don’t have to sweat the unit conversions. For 2 kg of matter, E = (2 kg)(3×10⁸ m/s)² = 1.8×10¹⁷ J.
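The same arithmetic as a quick sketch, for anyone who wants to plug in their own masses. The function name is made up for illustration, and the TNT conversion (one megaton of TNT is 4.184×10¹⁵ J) is not from the post; it's only there to put the result on the same scale as the Tsar Bomba, whose yield is usually quoted at around 50 megatons.

```python
# Rest energy of 2 kg of mass (1 kg of anti-matter plus 1 kg of you), via E = m c^2.
C = 299_792_458.0               # speed of light in m/s (exact, by definition)
JOULES_PER_MEGATON = 4.184e15   # energy of one megaton of TNT, in joules

def rest_energy_joules(mass_kg: float) -> float:
    """Energy in joules released if mass_kg of mass is converted entirely to energy."""
    return mass_kg * C ** 2

energy = rest_energy_joules(2.0)
megatons = energy / JOULES_PER_MEGATON
print(f"{energy:.2e} J, about {megatons:.0f} megatons of TNT")
```

Using the exact speed of light instead of 3×10⁸ m/s changes the answer by well under one percent.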

When anti-matter and matter collide it’s hard to miss. We can’t tell whether a particular chunk of stuff is matter or anti-matter just by looking at it, but because we don’t regularly see stupendous space kablooies as nebulae collide with anti-nebulae, we can be sure that (at least in the observable universe) everything we see is regular matter. Or damn near everything. Our universe is a seriously unfriendly place for anti-matter.

So why would we even suspect that anti-matter exists? First, when you re-write Schrödinger’s equation (an excellent way to describe particles and whatnot) to make sense in the context of relativity (the fundamental nature of spacetime) you find that the equation that falls out has two solutions: a sort of left and right form for most kinds of particles (matter and anti-matter). Second, and more importantly, we can actually make anti-matter.

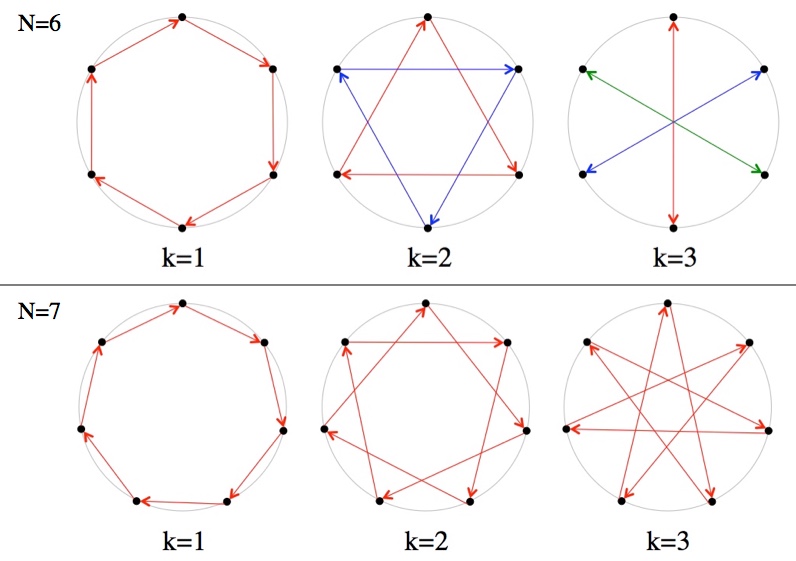

Very high energy situations, like those in particle accelerators, randomly generate new particles. But these new particles are always produced in balanced pairs; for every new proton (for example) there’s a new anti-proton. The nice thing about protons is that they have a charge and can be pushed around with magnets. Conveniently, anti-protons have the opposite charge and are pushed in the opposite direction by magnets. So, with tremendous cleverness and care, the shrapnel of high speed particle collisions can be collected and sorted. We can collect around a hundred million anti-particles at a time using particle accelerators (to create them) and particle decelerators (to stop and store them).

Anti-matter, it’s worth mentioning, is (presently) an absurd thing to build a weapon with. Considering that it takes the energy of a small town to run a decent particle accelerator, and that a mere hundred million anti-protons have all the destructive power of a single drop of rain, it’s just easier to throw a brick or something.
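To see just how feeble a hundred million anti-protons are, here is a rough sketch of the energy released if every one of them annihilates with an ordinary proton. The proton rest energy (about 938.272 MeV) and the electron-volt-to-joule conversion are standard values, not numbers from the post, and the function name is hypothetical.

```python
# Energy released when n anti-protons each annihilate with one proton.
PROTON_REST_ENERGY_MEV = 938.272     # rest energy of a proton (an anti-proton has the same mass)
JOULES_PER_EV = 1.602176634e-19      # one electron-volt in joules

def annihilation_energy_joules(n_antiprotons: float) -> float:
    """Total rest energy of n anti-protons plus the n protons they annihilate with."""
    total_ev = 2 * n_antiprotons * PROTON_REST_ENERGY_MEV * 1e6
    return total_ev * JOULES_PER_EV

energy = annihilation_energy_joules(1e8)
print(f"{energy:.3f} J")
```

That comes out to a few hundredths of a joule.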

The highest energy particle interactions we can witness happen in the upper atmosphere; to see them we just have to be patient. The “Oh My God Particle” arrived from deep space with around ninety million times the energy of the particle beams in CERN, but we only see such ultra-high energy particles every few months and from dozens of miles away. We bothered to build CERN so we could see (comparatively feeble) particle collisions at our leisure and from really close up.
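The "ninety million" figure can be checked on the back of an envelope. This sketch assumes two numbers that are not in the post: an energy of roughly 3.2×10²⁰ eV for the "Oh My God Particle", and a beam energy of 3.5 TeV per proton for the accelerator (a higher beam energy would shrink the ratio accordingly).

```python
# Ratio of the "Oh My God Particle" energy to the energy of one beam proton.
OMG_PARTICLE_EV = 3.2e20   # assumed energy of the cosmic ray, in electron-volts
BEAM_PROTON_EV = 3.5e12    # assumed beam energy per proton (3.5 TeV), in electron-volts

ratio = OMG_PARTICLE_EV / BEAM_PROTON_EV
print(f"about {ratio / 1e6:.0f} million times the beam energy")
```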

Those upper-atmosphere collisions produce both matter and anti-matter, some tiny fraction of which ends up caught in the Van Allen radiation belts by the Earth’s magnetic field. In all, there are a few nanograms of anti-matter up there. Presumably, every planet and star with a sufficient and stable magnetic field has a tiny, tiny amount of anti-matter in orbit just like we do. So if you’re looking for all-natural anti-matter, that’s the place to look.

But if anti-matter and matter are always created in equal amounts, and there’s no real difference between them (other than being different from each other), then why is all of the matter in the universe regular matter?

No one knows. It’s a total mystery. Isn’t that exciting? Baryon asymmetry is a wide open question and, not for lack of trying, we’ve got nothing.

The rose photo is from here.

Update: A commenter kindly pointed out that a little anti-matter is also produced during solar flares (which are definitely high-energy) and streams away from the Sun in the solar wind.